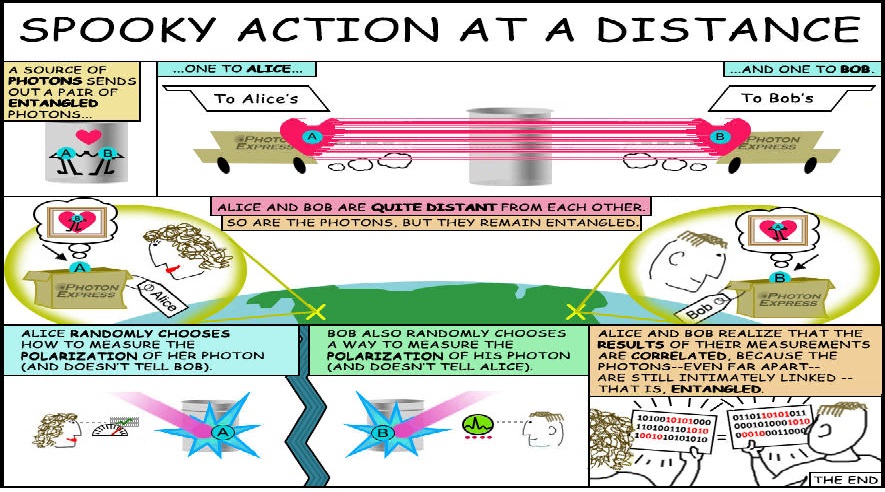

The quantum entanglement story began in 1935 with the EPR paper. That’s where Einstein, Podolsky, and Rosen said quantum mechanics must be incomplete, because it predicts a system in two different states at the same time. Later that year Bohr replied saying spooky action at a distance could occur. Then Schrödinger came up with a paper where he talked of entanglement, a paper where he used his cat to show how ridiculous the two-state situation was, and a paper saying he found spooky action at a distance to be repugnant. He also compared it to Voodoo magic, where a savage “believes that he can harm his enemy by piercing the enemy’s image with a needle”.

Credits: NASA/JPL-Caltech, see Particles in Love: Quantum Mechanics Explored in New Study | NASA

Then in 1952 Bohm came up with two hidden variables papers which retained the spooky action at a distance. He also came up with the EPRB experiment, which was the subject of a paper in 1957. Then in 1964 Bell came up with papers On the Problem of Hidden Variables in Quantum Mechanics and On the Einstein Podolsky Rosen Paradox. Bell said the issue was resolved in the way Einstein would have liked least, and gave a mathematical “proof” which is usually called Bell’s theorem. Then in 1969 we had the CHSH paper on a Proposed Experiment to Test Local Hidden-Variable Theories by Clauser et al. Then in 1972 we had Clauser and Freedman’s Experimental Test of Local Hidden-Variable Theories. Then in 1981 we had Experimental Tests of Realistic Local Theories via Bell’s Theorem by Aspect et al, followed by two further papers in 1982. Then in 1998 we had Violation of Bell’s inequality under strict Einstein locality conditions by Zeilinger et al.

Convinced the physics community in general that local realism is untenable

All these so-called Bell test experiments used photons and polarizing filters. They featured ever-increasing complexity to cater for so-called loopholes, and are said to have “convinced the physics community in general that local realism is untenable”. So much so, that the 2022 physics Nobel Prize was awarded to Clauser, Aspect, and Zeilinger “for experiments with entangled photons, establishing the violation of Bell inequalities and pioneering quantum information science”. This Nobel prize was awarded exactly a hundred years after Bohr was awarded a Nobel prize in 1922. There’s just one problem. It’s all bullshit.

Quantum bullshit from the man who said reality does not exist until you measure it

The bullshit started in 1935 with Bohr’s reply to the EPR paper. Bohr’s paper was rambling, off-topic, and pretentious. He didn’t address the issue at all. Instead he gave a lofty lecture on complementarity and the double slit experiment, then energy and time, and space and time. In the middle of all the condescending bluster he slipped in this: “an influence on the very conditions which define the possible types of predictions regarding the future behavior of the system”. That’s spooky action at a distance. He also slipped in this: “we see that the argumentation of the mentioned authors does not justify their conclusion”. No, we don’t. We see Bohr ducking the issue. We see quantum bullshit from the man who said reality does not exist until you measure it. From the man who said the Moon isn’t there if nobody looks. From the man who said quantum mechanics surpasseth all human understanding, and you can never hope to understand it, so don’t even try. Bohr even had the gall to say the “quantum-mechanical description of physical phenomena would seem to fulfill, within its scope, all rational demands of completeness”. Even though quantum mechanics doesn’t tell us what a photon is, how pair production works, or what the electron is. Even though it dismisses electron spin as an abstract notion via a spinning faster than light non-sequitur. Despite the hard scientific evidence of the Einstein-de Haas effect, Larmor precession, and the wave nature of matter. And do note the use of the word rational. Bohr was just so patronising. You will not find one person who has a good word to say about his paper. Even Clauser said he found Bohr’s ideas “muddy and difficult to understand”.

A whole layer cake of bullshit

The next load of bullshit came from Bohm with his 1952 papers. I say that because people talk about de Broglie-Bohm theory, and de Broglie won his 1929 Nobel Prize for the discovery of the wave nature of electrons. Did Bohm somehow miss the bit that said matter is, by its nature, a wave motion? He couldn’t have. Which means he was going off on one when he said the wavefunction was a mathematical representation of a real field which exerts a force on a particle akin to an electromagnetic field. Especially when he then said it can “transmit uncontrollable disturbances instantaneously from one particle to another”. In one fell swoop Bohm had contradicted the wave nature of matter, dug up spooky action at a distance, and then pulled the stake from its heart. He had resurrected a myth and set it free to stalk the land. Not only that, but in 1957 he ignored the original EPR position v momentum debate, and said spooky action at a distance applied to the Stern-Gerlach measurements of spin ½ particles.

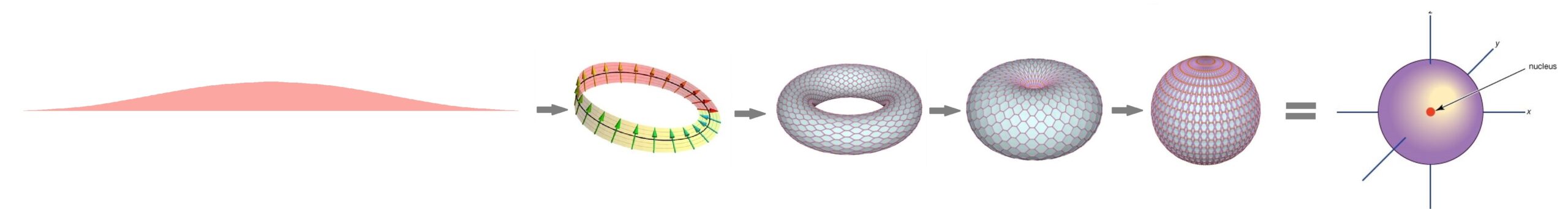

Spindle torus animation by Adrian Rossiter, see antiprism.com, resized and reversed by me via EZgif

He said “if the x component is definite, then the y and z components are indeterminate”. That makes as much sense as saying the South side of a whirlpool is definitely spinning from West to East, but the spin on the East side is indeterminate. Conservation of angular momentum says it isn’t. But Bohm was so full of bullshit he even said “in any single case, the total angular momentum will not be conserved”. On top of that, he said it was only practicable to test the EPR paradox via “the polarization properties of correlated photons”. Photon polarization is nothing like spin ½. This is just a whole layer cake of bullshit from a wannabe mystic who didn’t understand the photon, pair production and annihilation, the electron, the positron, or anything else.

A barrelful of blarney

The next shedload of bullshit came in Bell’s 1964 paper. It was a career-promoting Einstein-was-wrong hit piece. A hit piece that peddled Copenhagen mysticism and was as clear as mud. Bell didn’t address the physics of spin ½. Instead he gave a rambling smoke-and-mirrors mathematical “proof” which culminated in him pulling a rabbit out of a hat and making the grandiose claim that “the signal involved must propagate instantaneously”. How on Earth can anybody pretend to prove that via mere mathematics? With no experimental evidence at all? Bell’s whole paper was a barrelful of blarney from a 1960s particle physicist who hadn’t read any of the realist papers from the 1920s and 1930s. In the introduction, he gave some history and referred to Bohm and others. Then he made the claim that non-locality is characteristic of any theory which “reproduces exactly the quantum mechanical predictions”. In section II he talked about an entangled pair of spin ½ particles. He defined terms A(a, λ) and B(b, λ) to describe a ±1 Stern-Gerlach measurement on particle 1 at magnet angle a, and a similar measurement on particle 2 at magnet angle b. His equation 2 said the expectation value for such measurements was P(a, b) = ∫ dλ ρ(λ) A(a, λ) B(b, λ), where λ denoted a set, A being a result of measuring particle 1 and B being a result of measuring particle 2. That’s A for Alice and B for Bob.

Introducing an unwarranted linear relationship

Bell also said a hidden-variable expectation value should equal the quantum-mechanical singlet state expectation value ⟨σ₁·a σ₂·b⟩ = –a·b, but that “it will be shown that this is not possible”. Then in section III he said ⟨σ·a⟩ = cos θ. There’s nothing wrong with that. But there’s plenty wrong with his hidden variable in the form of “a unit vector λ, with a uniform probability distribution”. That’s a straw man hidden variable. It’s nothing like the real hidden variable. The real hidden variable is that spin ½ is a real rotation in two orthogonal directions, just like Darwin said in 1928:

Sinusoidal strip by me, GNUFDL spinor image by Slawkb, Torii by Adrian Rossiter, S-orbital from Encyclopaedia Britannica

Stern-Gerlach measurements yielding +1 and -1 just don’t do it justice, especially since a magnetic field causes Larmor precession. Those Stern-Gerlach magnets don’t “measure” spin, they just give you a pointer to it, and whilst doing so, they alter it. So that’s another hidden variable. So Bell’s section III is useless. However section IV is worse than useless, because that’s where the sleight-of-hand lies. In equation 13 Bell said A(a, λ) = –B(a, λ), which means your A and B sets of measurements will be opposite if your magnet angles are the same. Then in his equation 14, he treated a set of measurements as a simple number, and said the expectation value P(a, b) = –∫ dλ ρ(λ) A(a, λ) A(b, λ). That’s saying you can get the same results using only your Alice measurements, because they’re the opposite of your Bob measurements. That means you’re introducing an unwarranted linear relationship.

When Bell snuck in that linear relationship, he kicked a cosine under the rug

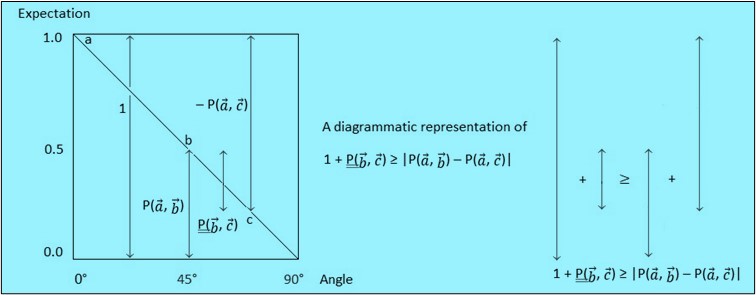

Then Bell threw in another measurement at angle c, and after a bit of jiggery-pokery came up with expression 15, which is 1 + P(b, c) ≥ |P(a, b) – P(a, c)|. That’s Bell’s inequality. As to what it means, let me draw you a picture:

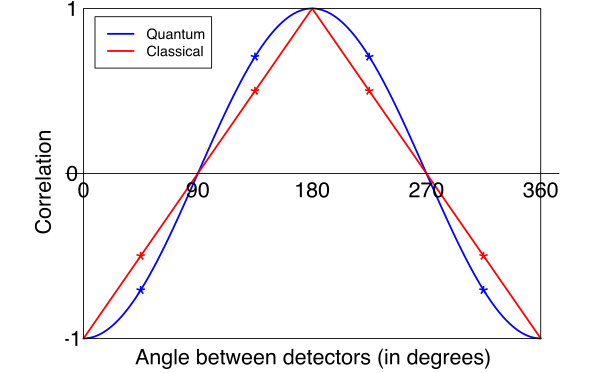

One plus your bc term is greater than or equal to your ab term plus your minus ac term, which isn’t really negative. Your expectations add up in a sensible fashion, because there is no magic. You can ignore the rest of Bell’s maths because of this statement: “Nor can the quantum mechanical correlation (3) be arbitrarily closely approximated by the form (2). The formal proof of this may be set out as follows”. It’s not important. What’s important is that when Bell snuck in that linear relationship, he kicked a cosine under the rug. That resulted in a straw man “classical prediction” that takes a linear form. Hence Bell’s inequality is usually illustrated with a picture like the one below, with a red straight-line classical prediction, and a blue curved-line quantum mechanical prediction:

CCASA image by Richard Gill, see Bell’s theorem – Wikipedia. Caption: The best possible local realist imitation (red) for the quantum correlation of two spins in the singlet state (blue), insisting on perfect anti-correlation at zero degrees, perfect correlation at 180 degrees. Many other possibilities exist for the classical correlation subject to these side conditions, but all are characterized by sharp peaks (and valleys) at 0, 180, 360 degrees, and none has more extreme values (±0.5) at 45, 135, 225, 315 degrees. These values are marked by stars in the graph, and are the values measured in a standard Bell-CHSH type experiment: QM allows ±1/√2 = 0.707, local realism predicts ±0.5 or less.

This concerns two spin ½ particles with opposite spins. It’s saying the classical prediction for the correlation at 45° is -0.5, whilst the quantum mechanical prediction is -0.707. Special thanks to Physics Forums and Richard Gill for this picture. It’s from an old version of the Wikipedia Bell’s Theorem article. Gill has written a number of papers on the subject of Bell’s theorem.
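To put numbers on the two lines in that picture, here is a minimal sketch. It assumes a toy hidden variable of the kind Bell described, a random unit vector λ, with outcomes A = sign(a·λ) and B = –sign(b·λ). The function names, the sample count and the seed are my own choices for illustration, not anything taken from Bell or Gill.

```python
import math
import random


def toy_model_correlation(theta_deg, samples=200_000, seed=1):
    """Monte Carlo estimate of the A*B average for a toy hidden variable.

    Assumption: lambda is a random direction, uniform on the sphere, with
    outcomes A = sign(a . lambda) and B = -sign(b . lambda).
    """
    rng = random.Random(seed)
    theta = math.radians(theta_deg)
    a = (1.0, 0.0, 0.0)
    b = (math.cos(theta), math.sin(theta), 0.0)
    total = 0
    for _ in range(samples):
        # A Gaussian triple points in a uniformly random direction. Its
        # length does not matter because only the sign is used.
        lam = (rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1))
        A = 1 if sum(x * y for x, y in zip(a, lam)) >= 0 else -1
        B = -1 if sum(x * y for x, y in zip(b, lam)) >= 0 else 1
        total += A * B
    return total / samples


def linear_prediction(theta_deg):
    """Straight-line correlation: -1 at 0 degrees, +1 at 180 degrees."""
    return -1.0 + 2.0 * theta_deg / 180.0


def cosine_prediction(theta_deg):
    """Curved-line correlation for opposite spins: -cos(theta)."""
    return -math.cos(math.radians(theta_deg))


if __name__ == "__main__":
    for angle in (0, 45, 90, 135, 180):
        print(angle,
              round(toy_model_correlation(angle), 3),
              round(linear_prediction(angle), 3),
              round(cosine_prediction(angle), 3))
```

At 45° the sampled toy model sits close to the straight-line value of -0.5, whilst the cosine gives about -0.707, which are the two starred values in Gill’s caption.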

In Bell’s toy model, correlations fall off linearly

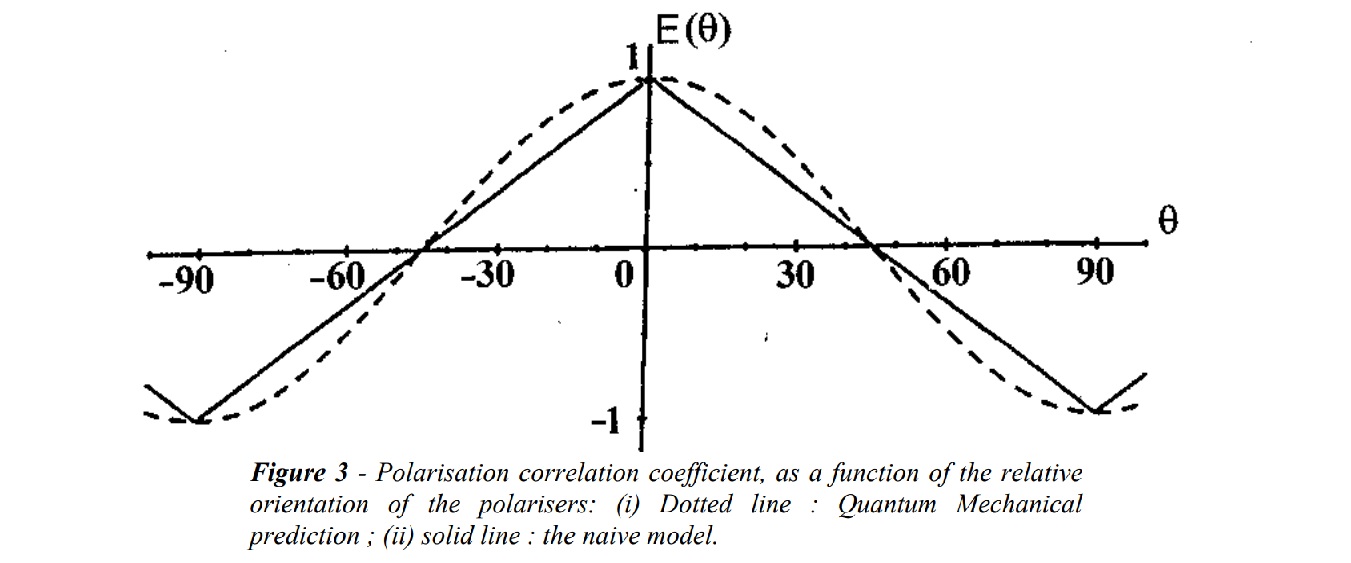

Don’t think Gill made a mistake with this. That heavyweight Stanford article I mentioned includes Abner Shimony amongst the authors, and says this: “In Bell’s toy model, correlations fall off linearly with the angle between the device axes”. In addition, you can see much the same picture in Alain Aspect’s paper Bell’s Theorem: The Naive View of an Experimentalist:

Image by Alain Aspect

This concerns two photons with the same polarization, so we don’t have the exact same curve, but it’s still cosinusoidal, and the meaning is the same. Again there’s a straight-line classical prediction, and a curved-line quantum mechanical prediction. Aspect mentioned Malus’s Law, which I’ve mentioned previously. It’s to do with optics, and it’s of crucial interest. So much so that I want to show you something from the Wikipedia Bell’s theorem article:

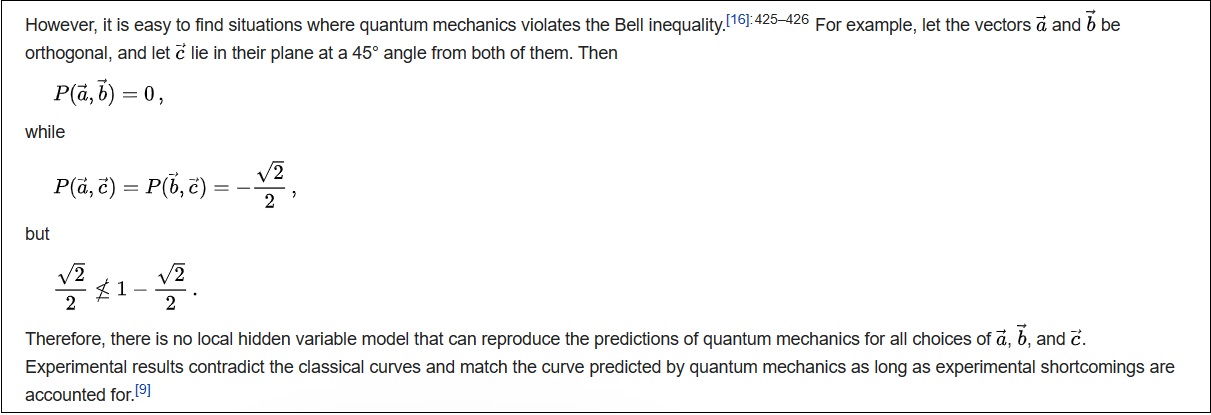

What it’s saying is vectors a and b are orthogonal, whilst vector c is at a 45° angle to both of them. P is a correlation. When you combine a and b, you get a result that’s zero. That’s cos 90°. Combine a and c, or b and c, and you get a result that’s –√2/2, which is –0.707. Note that 0.707 is cos 45°. The claim is that 0.707 is not less than 1 – 0.707 = 0.293, therefore “there is no local hidden variable model that can reproduce the predictions of quantum mechanics”. But there is something that can. Polarizing filters.
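The arithmetic in that example is easy to check. The sketch below assumes the correlation between two settings is P = –cos θ, and tests Bell’s expression 15 in the form 1 + P(b, c) ≥ |P(a, b) – P(a, c)|. The function names are mine, chosen for illustration only.

```python
import math


def singlet_correlation(angle_deg):
    """P for two settings separated by angle_deg, assuming P = -cos(angle)."""
    return -math.cos(math.radians(angle_deg))


def bell_1964_holds(p_ab, p_ac, p_bc):
    """Bell's expression 15: 1 + P(b, c) >= |P(a, b) - P(a, c)|."""
    return 1.0 + p_bc >= abs(p_ab - p_ac)


# a and b are orthogonal, c is at 45 degrees to both of them.
p_ab = singlet_correlation(90)
p_ac = singlet_correlation(45)
p_bc = singlet_correlation(45)

lhs = abs(p_ab - p_ac)  # about 0.707
rhs = 1.0 + p_bc        # about 0.293

if __name__ == "__main__":
    print(round(lhs, 3), round(rhs, 3), bell_1964_holds(p_ab, p_ac, p_bc))
    # Straight-line values for the same angles: 0 at 90 degrees, -0.5 at 45.
    print(bell_1964_holds(0.0, -0.5, -0.5))
```

With the cosine values the inequality fails, because 0.707 is not less than or equal to 0.293. With the straight-line values of 0 and -0.5 it holds with equality.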

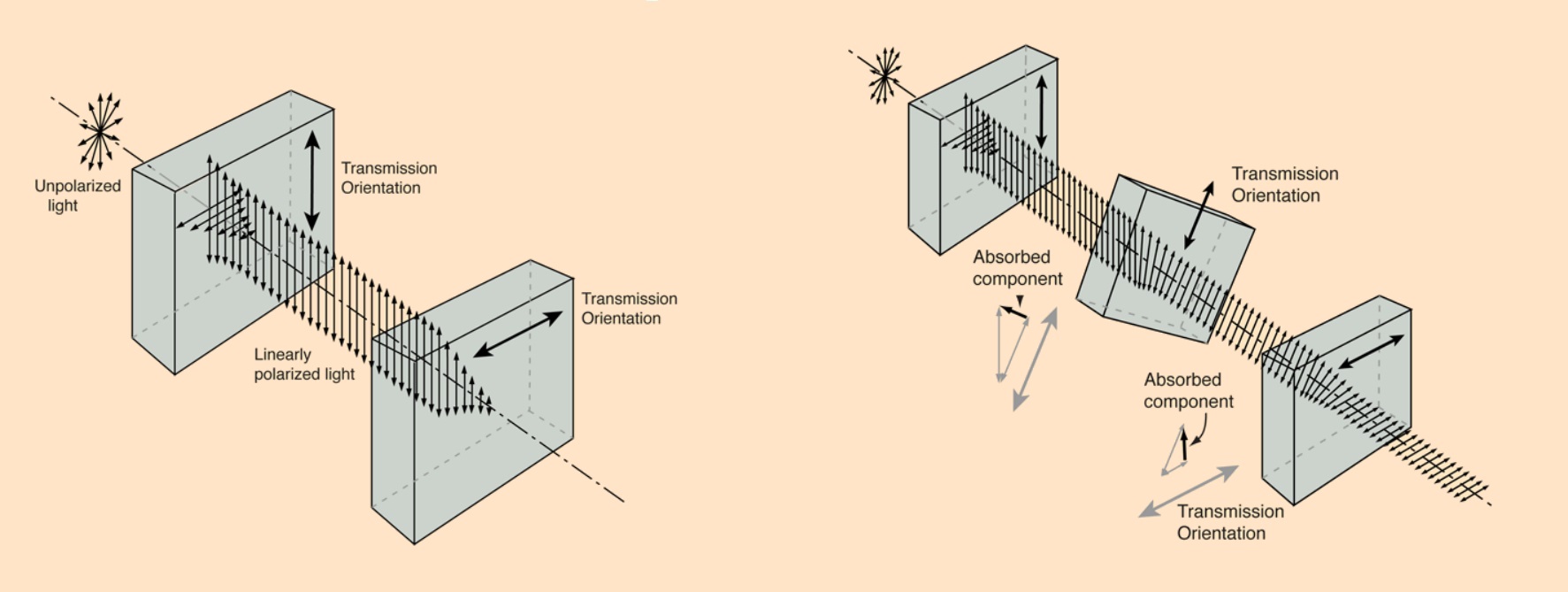

Polarizing filters

Take two polarizing filters and place them in a light beam, one orthogonal to the other. No light gets through. Then take a third filter and put it between the first two at a 45° angle. Now some of the light does get through:

Crossed polarizer images from Rod Nave’s hyperphysics

The third polarizing filter is sometimes presented as something mysterious, but that mystery is based upon a lack of understanding. Darel Rex Finley explained it well in his 2004 article Third-Polarizing-Filter Experiment Demystified – How It Works. He described how a horizontal polarizing filter doesn’t just let through a horizontally polarized light wave. It lets through the horizontal component of a light wave polarized at some angle, also rotating it so that it ends up horizontal. If the light wave is horizontally polarized, it lets it all through. If the light wave is polarized at 22.5° it lets almost all of it through whilst rotating it by 22.5°. If the light wave is polarized at 45° it lets some of it through whilst rotating it by 45°. If the light wave is polarized at 67.5° it lets less of it through whilst rotating it by 67.5°. If the light wave is polarized at 90°, it lets none of it through. Here are Finley’s depictions:

Polarization images by Darel Rex Finley, see Third-Polarizing-Filter Experiment Demystified – How It Works

There’s an adjacent-over-hypotenuse cosine function at work here. Cos 0° = 1, cos 22.5°= 0.923, cos 45° = 0.707, cos 67.5° = 0.382, cos 90° = 0. As Finley said, there are no spooky quantum properties, and there’s nothing mysterious about it. Instead it’s a straightforward chain of cause and effect yielding rational, comprehensible results. It’s closely related to Malus’s law, which gives the intensity of the transmitted light as I = I₀ cos²θ. There’s a square in there because “the transmitted intensity is proportional to the amplitude squared”. When θ is 0°, all the light gets through, when θ is 90°, none of the light gets through, when θ is 45°, half the light gets through because cos²θ is 0.707 x 0.707 = 0.5. Note that the same is true for a beam of unpolarized light going through a polarizing filter. It’s a mixture of polarizations, and half the light gets through.
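Here is a minimal sketch of Malus’s law applied to a chain of filters, including the crossed pair with a third filter slipped in at 45°. It assumes ideal filters and an unpolarized input beam, so the first filter passes half the light. The function names are mine, for illustration only.

```python
import math


def malus_fraction(theta_deg):
    """Fraction of polarized intensity passed at relative angle theta (cos^2)."""
    return math.cos(math.radians(theta_deg)) ** 2


def chain_fraction(filter_angles_deg):
    """Fraction of an unpolarized beam surviving a chain of ideal filters."""
    if not filter_angles_deg:
        return 1.0
    fraction = 0.5  # the first filter passes half of an unpolarized beam
    pairs = zip(filter_angles_deg, filter_angles_deg[1:])
    for previous, current in pairs:
        # Each later filter sees light polarized along the previous filter.
        fraction *= malus_fraction(current - previous)
    return fraction


if __name__ == "__main__":
    for angle in (0, 22.5, 45, 67.5, 90):
        print(angle, round(malus_fraction(angle), 3))
    print("crossed pair:", round(chain_fraction([0, 90]), 3))
    print("with 45 degree filter between:", round(chain_fraction([0, 45, 90]), 3))
```

Under those assumptions the crossed pair passes nothing, and the three-filter chain passes one eighth of the original beam: a half, then a half of that, then a half again.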

It describes a cosine-like curve because that’s how polarizing filters work

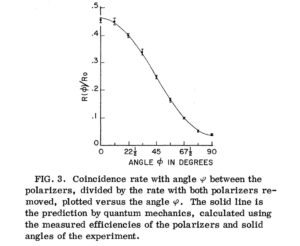

It’s similar when your polarizing filters are at opposite ends of an entanglement experiment. You emit a random assortment of photon pairs towards your polarizing filters at A and B, and on average half of them get through A. If your photon pair have the same polarization and your polarizing filters have the same alignment, then if you detect a photon at A you will detect a photon at B. If you rotate filter B round by 90° and repeat, then if you detect a photon at A you will not detect a photon at B. There’s a sliding scale between the two extremes, only it isn’t linear. It describes a cosine-like curve because that’s how polarizing filters work. When you know this, articles like Bell’s Theorem explained, Bell’s theorem and Bell’s Theorem with Easy Math seem totally lame. So does Clauser and Freedman’s experiment. Take another look at their results. Freedman’s inequality is δ = | R(22½°) / R₀ – R(67½°) / R₀ | – ¼ ≤ 0. That’s where R is the coincidence rate for two-photon detection with both polarizing filters in place, and R₀ is the coincidence rate for two-photon detection with no polarizing filters in place. The former divided by the latter is about 0.5 when the two polarizers have the same orientation and so angle ϕ is zero. This is what you’d expect, because on average half the light gets through polarizer A. It isn’t quite 0.5 because polarizers aren’t perfect:

Image from Experimental Test of Local Hidden-Variable Theories by Clauser and Freedman

Then as you rotate polarizer B, the coincidence rate drops off, following a cosine-like curve. Looking at the plot above, at 22½° it’s about 0.4, at 67½° it’s about 0.1, so δ = 0.4 – 0.1 – 0.25 = 0.05. Turning to Malus’s law, the 0.5 relates to a cos² 0° = 1. So for cos² 22.5° we have 0.923 x 0.923 = 0.852 which we divide by 2 to give 0.426. Then for cos² 67.5° we have 0.382 x 0.382 = 0.146 which we divide by 2 to give 0.073. So δ = 0.426 – 0.073 – 0.25 = 0.103. That isn’t less than or equal to zero, so Freedman’s inequality is broken. But not because of some magical mysterious spooky action at a distance. Because of the way polarizers work.
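The same sum can be run without rounding the cosines. This is a minimal sketch which assumes ideal polarizers, so that R(ϕ)/R₀ is simply half of cos²ϕ. The function names are mine, and the 0.4 and 0.1 are the values read off the plot by eye.

```python
import math


def ideal_coincidence_ratio(phi_deg):
    """R(phi)/R0 assuming ideal polarizers: half of cos^2(phi)."""
    return 0.5 * math.cos(math.radians(phi_deg)) ** 2


def freedman_delta(ratio_22_5, ratio_67_5):
    """delta = |R(22.5)/R0 - R(67.5)/R0| - 1/4. The inequality says delta <= 0."""
    return abs(ratio_22_5 - ratio_67_5) - 0.25


# Values read off the plot by eye.
delta_from_plot = freedman_delta(0.4, 0.1)

# Values from Malus's law with unrounded cosines.
delta_from_malus = freedman_delta(ideal_coincidence_ratio(22.5),
                                  ideal_coincidence_ratio(67.5))

if __name__ == "__main__":
    print(round(delta_from_plot, 3), round(delta_from_malus, 3))
```

With unrounded cosines the Malus’s law figure comes out at about 0.104, in line with the 0.103 from the three-figure values. Either way it’s greater than zero.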

Those photons weren’t entangled at all

Not only that, but Clauser and Freedman’s photons weren’t even entangled. The wavelengths were 551 nanometres and 423 nanometres. They weren’t the same wavelength because the photons were not produced at the same time. Clauser and Freedman referred to an intermediate state lifetime of 5 nanoseconds. Those photons were produced 5 nanoseconds apart. A photon travels 1.5 metres in 5 nanoseconds. By the time the second photon was emitted, the first photon was through the polarizer. Those photons weren’t entangled at all. Aspect used the same calcium cascade. So his photons weren’t entangled either. That’s why you can do a Bell-type Polarization Experiment With Pairs Of Uncorrelated Optical Photons, and get the same result. This is why Al Kracklauer was talking about Malus’s law twenty years ago. And why Dean L Mamas wrote a no-nonsense “realist” paper called Bell tests explained by classical optics without quantum entanglement. It’s brief and to the point, saying this: “The observed cosine dependence in the data is commonly attributed to quantum mechanics; however, the cosine behavior can be attributed simply to the geometry of classical optics with no need for quantum mechanics”. Quite. I found out about this because Gill wrote a paper in 2022 dissing Mamas by moving the goalposts and saying the quantum mechanical cosine is twice the classical cosine. Gill used the trick of changing both polarizer angles. We are dealing with correlations here, so it’s the difference between the polarizer angles that matters, not the difference of each from some reference angle. Cos 2θ is not equal to 2 cos θ. It’s like what Joy Christian said, the terms do not commute.

Is Spookiness Under Threat?

Note that Gill also wrote a paper dissing Joy Christian, who had the temerity to challenge spooky action at a distance in 2007. See Mark Buchanan’s New Scientist article Quantum Entanglement: Is Spookiness Under Threat? It referred to Christian’s paper Disproof of Bell’s Theorem by Clifford Algebra Valued Local Variables. After that, Christian got cancelled for challenging quantum entanglement. He was dropped by the Perimeter Institute and Oxford University, because of people like Gill, who talks about Bell denialists. Scott Aaronson used the same word when he was dissing Christian. He also said he’d “decided to impose a moratorium, on this blog, on all discussions about the validity of quantum mechanics in the microscopic realm, the reality of quantum entanglement, or the correctness of theorems such as Bell’s Theorem”. That’s not good.

Physicists Create a Wormhole Using a Quantum Computer

There’s a lot of that sort of stuff in physics. Propaganda and censorship have been par for the course for decades, along with ad-hominem abuse and de-platforming. The tofu-eating wokerati learned it from the tenured academics living a life of ease on the public purse. That’s why you can’t read about people* like Kracklauer, Mamas, and Christian in Nature, or in Quanta magazine. Instead you can read how Physicists Create a Wormhole Using a Quantum Computer. Wooo! You can also read how “the power of a quantum computer grows exponentially with each additional entangled qubit”. The problem is that quantum entanglement is bullshit, so entangled qubits are bullshit, so quantum computing is bullshit too. That’s why it still hasn’t delivered anything, and never ever will. What else would you expect when the quantum entanglement story is a fairy tale? It’s a castle in the air, built of bullshit, blarney, and bollocks, all mixed in with straw men and non-sequiturs, all held aloft by hype, hokum and hogwash. It is sophistry peddled by shysters and charlatans. It is cargo-cult pseudoscience promoted by quantum quacks who are spinning you a yarn and playing you for a sucker. The emperor has no clothes, and scientific fraud leaves a nasty taste in the mouth. Remember that the next time you read some jam-tomorrow puff-piece about quantum information science.

* It’s like finding a whole cave wall full of messages from a whole host of different Arne Saknussemms. So many people have been here before. But you only find them once you know you have to search on Entanglement and Malus’s law. Here are a few:

Disproof of Bell’s Theorem: Further Consolidations by Joy Christian, who refers to Bell’s own derivation of Malus’s law.

A Comparison of Bell’s Theorem and Malus’s Law: Action-at-a-Distance is not Required in Order to Explain Results of Bell’s Theorem Experiments by Austin J Fearnley who said “there is an enforceable duality between results of Malus’ Law experiments and the results from Bell experiments”.

Disentangling entanglement by Antony R Crofts, who said “Violation of the expectation values of Bell’s theorem have been taken to exclude all ‘local realistic’ explanations of quantum entanglement. A simple computer simulation demonstrates that Bell’s inequalities are violated by a local realistic model that depends only on a statistical implementation of the law of Malus in the measurement context”.

Entanglement: A Contrarian View by A F Kracklauer, who said “This comparison is made on the basis of Malus’ Law”.

Polarization Correlation of Entangled Photons Derived Without Using Non-local Interactions by Kurt Jung, who said “In consequence Bell’s inequalities are irrelevant”.

Analyzer Output Correlations Compared for Entangled or Non-entangled Photons by Gary Gordon, who said “Our predicted results for this non-entangled photon case are an exact match to those reported in the literature for the analysis and experimental outcomes for the entangled photon case”.

A New Model for Linear Polarizing Filter by Herb Savage, who said “This new single-photon model for a linear polarizing filter shows the same apparent violation of Bell’s theorem as current loophole-free experiments”.

Fatal Flaws in Bell’s Inequality Analyses Omitting Malus Law and Wave Physics Born Rule by Arthur S Dixon, who talked about the conscious or negligent omission of Malus’ law in the furtherance of scientific fame and fortune. His reference 43 refers to a paper called Bell’s Theorem and Einstein’s ‘Spooky Actions’ from a Simple Thought Experiment: The Physics Teacher by Fred Kuttner and Bruce Rosenblum who give a reference 11 to Malus’s law on page 128.

Rotational Invariance, Phase Relationships and the Quantum Entanglement Illusion by Caroline H Thompson, along with The tangled methods of quantum entanglement experiments. She said “They appear to be rejecting papers that endanger the accepted dogma”.

Classical Interpretation of EPR- Bell Test Photon Correlation Experiments by Thomas Smid, who said “All experiments are claimed to rule out the existence of Hidden Variables as Bell’s Inequality is violated and the coincidence rate (for initially unpolarized radiation) is observed to follow the well known Malus law”.

Experimental Counterexample to Bell’s Locality Criterion by Ghenadie N. Mardari who said “If polarization measurements are interpreted as transformations, then there is no mystery to explain”.

Visualization of quantum entanglement by Stefan Heusler, who said “Visualization of the angular dependency of the transmission probability pĤ(θ) = |⟨Ĥ|ΩPolarisation⟩|² of vertical polarized light. After many measurements, Malus law is recovered, indicated as red line”.

One of the “best” videos on YouTube about Bell’s inequality (https://youtu.be/sAXxSKifgtU) makes the same exact mistake. First it derives the inequality by assuming equal probability of test outcomes (i.e. a linear probability distribution), then it “proves” the violation introducing an angle-dependent probability (i.e. a non linear distribution)!!!

You should take a look at all the praising comments. Sometimes I wonder if it’s just me or everyone else really has gone mad.

Many thanks Leon. I’ll check that out and probably add it to the list or include it in the article.

Hi John,

Thanks again for the article, I knew I had to ask you to write about the Bell-related Nobel prizes 🙂

And Leon, I also sometimes have the feeling a lot of people have lost their mind, it seems all is upside down. And everybody is parroting the current and “majority-backed” thing without proper questioning (Covid for sure was an eye opener). It seems our world has gotten dumber, it should be the other way round!

In my work I deal with optics and lasers, so I knew something was not right. It also appears that these “scientists” hide behind their fancy math and difficult terms, and ridicule outsiders as not smart enough to understand. They also give the typical reply that it is “proven by measurements”. What they don’t realize is that the setup of the measurement is key to understanding the outcome: wrong assumptions lead to wrong conclusions. And I’m not sure how the saying goes, but if you want a miracle, you will get a miracle …. (The Large Hadron Collider comes to mind. I’ve always wondered what all those billions of euros have actually explained.)

New insights and discoveries are always done by outsiders, and these days it is increasingly difficult to swim against the stream (which I believe is going really into the drain or abyss).

Happy to have this place so I don’t lose my mind 🙂

Good stuff, Raf. As far as I know the saying is this: When a Church needs a miracle, a Church gets a miracle. Apparently it goes back to the old days of pilgrims, saints, relics, and weeping statues. I can’t say exactly where I got that from, and will have a look to see if I can dig it up. Either way, it seems to be very true of “Big Science”.

Another thing that is related to this is the delayed choice quantum eraser double slit experiment (actually it is more or less the same as Wheeler’s delayed choice). I also looked into how they did this experiment, and actually the result does not show what they conclude from it.

What the detectors at D3 and D4 show is not the typical interference pattern, and it comes from the prism-crystal they use to split the beam. This guy explains it well: https://yrayezojeqrgexue.quora.com/The-delayed-choice-quantum-eraser-The-delayed-choice-quantum-eraser-is-one-of-the-most-hyped-experiments-in-popular-sci?ch=10&oid=50697428&share=092c6fc8&srid=QT6x&target_type=post

He also mentions Sabine. To be fair, her video only dipped a little toe into the matter, and as always didn’t really explain why… Nowadays she is more interested in keeping her subscribers entertained anyway.

Many thanks Raf. I’ve always felt cynical about the delayed choice quantum eraser, but I’ve never had a proper look at it. I will now. I will watch the video and take it from there.

I actually saw Marlan Scully give a seminar on his delayed choice quantum eraser experiment.

…

and just like all QM entanglement phenomena, it sounds exciting.

It sounds like you do a double slit but record which slit, so you don’t get a pattern on the screen. Then you erase the data and magically the image on the screen is changed to an interference pattern, after it has already been recorded. Amazing!

But what really happens is you get two blurs on the screen , one from each slit, when you record which slit the photon went through. Then when you ‘erase’ the which slit data, you somehow get two different sets of data. Each of these sets corresponds to an interference pattern. However, the interference patterns are offset and their sum is equal to the two blurs you originally got.

At the end I asked, ” but clearly the image on the screen never changes”. He said, it’s just the way you analyze the data that changes.

I know I hand-waved through that, and the experiment is significantly more complex, but to me, it is not as exciting as it sounds. Unless I just don’t understand the exciting part. Everyone else seems very excited by it.

That’s interesting Doug. You know, the more I find out about this sort of thing, the more I learn that there is no substance to it. This is not good. Especially since the issue is not limited to quantum physics.

Hi Doug, interesting story, and you had the right gut feeling. Indeed nothing changes on the screen, it is how the data is processed that changes. The reason they have these offset patterns (that of course add up to the REAL pattern on the screen) is the crystal that is used, it has something to do with the structure inside the crystal. They wrongly attribute this pattern to the typical double slit result, and make it appear to be the same thing.

It is the same story as with the polarizers in Bell’s experiments, they don’t seem to understand that these physical elements in the optical path do effect the result. It seems they are blind to this…

What I also think happens is that the experimentalists probably know this, but keep it under wraps so both the theorists and the experimentalists can confirm each other and everybody is happy and can continue their taxpayer-funded work (and of course wow the general public with this “magic”, and since the public don’t understand it, the funding can go on endlessly…). They even have the guts to ask for a crazy amount of funding to build an even bigger collider, which will lead to no new understanding.

And the trouble right now is that people are getting a lot of funding for quantum information science. See this for example:

.

https://qubitreport.com/quantum-computing-business-and-industry/2021/08/06/375m-fund-marks-a-new-era-for-uk-tech-innovation-quantum-computing/

.

“The Qubit Report – Because Quantum is Coming, £375M Fund Marks a New Era for UK Tech Innovation, Quantum Computing, August 6, 2021”

.

https://www.reuters.com/article/us-usa-quantum-funding-idUSKBN25M0Y9

.

“The U.S. Department of Energy on Wednesday said it will provide $625 million over the next five years for five newly formed quantum information research hubs as it tries to keep ahead of competing nations like China on the emerging technology. The funding is part of $1.2 billion earmarked in the National Quantum Initiative Act in 2018.”

.

PS: if you want to put a dollar symbol in the comments, put a backslash \ in front of it.

The answer to all this Bullshito over spending on qubits is obvious John: all of us need to form a shell company together and start applying for grants!

And because of my brilliantly smashing expertise in Bullshito, is exactly why I should be put in charge of the marketing department.

What do you think Dr. Duffield ?

Grants are not for the likes of you and me, Greg. Only academic charlatans are permitted to live a life of ease on the public purse… for standing four-square in the way of scientific progress.

Hey I tried to post a comment, and was denied. I’ll try again.

Hey John,

I’m glad you’ve been posting more frequently. I always enjoy them.

I asked you last month if you’ve read Thomas Kuhn’s works. I’ve been recently interested in the sociology of science, and I’ve been reading as much on the matter as I can. Something important I’ve taken away from this is an understanding that science in reality is very much a sociological process. As much as we’d love to believe that science is a forum in which the best ideas rise to the top, and everyone argues for theories based on evidence and simplicity, understandability and reasonableness, and all the things we think would be obvious to judge theories on, it really seems like science is driven by many other human tendencies: pride and charisma and popularity and such all play into theory making. Any theory outside the orthodoxy is up against greater scrutiny and is held to much greater standards.

I don’t know if you have yet developed a concise, cohesive model, but with this blog you hint towards foundations you believe to be important. These all seem like very rational foundations. You seem to imply that new foundations would do away with the current mysticism found in quantum mechanics. You argue that quantum and particle physics are foundationally flawed. The new foundations for a new theory that could fix the current flawed theories in physics would supplant foundations that sit beneath behemoths of theory.

What I’m trying to say is that you’re up against a pretty huge challenge. For the physics community today to throw away a theory that has had so much development, in terms of money and man-hours spent on it, and the mountains of publications, the bar is set incredibly high. The new replacement theory would probably have to derive nearly all results and explain nearly all paradigmatic experiments in current physics. I personally can’t imagine myself, or any single person creating de novo such a comprehensive corpus of work.

I understand that none of the work that went into the creation of the current theories of modern physics came close to the rigor and comprehensiveness that would be required today. This doesn’t seem fair, but I think it actually does make sense from a sociological perspective. The beginning of quantum mechanics was riddled with vague, poorly written publications, but, to understand why this was acceptable at the time, we need to realize that quantum mechanics emerged at a very particular time, during which the theory and the practice were evolving together. This means that to be accepted into the theory making, one only had to find a way to explain one result, since there were so many new, and altogether unexplained results. Nothing had to supplant old, established theories. Maybe in many cases, old established philosophies had to be supplanted or modified, but those, the scientific community tends to forsake much more easily than explanations of practical results. Today, any work on fixing quantum mechanics or particle physics would involve not explaining new results, but tearing away foundations and rebuilding. This is a project very few people within the community are willing to work on or fund. This is a project that I think would only be accepted if, in one swift motion, the old foundations could be sufficiently torn down and replaced, leaving all, or most, current results standing. I think that this is a project that simply could not succeed piecemeal.

I guess this is all prelude to ask, what is your intent with this blog? To spark curiosity and prove to your readers that questioning the scientific orthodoxy is not only reasonable, but in this case well justified?

Do you have any aspiration to head a project to attempt to convince the scientific community as a whole that there exists a better theory?

To motivate others to go into science, and bring rationality into their field in whatever incremental ways they can?

I’m also curious how much you know about Stephen Wolfram’s project on a foundational theory? There was a very interesting time during the early stages of the pandemic where a bunch of scientists took time to pursue their own foundational theories. Stephen Wolfram and Eric Weinstein are two of the more prominent examples. I find this time to be really interesting. While neither project was entirely successful, I think this period of time shows something really interesting about the scientific community. It seems like many people harbor some feeling that there needs to be a foundational change, but it’s only worth their time to pursue when they have the free time to play around. Something else interesting I found was that the Lone Wolf approach that Weinstein took was entirely untenable. Not necessarily that his theory was bad (it was), but he was entirely unable to convince anyone else that his theory was worth anything. He couldn’t explain it, he couldn’t back it up, and it didn’t derive specific results. Wolfram’s approach was very different. He started an open source community, guided by a few foundational principles, and set the power of hobbyists to work. Personally I think Wolfram’s project will come to be seen similar to string theory: certainly interesting for the tools it develops, but definitely irrelevant to reality. Regardless he was highly successful in showing that (within a type of toy universe) there seems to be some inevitability to the structures of relativity and quantum mechanics.

Anyways, rambling aside, do you see any value in attempting a similar project to Wolfram’s? It seems you could maybe structure a program to probe your ideas’ foundations. Create some sort of basic assumptions (I’m not entirely sure what those would be, maybe that the electron is geometrical, measurement involves some frequency transform, things like that) and outline goals for derivations.

I’d love to hear your thoughts, and thanks again for all the thought provoking posts. It’s all much appreciated.

For some reason I’m unable to post. I’ll try again.

Hi Eric. Apologies for the trouble you’ve had posting. The Akismet antispam can be a pain in the arse. Email me at johnduffield at btconnect dot com for anything that’s gone adrift, and I will put it up here. As for my aims, I’m just a guy who wants to see some advancement in physics, and thence the world. As to whether this website actually helps with that, well, I hope so. When it comes to addressing all the points in your post, it’s a big job, so I will go through it and get back to you. Meanwhile the moot point is this: I hope I can shift things along a smidge. Maybe I can, and maybe I can’t, but either way, I think it’s worth trying.

Hi Eric, blogs like these, covering the history of theory development, pointing out faults, inconsistencies and misinterpretations, and providing alternative solutions (which I do agree will require a lot more exploration, but can be inspiration for future generations), are very rare. I think the comment filter is a good thing. Not to have this as an echo chamber, but to provide a meaningful discussion (based on fact, logic and understanding); other public forums suffer from this…

What John is pointing out is that the current theories are flawed and full of beliefs and assumptions (actually for me it is now very similar to organised religion…), that keep the majority stuck in a certain thinking pattern. The reason I am following the discussion closely now is that the whole particle aspect is key to overthrow with something new. I do think string theory was onto something good (because everything in essence is a vibration) but was overly complicated, untestable and so on. What the new theory needs to do is explain why an electron, proton, neutron (and all other flavors that are detected in collisions) have a certain energy content, calculate the fine structure constant… many other things that are now not explained at all by the current model. And also take away the mysteries and magic that are currently involved (the whole dark energy-matter, big bang inflation,… shenanigans are good examples). The truth will always prevail and I do believe this blog has its impact and I am grateful for all the time John puts into it.

Sorry to be slow replying, Eric, you’re in italics, I’m not.

.

I’m glad you’ve been posting more frequently. I always enjoy them.

.

Thanks. I try to do one a month, but I work for a living, and the day job has been tough of late.

.

I asked you last month if you’ve read Thomas Kuhn’s works. I’ve been recently interested in the sociology of science, and I’ve been reading as much on the matter as I can. Something important I’ve taken away from this is an understanding that science in reality is very much a sociological process. As much as we’d love to believe that science is a forum in which the best ideas rise to the top, and everyone argues for theories based on evidence and simplicity, understandability and reasonableness, and all the things we think would be obvious to judge theories on, it really seems like science is driven by many other human tendencies: pride and charisma and popularity and such all play into theory making. Any theory outside the orthodoxy is up against greater scrutiny and is held to much greater standards.

.

I’m sure science is a sociological process. However I think for physics, it’s more than just that. So much more that the field is up to its neck in corruption and dishonesty. I look at quantum entanglement, and it’s out-and-out scientific fraud, with bile directed at people who challenge the orthodoxy. I think particle physics is also troubled, with Goebbelesque lies-to-children, and the total censorship of electron papers et cetera. Meanwhile gravitational physics flatly contradicts Einstein, and if you try to point that out, you will get banned from every physics forum on the planet. Write a paper saying “the speed of light is spatially variable” and it will never see the light of day. Instead what will, is multiverse moonshine and holographic pseudoscience. The more I learn, the more I think that the situation is absolutely appalling.

.

I don’t know if you have yet developed a concise, cohesive model

.

Just an outline. See https://physicsdetective.com/the-theory-of-everything/ . It’s basically William Kingdon Clifford’s 1870 space theory of matter.

.

but with this blog you hint towards foundations you believe to be important. These all seem like very rational foundations. You seem to imply that new foundations would do away with the current mysticism found in quantum mechanics. You argue that quantum and particle physics are foundationally flawed. The new foundations for a new theory that could fix the current flawed theories in physics would supplant foundations that sit beneath behemoths of theory.

.

It isn’t quite like that. Note my strapline, courtesy of Bert Schroer: “Perhaps the past, if looked upon with care and hindsight, may teach us where we possibly took a wrong turn”. Those new foundations you’re referring to are old foundations, and I didn’t come up with them. The foundations of quantum physics were laid down by the “realists” like de Broglie, Schrödinger, Darwin, and so on. The foundations of gravitational physics were laid down by Einstein and others. All these foundations are still there, but the behemoths you mention are not built on them.

.

What I’m trying to say is that you’re up against a pretty huge challenge. For the physics community today to throw away a theory that has had so much development, in terms of money and man-hours spent on it, and the mountains of publications, the bar is set incredibly high. The new replacement theory would probably have to derive nearly all results and explain nearly all paradigmatic experiments in current physics. I personally can’t imagine myself, or any single person creating de novo such a comprehensive corpus of work.

.

I’m just doing my bit. Just spread the word Eric. There’s plenty of young physicists who can use this stuff to good advantage.

.

I understand that none of the work that went into the creation of the current theories of modern physics came close to the rigor and comprehensiveness that would be required today. This doesn’t seem fair, but I think it actually does make sense from a sociological perspective. The beginning of quantum mechanics was riddled with vague, poorly written publications, but, to understand why this was acceptable at the time, we need to realize that quantum mechanics emerged at a very particular time, during which the theory and the practice were evolving together.

.

I’m afraid I don’t agree, Eric. There are some great papers written in the 1920s and 1930s. However history is written by the victors. And in quantum mechanics, the victors were the Copenhagen school, who insisted that quantum mechanics surpasseth all human understanding. They even stole Schrödinger’s cat and used it to promote quantum weirdness. Start reading the articles here: https://physicsdetective.com/articles/historical-articles/. Let me know if links to papers no longer work, and I will fix them. (I have two issues with the old papers: 1) link rot and 2) Elsevier etc playing whack-a-mole with Sci-Hub).

.

This means that to be accepted into the theory making, one only had to find a way to explain one result, since there were so many new, and altogether unexplained results. Nothing had to supplant old, established theories. Maybe in many cases, old established philosophies had to be supplanted or modified, but those, the scientific community tends to forsake much more easily than explanations of practical results. Today, any work on fixing quantum mechanics or particle physics would involve not explaining new results, but tearing away foundations and rebuilding. This is a project very few people within the community are willing to work on or fund.

.

I fear that if they don’t, they will, in the end, be thrown to the wolves. Then there will be no funding, and no community.

.

This is a project that I think would only be accepted if, in one swift motion, the old foundations could be sufficiently torn down and replaced, leaving all, or most, current results standing. I think that this is a project that simply could not succeed piecemeal.

.

I certainly think there’s a problem. For example particle physics has dug itself into a hole with The Standard Model. How can anybody at CERN ever admit that the Higgs mechanism flatly contradicts E=mc², wherein the mass of a body is a measure of its energy content? They can’t. They’ve painted themselves into a corner with particle “discoveries”, and they can’t get out. One day I will look into that, and plot an escape route.

.

I guess this is all prelude to ask, what is your intent with this blog? To spark curiosity and prove to your readers that questioning the scientific orthodoxy is not only reasonable, but in this case well justified?

.

I’m just doing my bit to try to make the world a better place. See “the future isn’t what it used to be” for more: https://physicsdetective.com/future/

.

Do you have any aspiration to head a project to attempt to convince the scientific community as a whole that there exists a better theory?

.

No. Well, I suppose you could say I’m already heading a project. A project of one.

.

To motivate others to go into science, and bring rationality into their field in whatever incremental ways they can?

.

Yes.

.

I’m also curious how much you know about Stephen Wolfram’s project on a foundational theory? There was a very interesting time during the early stages of the pandemic where a bunch of scientists took time to pursue their own foundational theories. Stephen Wolfram and Eric Weinstein are two of the more prominent examples.

.

I took a look at it, and thought there were no foundations present.

.

I find this time to be really interesting. While neither project was entirely successful, I think this period of time shows something really interesting about the scientific community. It seems like many people harbor some feeling that there needs to be a foundational change, but it’s only worth their time to pursue when they have the free time to play around. Something else interesting I found was that the Lone Wolf approach that Weinstein took was entirely untenable. Not necessarily that his theory was bad (it was), but he was entirely unable to convince anyone else that his theory was worth anything. He couldn’t explain it, he couldn’t back it up, and it didn’t derive specific results.

.

As above.

.

Wolfram’s approach was very different. He started an open source community, guided by a few foundational principles, and set the power of hobbyists to work. Personally I think Wolfram’s project will come to be seen similar to string theory: certainly interesting for the tools it develops, but definitely irrelevant to reality. Regardless he was highly successful in showing that (within a type of toy universe) there seems to be some inevitability to the structures of relativity and quantum mechanics.

.

Noted.

.

Anyways, rambling aside, do you see any value in attempting a similar project to Wolfram’s? It seems you could maybe structure a program to probe your ideas’ foundations. Create some sort of basic assumptions (I’m not entirely sure what those would be, maybe that the electron is geometrical, measurement involves some frequency transform, things like that) and outline goals for derivations.

.

A lot of the ideas you read on this blog are ideas I’ve dug up by reading other people’s papers. There is some original content by me, but not so much. For example, the electron model is from Williamson and van der Mark’s 1996 paper Is the electron a photon with toroidal topology? See https://5p277b.n3cdn1.secureserver.net/wp-content/uploads/electron.pdf

.

I’d love to hear your thoughts, and thanks again for all the thought provoking posts. It’s all much appreciated.

.

My pleasure Eric. It’s good to talk.

.

For some reason I’m unable to post. I’ll try again.

.

Sorry. Before I got Akismet, I was getting a hundred spam comments a day, some of them pretty awful. Now the spam filter can be over-zealous at times.

“and providing alternative solutions”

I have one, so do many others. There are so many, professors won’t even consider looking at any. Hence, getting anyone with solid knowledge to review your work is difficult. Fortunately, there is a lot of solid knowledge, much of it ready to let go the current baggage, in the hobbyist community.

You may find it interesting. The links (embedded in the post) and a diagram and equation sheets here:

physicsdiscussionforum.org/graphical-representation-of-the-refraction-steerin-t2529.html

I’m currently trying to take the step away from static gravity fields to interacting ones.

physicsdiscussionforum.org/fizeau-follows-fresnel-s-fractional-formula-favori-t2558.html

“(actually for me it is now very similar to organised religion…),”

This is a common criticism, including by me. Check out my second comment under my physics article.

“The truth will always prevail…”

That is the intent of the Scientific Method. Verify your hypotheses to eke out the truth. Where most people go wrong is to claim that Science has found the truth. Or that there is only one plausible model for anything.

I’ll take a look Cedron. Apologies though, I’ve been run off my feet of late, so it might be the weekend. The job is tough, and there ain’t enough hours in the day.

Hey John,

Thanks for the long thought-out reply. Like you, I’ve been busy of late. I’ll look it over more thoroughly once I have the time tonight.

No worries on the spam filter, that’s totally understandable.

Thanks for referencing my 2019 quote on Malus & Bell:

A Comparison of Bell’s Theorem and Malus’s Law: Action-at-a-Distance is not Required in Order to Explain Results of Bell’s Theorem Experiments by Austin J Fearnley who said “there is an enforceable duality between results of Malus’ Law experiments and the results from Bell experiments”.

I have written four more papers on vixra since then and all pertain to Malus and Bell and my ideas and models have continued to change but I still hold that Malus is crucial to the Bell experiment. I feel too old to write any more papers!

I came across your posts by chance today and am pleased that not too much time has elapsed since you posted.

I noticed a duality between Bell and Malus calculations by following Susskind’s online (theoretical minimum) course. He gave a simple example of the QM calculation. It became apparent to me only much later (my being a naive amateur) that he had only proved Malus rather than Bell, as he measured at zero degrees and 45 degrees and his polarised pair were aligned with zero degrees and 180 degrees, e.g. up & down polarisations. So he already had a pre-polarised beam in the zero degrees direction. That is, he had used the equivalent of a Malus polarising filter to start off the Bell calculation. I have noticed this in some wiki proofs also. (Though I know there is also a more complicated proof used by QM).

Later, I started playing with Malus’s Law for photons and for electrons [Io cos^2(theta/2)] for a concrete example. Again, after some time and very slow realisations, I thought that I had surely seen the Malus calculation before. Not a mundane calculation but the term 0.5*(0.5-0.5/sqrt2) rang a bell in my head as exactly the one used by Susskind for Bell.
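For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes the usual cos² form of Malus's law for photons and the half-angle form quoted above for electrons; the function names are just illustrative, and reading the 0.5 prefactor as "half the pairs start out in that pre-polarised direction" is my assumption, not something taken from Susskind's lecture.

```python
import math

def malus_photon(theta_deg, i0=1.0):
    """Malus's law for photons: I = I0 * cos^2(theta)."""
    return i0 * math.cos(math.radians(theta_deg)) ** 2

def malus_electron(theta_deg, i0=1.0):
    """Half-angle form for electrons: I = I0 * cos^2(theta / 2)."""
    return i0 * math.cos(math.radians(theta_deg) / 2) ** 2

theta = 45.0
p_pass = malus_electron(theta)   # equals 0.5 + 0.5/sqrt(2)
p_block = 1.0 - p_pass           # equals 0.5 - 0.5/sqrt(2)

# The term that "rang a bell": 0.5 * (0.5 - 0.5/sqrt(2))
term = 0.5 * p_block

# Pass minus block gives cos(theta), which is 0.707 at 45 degrees
correlation = p_pass - p_block

print(f"pass={p_pass:.4f} block={p_block:.4f} term={term:.4f} r={correlation:.4f}")
```

The last number is just cos(45°), so for a beam that is already polarised along one analyser, Malus alone reproduces the 0.707 figure.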

Next, the bad news that I could not get 0.707 for a Bell experiment using Malus. In fact, using my newer gyroscopic model for electrons/photons I could only get r=0.375 ish. What you have posted about dynamic hidden variables also agrees with my gyroscopic model. The dynamic model of a particle motion throws out CFD. Why only r=0.375? Because the dynamic model is even worse (more fuzzy and with even more variation to attenuate the correlation below 0.5). (Not sure why the value is 0.375? I have not reported this value in a paper.)

Next, the good news (in my opinion at any rate) is that I get 0.707 for Bell if I use Malus with retrocausality thrown in. Retrocausality caused by antiparticles moving backwards in time.

Malus + retrocausality => Bell r=0.707

Retrocausality eschews the need, if there ever was one, for entanglement.

Gill has pointed me at useful papers on quantum cryptography and quantum computers. I am coming to the view that retrocausality can allow both to happen. But it happens, in my opinion, without entanglement but with the power of statistics to jointly manipulate unknown separate states in optical fibres.

Best

Austin Fearnley

aka ben6993

Austin: utmost apologies, your comment was in the spam folder. I’m sorry, but I can’t see why. If you have lots of hyperlinks it can end up there, or some dodgy “medical” words. It’s a shame your papers are on viXra rather than the arXiv, but not surprising. I will have a look. For now I have to say I’m not fond of the retrocausality I’m afraid. I take a very realist plain-vanilla stance when it comes to my thoughts on physics, which, it would seem, is in essence William Kingdon Clifford’s Space Theory of Matter. I plan to write about the delayed choice quantum eraser at some point. Meanwhile see https://physicsdetective.com/the-electron/ for what might be some useful hyperlinks, including the Williamson / van der Mark paper that describes the electron as a photon in a closed path. So IMHO if Malus’s law explains the experimental results for light, it will do the same for electrons and positrons, especially since the positron is a time-reversed electron. That’s what the second image in the above article is attempting to portray. Both the electron and the positron are light in a spin ½ double-loop path with two orthogonal rotations, but the positron is “going round the other way”. I reversed the animated gif to go from one to the other.

John, no apology needed. I had a second try at reCAPTCHA after the first one timed out. Maybe that caused a problem.

I have become accustomed to vixra: 15 papers there. As I am not a physics professional, it causes me no offence or embarrassment. For me it is a quick and useful service to datestamp my ideas. And I can still read papers by professionals on arxiv. I also do not think my papers would look good on arxiv. I would need to do a Gauss on my papers by removing the scaffolding to make them more professional and obscure enough to look right in that setting. Quite the opposite of what I want.

I followed your link on the electron, and Williamson, and realised that I have very recently seen a video conference this month by Vivian Robinson on the work of Williamson and van der Mark

https://www.youtube.com/watch?v=pMohlK94120

So that is very new for me. My own ideas on electron and photon structure are in my papers on my preon model where I construct all elementary particles from four preons.

Just throwing in some retrocausal speculations about Möbius closures… Joy Christian uses an S^3 model with his old analogy of skaters dancing in the reverse direction on the far side of the Möbius strip. He met some opposition from claims that he was illegitimately using εijk=1 and εijk=-1 within the same Clifford calculation, which ought only to use one trivector setting.

I am looking at this differently by letting the dancers keep their spatial metrics but reversing their time metrics with the same overall effect. This could be a time restraint constricting a particle in this loop. And if the loop is broken you could release two particles, one with a +ve time direction and one with a negative time direction. Probably into dS and AdS spaces coexisting alongside one another like rationals and irrationals coexisting on the real line. G ‘t Hooft has an interesting idea online about a particle passing through a Black Hole instantly and coming out on the far side with an opposite time direction. I speculate that the particle experienced time going into the BH but experiences an anti direction of time coming out. Times cancel, appearing to an outside observer to have no total time elapsed. So I would say that BHs do have interiors but maybe have opposite time directions on opposite sides. Very far fetched.

In my retro model for Bell, the observer of the antiparticle measurement is using that measurement as a polarising filter for a Malus experiment. The other observer measures the partner particles in a second measurement which is the analyser filter. So it is pure Malus experiment in practice, not Bell. Retro action means that the only particle polarisations within the normal flight paths of the Bell experiment are +a, -a, +b and -b. This again is a pure Malus setup and allows r=0.707. Without retro, the particle polarisations take all random values, and this limits r to 0.5. Removing retro removes the action of the polarising filter. Malus is dual in my mind to Bell, but without retro I cannot think how to make it provide r=0.707.
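One way to put numbers on that contrast is the following toy sketch, which is my own reading rather than the model from the papers. It assumes each particle carries a polarisation angle `lam`, each detector gives +1 or -1 with the half-angle Malus probability, and the two outcomes are independent once `lam` is fixed. The function names and the `steps` grid are illustrative, and the overall sign (correlation versus anti-correlation) is ignored.

```python
import math

def expected_outcome(setting, lam):
    """Mean of a +/-1 outcome with pass probability cos^2((setting - lam) / 2)."""
    p_pass = math.cos((setting - lam) / 2) ** 2
    return p_pass - (1 - p_pass)  # simplifies to cos(setting - lam)

def local_correlation(a_deg, b_deg, steps=3600):
    """Polarisations take all values: average over lam spread evenly round the circle."""
    a, b = math.radians(a_deg), math.radians(b_deg)
    total = 0.0
    for k in range(steps):
        lam = 2 * math.pi * k / steps
        total += expected_outcome(a, lam) * expected_outcome(b, lam)
    return total / steps

def malus_correlation(a_deg, b_deg):
    """Polarisation fixed along setting a, so only the second filter's Malus factor remains."""
    a, b = math.radians(a_deg), math.radians(b_deg)
    return expected_outcome(b, a)

print(local_correlation(0, 0))    # the ceiling when polarisations are random
print(local_correlation(0, 45))   # random polarisations at 45 degrees
print(malus_correlation(0, 45))   # pre-polarised (pure Malus) case at 45 degrees
```

In this toy version the random-polarisation average comes out as half of cos(a-b), so it never exceeds 0.5, while the pre-polarised case gives cos(45°), roughly 0.707.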

Austin

Austin: noted re the papers. I will take a look at them sometime. And thanks re the Vivian Robinson video: https://www.youtube.com/watch?v=pMohlK94120 . I know Vivian, he was in a “Nature of Light and Particles” discussion group I was in. I bowed out because I just didn’t have enough free time.

.

Noted re the preons. I’m not fond of them I’m afraid: https://en.wikipedia.org/wiki/Preon. I very much dislike point particles. Back in the 1920s Schrödinger and other “realists” talked about the wave nature of the electron, and described it as a wave in a closed path. But IMHO the Copenhagen school adopted Frenkel’s point-particle electron to spite the competition, and it was all downhill from there.

.

I have no issue with skaters dancing in the reverse direction on the far side of a Möbius strip. Or with reversing their time metric. That’s essentially what I did when I reversed the animation in the second image in the above post. The thing on the left depicts an electron, the thing on the right depicts a positron. The positron is a “time reversed electron”. But it isn’t travelling backwards through time. The energy flow moves through space. It’s going backwards when compared to the electron. But that energy flow is in essence a photon regardless of which way it’s going. Hence when you annihilate the electron with the positron, you usually get two photons. That’s all. There’s no evidence of any particles going back in time, and no evidence of Anti-de-Sitter space either. I looked into the history of that sort of thing in https://physicsdetective.com/a-compressed-prehistory-of-dark-energy/. As for ‘t Hooft’s idea of a particle passing through a black hole and coming out on the far side with an opposite time direction, I’m afraid I take a similar view. I take note of Einstein saying a gravitational field was a place where the speed of light is spatially variable. This is backed up by the hard scientific evidence of NIST optical clocks, which go slower when they’re lower. There is no actual thing called time being measured by such a clock. It goes slower when it’s lower because light goes slower when it’s lower. I would urge you to read https://physicsdetective.com/articles/articles-on-gravity-and-cosmology/ to avoid spending time on fruitless speculative avenues.

.

I don’t see any issue with the polarising filters giving a Malus Law result. When you have light going from left-to-right through two polarizing filters, the first filter A means you then have polarized light going into the second filter B. In the Clauser & Freedman experiment, the calcium cascade emitted polarized photons in opposite directions. One photon goes through filter A, the other goes through filter B. The first photon is just a time-reversed version of the light that went through the first filter.

.

Apologies, I have to go. I will look at your other comment when I can.

Now that I have lost belief in entanglement I see the space metric knitted from disjoint regions of εijk=1 space and εijk=-1 space.

.

But there’s no experimental support for that, Austin. I am now very sceptical of speculations which have no support.

.

antiparticles are travelling backwards in thermodynamic time.

.

I’m sorry Austin, but when you think some more about time, I am sure you will no longer believe that there’s any kind of travel through it. See https://physicsdetective.com/the-nature-of-time/

.

Also, which you will not like, is that εijk=1 and εijk=-1 space regions may need to be connected by a Rosen-Einstein bridge for two such regions to interact.

.

I don’t like it. It’s just a fantasy. I’ve referred to the Einstein-Rosen paper in the article on time I mentioned above.

.

So I am not averse to the idea of microscopic black holes on a chip. But if they exist, then they are ten-a-penny and not worth any ridiculous hype.

.

I am, because most of what you read about black holes is ridiculous hype.

.

CCC leads me to Penrose having only photons left over at a CCC node. That might fit in with photons being integral to electrons as in the Williamson et al model.

.

The important point is that Williamson and van der Mark were not the first people to come up with the idea of a wave in a closed path. Louis de Broglie had a brief paper ( https://sci-hub.tw/10.1038/112540a0 ) published in Nature in 1923. That’s where he said “the wave is tuned with the length of the closed path”. Check out the Wikipedia article on the toroidal ring model: https://en.wikipedia.org/w/index.php?title=Toroidal_ring_model.

.

But it also leads me to think that pure energy is also being over-hyped or over-used. One cannot in my opinion obtain matter from pure energy.

.

I’m afraid that’s what E=mc² is all about Austin. A photon is pure energy, because it’s a wave. Take all the energy out of a wave, and it no longer exists. But if you don’t, you can make matter out of it.

.

My preon model constructs all fundamental particles using just four preons…

.

I’m sorry Austin, I just can’t empathise with your preons. Or with gluons. Or the Higgs boson, or the W or Z boson, or weak isospin. If you read more of the articles here, you will appreciate that this is not some casual decision.

I have a few ideas which, because they do not conform to standard theory, may possibly be relevant themes for this site?

Starting with entanglement, which we already considered.

When I retired and took to learning physics again, after a 38 year break, I came to praise Caesar not to bury him. I even had my own idea from Rasch measurement theory that entanglement could be responsible for creating the space metric. And I still fully support (I have a vixra paper on this) the CCC model where the metric of space breaks down at a CCC node. Now that I have lost belief in entanglement I see the space metric knitted from disjoint regions of εijk=1 space and εijk=-1 space. Where these trivectors should not coexist locally and yet they must do for me to claim that antiparticles are travelling backwards in thermodynamic time. I also believe that these εijk=1 space and εijk=-1 space regions underpin current experimental results for Bell, quantum computing and quantum cryptography.

Also, which you will not like, is that εijk=1 and εijk=-1 space regions may need to be connected by a Rosen-Einstein bridge for two such regions to interact. So I am not averse to the idea of microscopic black holes on a chip. But if they exist, then they are ten-a-penny and not worth any ridiculous hype.

CCC leads me to Penrose having only photons left over at a CCC node. That might fit in with photons being integral to electrons as in the Williamson et al model (though not in my own preon model). Or even that everything is made from photons. But it also leads me to think that pure energy is also being over-hyped or over-used. One cannot in my opinion obtain matter from pure energy. I am against just getting any old types of matter from an input of a given quantity of energy. At a CCC node the universe has no metric and therefore can reset at a spatial point. But the CCC node universe is not made of pure energy but instead is made of photons. So that leads me to my preon model. [Note, admission that I rather like BECs and am unsure where they sit with respect to entanglement. The CCC metric loss however arises because of loss of fermions not because of BEC entanglement.]

My preon model constructs all fundamental particles using just four preons (with details in my vixra papers). I have modeled many interactions and all interactions preserve preon content. Count the preons into the interactions and they are all counted out again after the interaction. I used known decay paths set out in the particle bible. It was always possible to construct the decay paths using preons. If pure energy was sufficient, there should be no need to list a range of decay paths as anything could happen. I also was able to model leptoquark structures in terms of preons using the principle of conservation of preons in interactions.

To cope with constructing fundamental particles, especially the gluons, from preons I had to cope with superposition which is the only-slightly less-evil sister of entanglement. And equally non-existent. I coped with superposition by manipulating the three particle generations and increasing the numbers of preons per generation. That made sense as higher generations of fermions are the heavier particles, so maybe more preons are in them. This led me to making three generations of bosons: 1st photon; 2nd Z; and 3rd gluons (and higgs). If a gluon can enable interaction 1 OR interaction 2 then that gluon’s contents can be such that taking preons to enable 1 does not leave enough left over to carry out interaction 2. But if another gluon had more of the necessary preons then it would have enough to carry out BOTH interactions together. So one can build up supposed superpositions by design of gluon structure.

Another problem is that one can use field theory to explain all interactions but one cannot do this with a particle theory. So how did I manage with my preon model, which is a particle model? The problem lies with weak isospin which is not conserved in interactions, not even when an electron emits a photon. Note that weak isospin (+1/2 or -1/2) is the only quantum property of the higgs field.

To make a particle model that coped with all interactions I had to create 1/4-higgs in generation 1 and 1/2-higgs in generation 2. The normal higgs is generation 3. So the normal higgs has four times as many preons as the 1/4 higgs. I assume that the 1/4-higgs is very light in mass as it is involved with an electron emitting a photon. The preons in the higgs supply the preons for the emission of the photon rather than any particle being made from pure energy.

Another pet hate is supposedly superposed particle-antiparticles eg https://www.ox.ac.uk/news/2021-06-08-subatomic-particle-seen-changing-antiparticle-and-back-first-time

All such supposed morphs can be explained with exchanges of preons as in a normal interaction. But if I eschew superposition then how do we explain interference effects? My electron has four preons but also has 96 hexarks (turtles all the way down) and it is really ‘any integer n’ times 96. So plenty of scope there for interference effects.

I am just going through Mr Tompkins’ magical world, quickly, and I do not accept that a particle tunnels through an impossible hurdle without changing its form first in an interaction. And then back again. [Mr Tompkins is surprised to find his car outside the garage.] Is there anything left …

Austin

Great post, John ! Txs for commenting on my post and getting in touch again. I am swamped by other work, but you seem to do great bringing some sense to the ‘physics’ scene !

Merci Jean, et moi aussi. I am trying to do my bit. Somebody asked me to do an article on quantum entanglement, and I was surprised to find out that it’s just Malus’s Law. There is no substance to it.

I need to write up more properly in a paper my negative attitude to superposition with respect to gluon structure in my preon model, and also neutrino oscillation due to preon exchanges at interactions. And more generally anti the idea of particle and antiparticle inter-morphing as though it were somehow mystical. When I get the energy to do so.

I don’t think there’s anything wrong with superposition, Austin. Let’s not forget that we have hard scientific evidence for the wave nature of matter. But as for gluons and preons, I’m afraid to say there is no actual evidence for their existence. Despite what people say about gluon jets, nobody has ever seen a free gluon, and the gluons in ordinary hadrons are virtual. Nobody has ever seen particles morphing into antiparticles either. I would encourage you to spend your energy on other matters. Whilst you’re thinking about that, you might like to take a look at https://physicsdetective.com/what-the-proton-is-not/.

.

PS: I’m sorry to have been slow replying to your other posts. I’ve had to do various things this week. I will take a look now.

Detective: thanks for pointing out the issues with entanglement. I think there either needs to be a description of how you can produce the results in the Bell tests without spooky action or nonlocality or there really is something spooky like entanglement going on,…. Or it really is as simple as just a polarizer, as you suggest.

.

I think Sandra is saying it’s not as easy as saying it’s just the same as a polarizer, and idk if it really matters how the entangled photons were produced in the older papers, there have been more recent experiments with the detectors over a km apart and the results still cannot be explained without entanglement, as far as I can tell…. Unless it really is just the same as a simple polarizer, but I think there’s more to it. However, when you pointed out the similarity it made me a lot more comfortable questioning entanglement and I feel like these questions are unwelcome in some physics communities, like…. it’s been proven already, stop questioning!

.

Personally, I don’t like entanglement, spooky action, nonlocal or faster than light effects, and the curve is similar to polarization, but idk if it’s as easy as saying they are wrong, it’s just a polarizer…. I think you are pointing us to something bigger.

.

The next step would be a proof of this, and Sandra seems to believe this is impossible. If you’re good at coding it might be possible to code up a Bell experiment with Malus’s law and see if the results are the same as the Bell tests, then it would be obvious there’s nothing spooky.

.

At least that’s my two cents, I’m in two minds about it, and it’s as clear as mud right now for me. I’ll try to be more humble in my lack of understanding.

It’s a castle in the air, Doug. A myth that has grown over the years. Plenty of people will tell you how magical and mysterious entanglement is. But nobody will tell you that Clauser and Freedman’s photon pairs weren’t even entangled. Or Aspect’s. Zeilinger’s were, but see Analyzer Output Correlations Compared for Entangled or Non-entangled Photons by Gary Gordon. He said “Our predicted results for this non-entangled photon case are an exact match to those reported in the literature for the analysis and experimental outcomes for the entangled photon case”. That’s your next step, it’s already been done. But none of those authors I listed at the end of the article ever get any air time. “It’s just a polarizer” isn’t going to feature in Nature any time soon. They won’t print any electron papers either.

When I get some free time I’ll code up a simulator where you can stick in any equation you want for the off axis measurements, cos^2, linear, whatever. But I have a feeling Bell’s theorem is famous for a reason and I will always just get back the classical line unless I include entanglement. We will see.

.

Crazy papers get published all the time, I assume there would be a lot more papers talking about this if it was all baloney.

.

Looking forward to making the plots myself.
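A rough sketch of the kind of simulator described above might look like the following. It rests on assumptions that are not from the thread: each pair leaves the source with one shared polarization angle `lam`, spread uniformly over 180 degrees; each filter passes or blocks its photon independently of the other filter, with a probability given by whatever rule you plug in; and pass counts as +1, block as -1. The function names, the `linear` rule and the CHSH filter angles (0, 45, 22.5 and 67.5 degrees) are all illustrative choices.

```python
import math

def malus(delta):
    """Pass probability cos^2 for a photon polarized at angle delta
    (radians) to the filter axis."""
    return math.cos(delta) ** 2

def linear(delta):
    """Alternative rule: pass probability falls in a straight line
    from 1 at 0 degrees to 0 at 90 degrees."""
    d = abs(delta) % math.pi        # polarization repeats every 180 degrees
    d = min(d, math.pi - d)         # fold into the range 0..90 degrees
    return 1.0 - d / (math.pi / 2)

def correlation(a, b, pass_prob, steps=3600):
    """Average of (outcome at A) * (outcome at B) for filter angles a, b,
    averaged over the shared polarization lam (assumed uniform)."""
    total = 0.0
    for k in range(steps):
        lam = math.pi * (k + 0.5) / steps
        mean_a = 2.0 * pass_prob(a - lam) - 1.0   # +1 pass, -1 block
        mean_b = 2.0 * pass_prob(b - lam) - 1.0
        total += mean_a * mean_b                  # independent given lam
    return total / steps

def chsh(pass_prob, a=0.0, a2=math.pi / 4, b=math.pi / 8, b2=3 * math.pi / 8):
    """CHSH combination E(a,b) - E(a,b2) + E(a2,b) + E(a2,b2)."""
    e = lambda x, y: correlation(x, y, pass_prob)
    return e(a, b) - e(a, b2) + e(a2, b) + e(a2, b2)

if __name__ == "__main__":
    for name, rule in (("cos^2", malus), ("linear", linear)):
        print(f"{name:7s} S = {chsh(rule):.3f}")
    # Reference values: the Bell/CHSH bound is 2, and the standard
    # quantum-mechanical prediction at these angles is 2*sqrt(2).
    print(f"quantum prediction S = {2 * math.sqrt(2):.3f}")
```

Swapping in another rule is just a matter of passing a different function for `pass_prob`. To make the plots, sweep `b` with `a` held at zero and plot `correlation(0.0, b, rule)` against `b`.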

Yes, crazy papers get published all the time, like the Wormhole publicity stunt, see Woit and Nature. But if you were to write a non-crazy paper explaining why, after considered research, you thought quantum entanglement was merely Malus’s law in action, your paper would never see the light of day. Do not underestimate the strength of vested interest.

Doug: Let’s forget polarizers for a second. What we need is some property, or combinations of properties, which explains our experimental results. Our tests show that whenever we measure our entangled particles (electron spin, photon polarization, you name it) WITH THE SAME measurement type (i.e. same angle, or same spin orientation) we ALWAYS get the same result for both particles, no matter how far apart they are (So if we are talking photons, we’ll measure same polarization, if we are talking electrons we’ll measure opposite spin).

If we instead choose 2 different measurement types for each partner particle, our result will not be the same every time, but will depend on the relationship between those two measurements. Since for both electron spin and photon polarization the difference in our apparatuses is a certain ANGLE, the correlations between partners will depend on that angle. It turns out, it is a cos^2 dependence.
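To put numbers on that cos^2 dependence, here is a small sketch for the photon case only, where equal settings give equal results. It assumes the angle is the difference between the two analyzer settings, and it converts the fraction of matching results into a correlation using the usual +1/-1 convention. The function names are mine, for illustration.

```python
import math

def match_probability(angle_deg):
    """Fraction of pairs giving the same result when the two analyzers
    differ by angle_deg (photon case, cos^2 dependence)."""
    return math.cos(math.radians(angle_deg)) ** 2

def correlation_from_match(p_same):
    """Correlation with outcomes scored +1/-1: (+1)*p_same + (-1)*(1 - p_same)."""
    return 2.0 * p_same - 1.0

for angle in (0, 22.5, 45, 67.5, 90):
    p = match_probability(angle)
    print(f"{angle:5.1f} deg: same = {p:.3f}, correlation = {correlation_from_match(p):+.3f}")
```

At zero angle difference every pair matches, and at 90 degrees none do, which is the "ALWAYS the same result" case and its opposite.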

Now the big problem: how can the particles tell what setting the two apparatuses are when they are created? How can they tell “we’re going to give the same result, because both measurements are the exact same” from “the measurements are different, let’s follow a cosine rule!” before they reach their destination?

You have two options. 1) The particles don’t know anything in advance, but communicate to their partner what their measurement is at the moment of detection. This clearly implies FTL.

2) The particles agree to follow a pre-determined plan, so that they can be consistent when they finally know how they will be measured. Let’s expand 2) a bit further, taking back polarizers into consideration (just for simplicity of argument, but it can be ANY measurement type).