Check out “Something is rotten in the state of QED”. It’s a paper by Oliver Consa, who has done some excellent detective work on the history of quantum electrodynamics (QED). He has delved deep into the claims that QED is the most precise theory ever, and what he’s come up with is grim.

Consa says the much-touted precision of QED is based on measurements of the electron g-factor, but that “this value was obtained using illegitimate mathematical traps, manipulations and tricks”. I think he’s right. I think he’s discovered where the bodies are buried. I think his paper is forensic physics at its finest.

The devil’s seed had been planted

Consa sets the scene by describing how, after World War II, American physicists were regarded as heroes who could do no wrong. Such physicists were no longer harmless intellectuals; they were now “the powerful holders of the secrets of the atomic bomb”. Consa says the former members of the Manhattan Project took control of universities, and enjoyed generous state funding and unlimited credibility. He also says “the devil’s seed had been planted”. That’s because their hypotheses were automatically accepted, even where quantum field theory (QFT) was concerned. QFT had been regarded as a complete failure in the 1930s because of the problem of infinities. However, physicists swept the infinities under the rug using “renormalization”. Consa gives an analogy wherein Indian mathematician Srinivasa Ramanujan has claimed that the sum of all positive integers is not infinite, but is instead -1/12. It’s wrong, it’s absurd, but renormalization has now been accepted, and is even sold as a virtue.

Meaningless explanations

Consa rightly points out that QED comes with a long list of meaningless explanations such as the polarization of the quantum vacuum, electrons and photons interacting with their own electromagnetic fields, particles travelling back in time, the emission and reception of virtual photons, and the continuous creation and destruction of electron-positron pairs in a quantum vacuum. I share his sentiments. It pains me that people believe this garbage. See Karen Markov’s Quora answer. It’s lies to children, and pseudoscience junk.

Experimental results contradicted the Dirac equation

Consa’s paper isn’t junk. He tells us about the 1947 Shelter Island conference, where experimental results contradicted the Dirac equation. Questioning the validity of the Dirac equation meant questioning the validity of quantum mechanics. Questioning the validity of quantum mechanics meant questioning the legitimacy of the Manhattan Project heroes. Consa tells us how the conference attendees “devised a compromise solution to manage this dilemma by defining QED as the renormalized perturbation theory of the electromagnetic quantum vacuum”. But for this to work, “it was necessary to use the QED equations to calculate the experimental values of the Lamb shift and the g-factor”.

Bethe’s fudge factor

He then tells us about Bethe’s fudge factor. On the train home from the conference Hans Bethe famously came up with the Lamb shift equation, obtaining a result of 1040 MHz. His paper was on the electromagnetic shift of energy levels. Consa tells us that the values in Bethe’s equation were known physical constants, except for the value of 17.8 Ry. He also says “the origin of this value is unknown, but it is essential to obtain the desired result”, and that “Bethe’s fantastic calculation is based on data that was calculated later, data that Bethe could not have known on his train journey”. The bottom line is that it’s “a value that was entered ad hoc to match the theoretical value with the experimental value”. Now that is fighting talk. And there’s more of the same.

Schwinger’s numerology

Consa then tells us that a few months later Julian Schwinger devised an even more epic calculation to yield the g-factor of the electron. Check out the Wikipedia article on the anomalous magnetic dipole moment. Consa says Schwinger’s one-page paper was on quantum-electrodynamics and the magnetic moment of the electron. This is the paper where Schwinger said “the detailed application of the theory shows that the radiative correction corresponds to an additional magnetic moment associated with the electron spin of magnitude δμ/μ = (1/2π)e²/ħc = 0.001162. It is indeed gratifying that recently acquired experimental data confirm this prediction”. Schwinger called it a prediction even though it was obviously a postdiction. A retrofit. Consa points out that Schwinger didn’t explain how the value had been obtained, and suspects “that Schwinger did not publish the theory because he had no theory”. The suspicion is that all he had was numerology, which is why he failed miserably at the 1948 Pocono conference. As did Feynman. Then came Tomonaga, muddying the waters further. But then came Freeman Dyson to the rescue with his paper on the radiation theories of Tomonaga, Schwinger, and Feynman.
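Schwinger’s number is simply α/2π, where α = e²/ħc ≈ 1/137 is the fine-structure constant in Gaussian units. A quick Python check of that arithmetic, assuming the round value 1/137 and, for comparison, a modern value of α (both are illustrative inputs, not figures taken from Schwinger’s paper):

```python
import math

def schwinger_term(alpha: float) -> float:
    """First radiative correction to the electron's magnetic moment: alpha / (2*pi)."""
    return alpha / (2 * math.pi)

# Assumed inputs for illustration: the round value 1/137 and a modern value of alpha.
ALPHA_ROUND = 1 / 137
ALPHA_MODERN = 1 / 137.035999

print(f"alpha = 1/137:        {schwinger_term(ALPHA_ROUND):.7f}")   # rounds to 0.001162
print(f"alpha = 1/137.035999: {schwinger_term(ALPHA_MODERN):.7f}")
```

With α = 1/137 the term comes out at about 0.0011617, which rounds to the 0.001162 Schwinger quoted.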

Consa tells us that Dyson said that the Heisenberg S-matrix could be used to calculate the electron’s g-factor, transforming it into the Dyson series. It was an infinite series of powers of alpha, where each coefficient could be calculated by solving a certain number of Feynman diagrams. Consa also tells us that enthusiasm returned to the American scientific community, but that some were critical. Like Paul Dirac, who said “How then do they manage with these incorrect equations? These equations lead to infinities when one tries to solve them; these infinities ought not to be there”. And Robert Oppenheimer, who thought “that this quantum electrodynamics of Schwinger and Feynman was just another misguided attempt to patch up old ideas with fancy mathematics”. Another critic was Enrico Fermi, who said this: “There are two ways of doing calculations in theoretical physics. One way, and this is the way I prefer, is to have a clear physical picture of the process you are calculating. The other way is to have a precise and self-consistent mathematical formalism. You have neither”. Well said, Enrico.

More fudge factors

Consa then describes how in 1950, Bethe published a new calculation of the Lamb shift that adjusted his fudge factor from 17.8 Ry to 16.646 Ry. And that “other researchers, such as Kroll, Feynman, French and Weisskopf, expanded Bethe’s original equation with new fudge factors, resulting in a value of 1052 MHz”. He says this strategy of adding new factors “of diverse origin with the objective of matching the theoretical and experimental values has been widely used in QED”. The strategy is known as perturbation theory.

Kroll and Karplus delivered a theoretical result that was identical to the experimental result

Consa then talks about John Gardner and Edward Purcell, who obtained a new experimental result which yielded an electron magnetic moment of μs = (1.001146 ± 0.000012)μ0. That was in their 1949 paper A Precise Determination of the Proton Magnetic Moment in Bohr Magnetons. That meant Schwinger’s result was no longer considered accurate. But that didn’t matter to Feynman, because according to Dyson’s QED reformulation “Schwinger’s factor was only the first coefficient of the Dyson series”. The calculation of the next factor in the series was performed by Norman Kroll and Robert Karplus, two of Feynman’s assistants. Their 1950 paper was Fourth-Order Corrections in Quantum Electrodynamics and the Magnetic Moment of the Electron. They came up with a new theoretical value of 1.001147. It was almost identical to Gardner and Purcell’s experimental result. Now take a look at footnote 23 at the bottom of page 13.

Kroll and Karplus said the calculations had been performed independently by two teams of mathematicians who had obtained the same result. Consa says it wasn’t possible “to imagine that a theoretical result that was identical to the experimental result could have been achieved by chance”. And that “this was the definitive test. QED had triumphed”. He says logic had been renounced, rigorous mathematics had been dispensed with, but “there was nothing more to discuss. Feynman’s prestige dramatically increased, and he began to be mentioned as a candidate for the Nobel Prize”.
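To put “almost identical” in numbers, here is a short Python sketch that measures the gap between the Kroll and Karplus value and the Gardner and Purcell measurement in units of the quoted experimental uncertainty. It assumes the quoted ±0.000012 is one standard deviation; the helper name sigma_distance is purely illustrative.

```python
def sigma_distance(value: float, measured: float, uncertainty: float) -> float:
    """Number of quoted uncertainties separating `value` from `measured`."""
    return abs(value - measured) / uncertainty

# Values as quoted in the text, in units of the Bohr magneton mu_0.
gardner_purcell = 1.001146
gardner_purcell_err = 0.000012   # assumed to be one standard deviation
kroll_karplus = 1.001147

print(f"{sigma_distance(kroll_karplus, gardner_purcell, gardner_purcell_err):.3f} sigma")
```

The theoretical value sits about a twelfth of one quoted uncertainty from the measured one.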

The QED calculations had matched the experimental data because they were manipulated

Consa goes on to tell us that Dyson had doubts, but that his claim that the series was divergent did not diminish QED’s credibility. Nor did a 1956 paper by Sidney Liebes and Peter Franken on the Magnetic Moment of the Proton in Bohr Magnetons. This provided a very different value of 1.001165. Hence the coefficient calculated by Kroll and Karplus was wrong. Hence QED must be incorrect. And “there was no explanation for why Kroll and Karplus’s calculation provided the exact expected experimental value when that value was incorrect. It was evident that the QED calculations had matched the experimental data because they were manipulated”. Consa also says “the creators of QED refused to accept defeat. QED could not be an incorrect theory because that placed them in an indefensible situation”. Ouch. So what happened next? Andreas Petermann wrote a paper in 1958 on the Fourth order magnetic moment of the electron. Consa says Petermann found an error in the Kroll and Karplus calculations, as did Charles Sommerfield in his 1957 paper on the Magnetic Dipole Moment of the Electron. Consa also says this: “Once again, two independent calculations provided the same theoretical value. Miraculously, QED had been saved. For the third time in 10 years, experimental data had contradicted theoretical calculations”. And “for the third time in 10 years, a theoretical correction had allowed the reconciliation of the theoretical data with the experimental data”. Wince.
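For reference, the Dyson series for the anomaly is usually written a = A1(α/π) + A2(α/π)² + …, with A1 = 1/2 being Schwinger’s term. The fourth-order coefficient as corrected by Petermann and Sommerfield has a known closed form, A2 = 197/144 + π²/12 + (3/4)ζ(3) − (π²/2)ln 2. A minimal Python sketch that evaluates it and the two-term sum, assuming a modern value of α (the 1950s calculations used the values of α available then, so the last digits would differ):

```python
import math
from typing import Sequence

ALPHA = 1 / 137.035999          # assumption: modern value of the fine-structure constant
ZETA3 = 1.2020569031595942      # Apery's constant, zeta(3)

A1 = 0.5  # Schwinger's coefficient: A1 * (alpha/pi) = alpha/(2*pi)
# Fourth-order coefficient in the closed form of the Petermann/Sommerfield result.
A2 = 197 / 144 + math.pi**2 / 12 + 0.75 * ZETA3 - (math.pi**2 / 2) * math.log(2)

def anomaly(alpha: float, coeffs: Sequence[float]) -> float:
    """Truncated series sum_k coeffs[k] * (alpha/pi)**(k+1)."""
    x = alpha / math.pi
    return sum(c * x ** (k + 1) for k, c in enumerate(coeffs))

print(f"A2 = {A2:.6f}")                                   # about -0.3285
print(f"a (two terms) = {anomaly(ALPHA, [A1, A2]):.9f}")  # about 0.0011596
```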

Keeping Petermann’s theoretical value within the margin of error

But things were set to get worse. Consa tells us how a research team from the University of Michigan performed a new experiment in 1961. The relevant paper was Measurement of the g factor of free, high-energy electrons by Arthur Schupp, Robert Pidd, and Horace Crane. They said g is 2(1 + a) and a = 0.0011609 ± 0.0000024. Consa says the experiment was revolutionary in its precision, but “the authors were cautious with their results, presenting large margins of error”. Consa says in doing this they were keeping Petermann’s theoretical value within the margin of error to avoid “creating a new crisis in the development of QED”. Then in 1963 David Wilkinson and Horace Crane (again) came up with a new, improved version of the experiment. This yielded a result which matched Petermann’s theoretical calculation. Their paper was Precision measurement of the g factor of the free electron. The experimental result was a = 0.001159622 ± 0.000000027, which was nearly the same as Petermann’s theoretical value.
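Since these results are quoted as the anomaly a rather than as g itself, here is a trivial Python conversion using g = 2(1 + a) as given in the text, applied to the two Michigan values (the function name is illustrative):

```python
def g_from_anomaly(a: float) -> float:
    """Electron g-factor from the anomaly a, using g = 2(1 + a)."""
    return 2 * (1 + a)

results = [
    ("Schupp, Pidd and Crane (1961)", 0.0011609),
    ("Wilkinson and Crane (1963)", 0.001159622),
]
for label, a in results:
    print(f"{label}: a = {a}, g = {g_from_anomaly(a):.9f}")
```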

A conscious manipulation of the experimental data

Consa says “this experimental result is incredibly suspicious. It was obtained after a simple improvement of the previous experiment, and it was conducted at the same university, with the same teams, only two years later. The margin of error could not have improved so much from one experiment to another, and it is extremely strange that all the measurements from the previous experiment were outside the range of the new experimental value. Even stranger, the theoretical value fit perfectly within the experimental value. Most disturbing, this value is not correct, as was demonstrated in later experiments”. He also says “it appears to be a conscious manipulation of the experimental data with the sole objective of, once again, saving QED”. And that “after this experiment, all doubts about QED were cleared, and, in 1965, Feynman, Schwinger and Tomonaga were awarded the Nobel Prize in physics”.
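Consa’s complaint about the jump in precision can be put in numbers using only the values quoted above. A short Python sketch, assuming both quoted ± values are one-standard-deviation uncertainties:

```python
old_a, old_err = 0.0011609, 0.0000024      # Schupp, Pidd and Crane (1961)
new_a, new_err = 0.001159622, 0.000000027  # Wilkinson and Crane (1963)

shrink = old_err / new_err   # how much smaller the quoted uncertainty became
gap = abs(old_a - new_a)     # distance between the two central values

print(f"uncertainty shrank by a factor of about {shrink:.0f}")
print(f"gap = {gap:.3e}: {gap / old_err:.2f} old uncertainties, {gap / new_err:.1f} new uncertainties")
```

On these figures the 1961 central value lies about half of its own uncertainty from the 1963 value, but roughly 47 of the new, much smaller uncertainties away.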

A temporary and jerry-built structure

Consa also says the cycle was repeated a fifth time and a sixth time. It sounds like whack-a-mole to me. Theoreticians come up with a calculation that exactly matches an experiment. Then a later experiment shows that the earlier experiment wasn’t quite correct. Then the theoreticians change their calculation to match the new experiment. And so on. Consa concludes with a Feynman quote: “We have found nothing wrong with the theory of quantum electrodynamics. It is, therefore, I would say, the jewel of physics – our proudest possession”. Only it isn’t. Consa also quotes Dyson from 2006: “As one of the inventors of QED, I remember that we thought of QED in 1949 as a temporary and jerry-built structure, with mathematical inconsistencies and renormalized infinities swept under the rug. We did not expect it to last more than 10 years before some more solidly built theory would replace it. Now, 57 years have gone by and that ramshackle structure still stands”. It still stands because it’s been propped up by scientific fraud. Here we are fourteen years later, and it’s still the same, and physics is still going nowhere. How much longer can this carry on? Not much longer, because now we have the internet. Yes, Oliver, winter is coming. There may be trouble ahead.

You write “Consa gives an analogy wherein Indian mathematician Srinivasa Ramanujan has claimed that the sum of all positive integers is not infinite, but is instead -1/12. It’s wrong, it’s absurd”. I think that’s a little unfair. It depends on how you define the sum of positive integers. Ramanujan introduced “Ramanujan summation” and never claimed it gave the same answers as conventional summation. Ramanujan summation is useful and interesting! (But not for computing infinite sums in the usual sense).

Point noted, Richard. But we all know that 1+2+3=6 and 1+2+3+4=10 et cetera. See this Plus Maths article for details. The bottom line is that the sum of positive integers just isn’t minus a twelfth. So I think the analogy is pretty good, especially since the article uses the Casimir effect as an example. However, it’s a pity that it says “the vacuum isn’t empty, but seething with activity. So-called virtual particles pop in and out of existence all the time”. It isn’t true. Vacuum fluctuations aren’t the same thing as virtual particles. See Cathryn Carson’s peculiar notion of exchange forces part I and part II. Hydrogen atoms don’t twinkle, magnets don’t shine.
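The two positions in this exchange can be seen side by side numerically. The ordinary partial sums 1 + 2 + … + n grow without bound. If instead each term is damped by a factor e^(−nε) (one standard regularization, chosen here for illustration; it is not Ramanujan’s own method), the damped sum behaves like 1/ε² − 1/12 + …, so −1/12 is what remains after the divergent 1/ε² piece is removed. A minimal Python sketch:

```python
import math

def partial_sum(n: int) -> int:
    """Ordinary partial sum 1 + 2 + ... + n = n(n+1)/2, which grows without bound."""
    return n * (n + 1) // 2

def smoothed_sum_finite_part(eps: float) -> float:
    """Damped sum S(eps) = sum_{n>=1} n*exp(-n*eps) with its divergent 1/eps**2 piece removed.

    Uses the closed form S(eps) = exp(-eps) / (1 - exp(-eps))**2;
    math.expm1 keeps 1 - exp(-eps) accurate when eps is small.
    """
    denom = -math.expm1(-eps)   # equals 1 - exp(-eps)
    s = math.exp(-eps) / denom**2
    return s - 1 / eps**2

print([partial_sum(n) for n in (3, 4, 10, 100)])   # [6, 10, 55, 5050]
for eps in (0.1, 0.01):
    print(eps, smoothed_sum_finite_part(eps))       # approaches -1/12 = -0.08333...
```

The ordinary sum has no finite value; −1/12 appears only as the constant term of the regularized version.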

Hi John,

I am the author of the paper. Great job!

The amount of relevant information in the paper makes it very difficult to summarize without losing important data.

Great job yourself, Oliver! Yes, your paper was difficult to summarize. But heck, it deserves some publicity. And some time from a guy like me. The more people who say something about this on the internet, the better.

Bravo, Oliver and John! One of these days one or both of you will probably discover charlatans who were reading tea leaves, using Ouija boards, and/or phrenology. No wonder last year’s Nobel Dyn-O-Mite award in quantum physics went to a group of ASTROPHYSICISTS.

Thanks Greg. I was just reading the Nobel press release and noticed this: “The results showed us a universe in which just five per cent of its content is known, the matter which constitutes stars, planets, trees – and us. The rest, 95 per cent, is unknown dark matter and dark energy. This is a mystery and a challenge to modern physics”. I know what that is! Space is dark, it has its vacuum energy which has a mass equivalence, and there’s a lot of it about.

Yes sir, you certainly do know, and have explained it most proficiently at the highest academic level for professionals and students, and most proficiently at a much lower level for us gormless folk (LOL). Along with how gravity really works; what photons really are; what electrons really are; and many other facts and concepts that I now have a much better grasp of. By the way, your last essay on Oliver’s paper does not show up on my Physics Detective feed as a new topic; it only shows up in the comments section of the previous blog. In another week or so I will send in some more questions on current headlines. Everyone please stay healthy until then.

Thanks Greg. I’m not sure I’ve got the photon quite right, but hey, it’s a start. Sorry I’ve been under pressure at work recently, and didn’t add the last two essays to my list of articles. I’ve now done that, and put in the NEXT links. Yes, stay healthy. The situation in Italy looks grim, and it could be like that here in the UK soon. It rather focuses the mind, methinks.

Much of this is completely true (I won’t say all, but only because then I would have to check every detail). However, it is possible to fix the divergence problems of QED using the rigorous mathematical methods of 19th-century analysis (taking limits; it is intricate but quite doable). I have shown how to do so in this paper: http://www.ejtp.com/articles/ejtpv10i28p27.pdf. The extraordinary thing to me is that physicists are not interested. They would rather sweep the issues under the carpet. Probably the main part of the problem is that doing so also shows that QED is really just relativistic quantum mechanics, that there is no justification for QFT as a separate theory, that the idea that fields are fundamental is nonsense, that there is indeed no reason or justification for renormalisation, and that pretty much everything currently taught about QFT is based on the bits of QED which don’t work. It only gets worse in string theory, where they claim that it must be true that the sum of positive integers equals -1/12 because it is a physical result! (This was said in a famous Numberphile video on YouTube.)

That looks interesting Charles. I shall read that. Meanwhile can I say that it’s sad that physicists aren’t interested in how you’ve fixed the divergence problem. They aren’t interested in how gravity works either. Or what the electron is. I know too many good people who can’t get their papers published. The situation is not good, and meanwhile physics is withering on the vine. Because there can be no admission of fault, because that would be bad for physics. So they’ve been harming physics for decades. There’s a dreadful irony to it.

.

No, I think there’s no justification for QFT as a separate theory. I too think the idea that fields are fundamental is nonsense. Yes, there is indeed no justification for renormalization. In fact I now think QFT is so badly wrong it should be abandoned. We should start again, from classical electromagnetism. I’d like it if there was some material here that you found useful. I’d like to see people like you with top-notch maths working on some of the stuff I’ve dug up. Starting from the photon, then moving on to pair production, then the electron, and so on. I think there’s a necessary sequence to getting the foundations right, and that QFT doesn’t have one.

Go for it Charles !

O.K. John, time for another question, but not one based on headlines.

For quite a while I’ve been puzzled by what a neutrino actually does. How does it make its living; what is its useful purpose in the quantum world?

After scouring your past articles and other websites, including Wikipedia, all I can come up with is a consensus of what most physicists consider a neutrino to be, and what happens to it during and after annihilation. Not what it does prior to annihilation.

I did come up with a crude, basic possibility, but I am not quite ready to be laughed out of town. LOL!

As far as I know Greg, a neutrino is something like a photon. It has no mass and no charge, and it moves at the speed of light. Only whilst a photon is a transverse-wave soliton, a neutrino is a rotational wave soliton. A breather. Something like the Large amplitude moving sine-Gordon breather. As for its purpose, I don’t know. Neutrinos don’t interact with normal matter much. Not like photons do.
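The sine-Gordon breather mentioned above is a textbook exact solution of the sine-Gordon equation u_tt − u_xx + sin u = 0. As an illustration of the mathematical object only (not of any neutrino model), the Python sketch below writes down the stationary breather, u = 4 arctan[(√(1−ω²)/ω) sin(ωt) / cosh(√(1−ω²) x)] for 0 < ω < 1, and checks numerically that it satisfies the equation. The moving breather in the animation is a Lorentz-boosted version of this one; the frequency ω = 0.5 and the sample points are arbitrary choices.

```python
import math

def breather(x: float, t: float, omega: float = 0.5) -> float:
    """Stationary sine-Gordon breather, valid for 0 < omega < 1."""
    k = math.sqrt(1 - omega**2)
    return 4 * math.atan((k / omega) * math.sin(omega * t) / math.cosh(k * x))

def residual(x: float, t: float, h: float = 1e-3, omega: float = 0.5) -> float:
    """Central-difference estimate of u_tt - u_xx + sin(u); near zero for an exact solution."""
    u = breather(x, t, omega)
    u_tt = (breather(x, t + h, omega) - 2 * u + breather(x, t - h, omega)) / h**2
    u_xx = (breather(x + h, t, omega) - 2 * u + breather(x - h, t, omega)) / h**2
    return u_tt - u_xx + math.sin(u)

worst = max(abs(residual(x, t))
            for x in (-3.0, -1.0, 0.0, 0.5, 2.0)
            for t in (0.3, 1.0, 2.5, 4.0))
print(f"largest residual at sample points: {worst:.1e}")
```

The residual is limited only by the finite-difference step, which is what an exact solution should give.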

Thanks, John, for introducing me to a new concept, that of breathers. They fit nicely into my ideas; at the least, breathers don’t appear to contradict them. My rudimentary hypothesis is based on what I learned from you concerning knot zoos and knot theory. My interpretation of the Moebius strip/continuous loops of pure energy going around and around inside of photons and electrons, etc., is that in essence the knots are basic little machines: self-perpetuating engines/motors. And what do many heat- and friction-producing motors possess to help combat excessive heat/friction? BALL BEARINGS and BUSHINGS! I postulate that could be how neutrinos make their livings in the quantum world. Let the guffaws and belly laughs begin…

I think of them as the twists, Greg. I think of the photon as a transverse wave soliton propagating linearly through space at c, and I think of the electron as a 511keV photon trapped in a “trivial knot” configuration. I think of the proton as the next knot, the trefoil, which needs a more energetic photon. The neutrinos come in when you mangle an electron into a proton to make a neutron. You need a twist to keep it in there. Only if the neutron is a free neutron which is not held tight by the surrounding protons, it works itself loose like a slip knot, and the components separate. Neutron decay is the jumping popper of particle physics!

Oh, OK, so in a very basic sense neutrinos do serve as part of a twisting control process in the nuclei, and are sort of a waste byproduct of the decay process or annihilation process?

I was concentrating too much on the more basic diagrams of knots and was trying to apply my knowledge of macroscopic pioneering and sailing rope knots to the quantum world. My neutrino would have been in the dead center of the energy knot, compressed into a smaller size, helping to control the flow (waves) of pure energy. And other compressed neutrinos would sit in the outward loops as well, one for each color or flavor, say, of quarks, all at different wavelengths according to need. When let free they would merge into the one known larger traveling size; this would account for the three oscillating sizes.

I should’ve paid more attention to the diagrams and GIFs of the torus rings with waves within waves?

Still, it was great fun pretending to be a real scientist for a few days. Thanks for putting up with me, John!

LOL, always a pleasure, Greg. As for sailing, you know how a sailor pulls on the rope to control the mainsail? IMHO that’s like the way a neutron in a nucleus is held tight by the surrounding protons. As for quarks, have you read “The proton”? I think quarks are the crossing points in the knots. That’s why you’ve never seen a free quark. And never ever will. I should pay more attention to TQFT.

Time for a few questions again, John.

1.) ATLAS experiments concerning the supposed Higgs Bozo-ons supposedly decaying into beauty quarks? (There’s not enough quantum lipstick to slap onto this here beauty queen pig.)

2.) Heidelberg U. using cold atoms to construct building blocks to simulate complex physical phenomena? (More virtual computer smoke and mirrors to prove another virtual theory?)

3.) Stephen Wolfram’s radical new computational concepts to explain supersymmetry? (Is he trying to replace Einstein’s elastic space/stress-energy-momentum tensor concepts, or just modify them?)

As regards number 1), Greg, I’d say this: when a church needs a miracle, a church gets a miracle. It was similar for the W boson and the Z boson. Alexander Unzicker’s book is well worth a read, as is Gary Taubes’s article. I don’t know what to say about 2). I had a quick look and saw http://www.lithium6.de/ which looked OK. Can you give me a reference? As for 3), I read about that last week and thought it looked interesting because he was starting simple and he said energy is real. However, when I floated out an email, I got this reply:

.

Thanks for reaching out to us! We really appreciate you taking an interest in our project. As I’m sure you understand, we’re concentrating on all the work we have to do in developing our own theory, so we don’t expect to allocate time to studying alternative theories.

.

Alternative theories! When I’m the guy who’s forever quoting Einstein? Grrrr! Then there was this:

.

If you think that your work directly informs what we’re doing (e.g. maybe it gives us deeper mathematical insight into the limiting structure of the multiway causal graph, perhaps it provides us with a more precise understanding of the correspondence between our models and the holographic principle…

.

The holographic principle! At this point I thought FFS, these guys are a bunch of quacks who know nothing, and who aren’t listening. Not like Robert Close, see https://www.classicalmatter.org/. I was clearing out drawers the other day and came across his 2009 elastic space paper: https://link.springer.com/article/10.1007/s00006-010-0249-1. Now there’s a guy who deserves a trip to Stockholm.

The 2.) question about Heidelberg U. was based on an article from Phys.org, dated April 27th, 2020, John.

Concerning 1.), you have clearly stated many times that carrier particles are bogus; I basically needed your confirmation that I was reinterpreting things properly. I am just amazed by the length of time and the huge amount of money CERN keeps blowing through perpetuating their myths. What would you suggest the LHC be looking into instead?

3.) As usual, both of the links concerning Robert Close are 99.9% over my head. But I did comprehend that his research affirms the “screw nature” of the electromagnetic spectrum because of rotational waves, the wave nature of matter, and that the aether is definitely a “something” and not a “nothing”. All in line with our beloved Uncle Albert’s theorems, just with a mostly new and exciting mathematical manifold interpretation?

Thanks, Greg. The PhysOrg article didn’t say much: https://phys.org/news/2020-04-quantum-electrodynamics-major-large-scale.html. It refers to this paper: https://science.sciencemag.org/content/367/6482/1128 which is available here: https://sci-hub.tw/10.1126/science.aaz5312. I didn’t like it. They claim they’re proving gauge invariance using cold atoms, when gauge invariance was a retrofit. Electromagnetism does not work because of some mathematical symmetry or gauge invariance. They talk of electric fields and magnetic fields, not the electromagnetic field. They also talk of gauge fields and matter fields, even though the only field we’re dealing with is the electromagnetic field, either in the guise of a photon field variation or an electron standing field. They don’t know that the electron is field. There is no understanding of fundamental physics here. I’d say the experimental side of this is OK, but the theoretical side is cargo-cult mumbo-jumbo.

.

.

Yes, those messenger particles are lies to children. They do not exist. Hydrogen atoms don’t twinkle. The electron and the proton attract one another because each is a “dynamical spinor”. Not because they’re throwing photons back and forth. As for what CERN should be looking at, I’d say it’s the history. If they read the papers I’ve read, they’d know the Standard Model is wrong. Perhaps some do, but they’re in a hole. Being critical of the Standard Model is viewed as being critical of particle physics, which is viewed as being critical of physics. The situation is not good.

.

I think Robert Close is in line with more physics than people realise. What isn’t, is the Standard Model. It’s portrayed as a really successful theory, but it’s pretty much isolated from the rest of physics. When you’ve read up on classical electromagnetism, it’s amazing how different QED is. And amazingly, QED employs point particles. Even though it’s the wave nature of matter. See

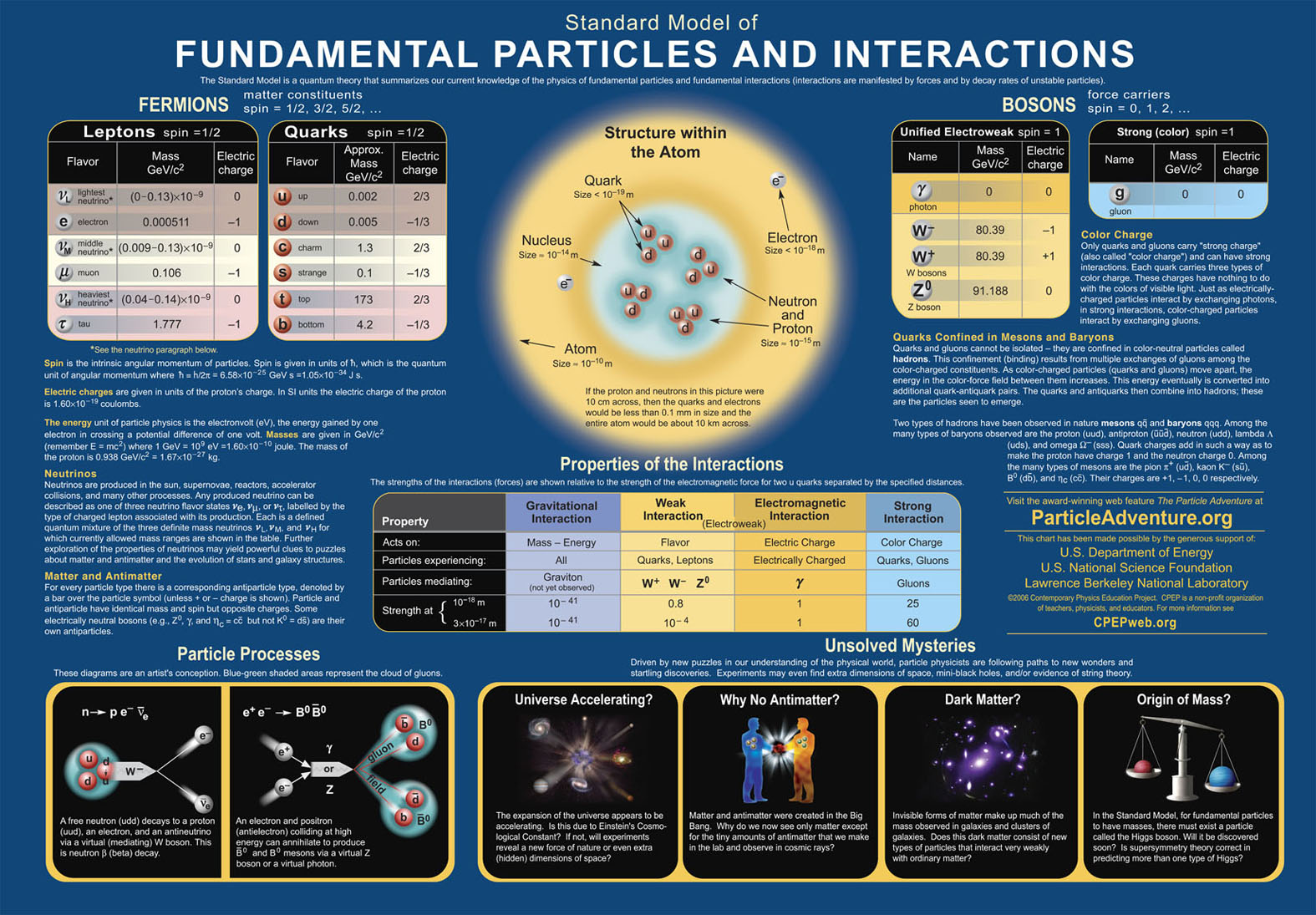

Thanks again John for the clarifications,and also the link to the really cool chart. That chart is exactly what I need to study for a few steady weeks. Quiz on Friday you say ?

That chart is yet more cargo-cult garbage, Greg. See the bottom right corner where it says the origin of mass is an unsolved mystery? That’s bollocks. Because of a certain little something called E=mc². The mass of a body is the measure of its energy content. The Standard Model flatly contradicts that. It’s another example of how the Standard Model is pretty much isolated from the rest of physics.

Oh, man, am I ever in over my head. But I am still having fun, and slowly but surely learning.

This paper was awesome, I read through it… Do you think that the global assumptions based on QED theory, like the highly oscillatory phases of water, may also contain some mistakes due to the shortcomings of the same theory?

Abhinav: I don’t know about the highly oscillatory phases of water I’m afraid. But I’d say that yes, some of the assumptions based on QED contain mistakes. For example, messenger particles are a mistake. Like I said in a comment above, see Cathryn Carson’s peculiar notion of exchange forces part I and part II:

.

https://sci-hub.st/10.1016/1355-2198(95)00021-6

https://sci-hub.st/10.1016/1355-2198(95)00003-8

.

She said the exchange-particle idea worked its way into QED from the mid-1930s, even though Heisenberg used a neutron model that was later retracted. Another mistake is the assumption that light doesn’t interact with light. It does. See this article of mine:

.

https://physicsdetective.com/how-pair-production-works/

Hey, thank you so much for responding. I take it that you’re quite good at particle physics. I have one request; I hope you could answer it…

I saw this article that makes heavy assumptions based on QED, like the formation of an energy domain or such, and it connects this with consciousness, which is super weird. I did ask a neuroscientist and he said he couldn’t make any sense of the physics part of it, because the author delves very, very deep into parts of physics which I’ve never heard of, such as Energy Quanta-Gradients and stuff like that. To me it seems pretty pseudosciencey, as it is published in a non-peer-reviewed journal. However, if you don’t mind, could you please point out some of the mistakes (if any) this author makes about QED theory? I would really, really appreciate it. I’ll link the article below:

https://www.researchgate.net/publication/344348924_Mind-Brain-Body_System's_Dynamics_Open_Access

Feel free to skip to the Tension vs Energy Domain part :) The reasoning there has nothing to do with physics, I think.

Abhinav: I took a look. The stuff about the mind seemed to be OK. But as for the stuff in Tension Domain vs Energy Domain, I didn’t recognise it as physics. It’s as if the guy is throwing in everything but the kitchen sink and making it up as he’s going along. For example he talks about QED in section 3.2:

.

The phenomena of interference between the oscillatory modalities of the energy flows and impulse involved in the perturbation/excitation of the zero point quantum field comes to be affected by coupling-phase (oscillatory resonance), which, according to QED (Quantum Electrodynamic Field theory), is able to trigger the phase transitions that lead to the exited energy-quanta and impulse gradient distribution of oscillatory motions and charge densities (referred to as EEQ-GD, that is Exited Energy Quanta-Gradients Distribution) and to the structuring of matter (Coherence Domains vs Incoherence Domains)..

.

I just don’t recognise that as physics. In fact, I’d go so far as to say it’s all smoke and mirrors and fancy-Dan big words meant to impress, and Emperor’s New Clothes. I got as far as page 29 before thinking FFS, I’m not reading any more of this crap. See this:

.

Figure 4. The human energy-tension basin of attraction, i.e. the mind-brain-body (de-localized) system, reproduces the fundamental structure characterizing the EEQ-GD, which in turn reproduces the fundamental structure of the structured tensive domain.

.

It’s horseshit.

Thank you for responding! Even I think there are huge problems with it. Does QED even state anything like Energy Quanta-Gradients and stuff like that?

Thank you for responding, I share the same thoughts. It just makes me wonder how he came up with such words if he is a physiotherapist and even expert physicists like you can’t make sense of it. Also, is there anything known as Phase Conjugate Dynamics or Tension Conjugate Dynamics? I think the Tension part fails because you told me the tension domain was sh*t, but is there really anything like that in physics?

“1947 shelter island conference” – I had to look that one up.

On Wikipedia, about the findings contradicting the Dirac equation, I read this beauty of a sentence:

“… As it was known that the Dirac theory was incomplete, the small difference was an indication that quantum electrodynamics (QED) was progressing.”

THIS, my friends, is intellectual dishonesty cranked up to the max! – it is one of the purest examples of sophistry you may ever come across…. a finding contradicts a theory, “therefore the theory is making progress”. It hardly gets any more anti-logical, anti-rational, anti-scrutiny than that. A contradiction that reinforces that which it contradicts.

If theory A suggests “x” and experiment B contradicts “x”, then A didn’t take a step forward; on the contrary, it was presented with a major stumbling block (i). Even if some scientific quack may come up with a sleazy way around such an obstacle, it is already a major win for bull-shittery that this sentence forgoes even THAT sort of in-between step, and presents the contradiction as if it were confirmation. First class BS!

This reminds me of so, so many times I saw science explainers or educators present absurd nonsense as the finest of scientific achievements. Even worse, I often find that the word “paradox” has come to mean the polar opposite of what it actually meant: rather than pointing towards a contradiction that threatens the theory (your theory says something paradoxical (see “reductio ad absurdum”)? Well: back to the drawing board, then!), it is seen as a glorious opportunity to “learn” something. It boggles the mind, or even worse…

I have the sneaking suspicion that people actually studying in this field get conditioned, by sophistry like this, to favor contradictory findings over intellectually homogeneous ones, i.e. theories that are intrinsically wonky over those which are borderline self-evident (and it paralyzes critical thinking, as they learn to mistrust their intuition of what is absurd and their instinct to search for that very thing).

Whenever I see “infinity” being treated like some meaningful thing, rather than a contradiction in itself (and an admonition that one was on the wrong track!), I see the same conditioning at work. They rejoice over mystery (nil admirari! THAT should be their motto; but, alas, it is the opposite…) and find validation in headache-inducing intellectual inhomogeneity. Their mode of thinking is strikingly similar to that of religious people, marvelling at ideas like people walking on water and healing the lame, the picture of some virgin birth and prophets talking to burning bushes, etc.: credo quia absurdum (literally “I believe because it is absurd!”).

Since you mentioned Ramanujan: the fact that he pulled off that famous sleight of hand (ii), where the sum of all positive integers came out as “-1/12”, says a thing or two about the nature of infinities themselves: they are absurd! And in a mathematical system without zeros, I’d argue, there would be no such thing as an infinity. Both fictions are two sides of the same absurd coin (but don’t bother arguing with mathematicians that “x divided by zero” equals “infinity”; it’s pointless). Whilst the invention of zero may have made possible an incredible number of discoveries, that doesn’t change the fact that it is a piece of fiction. Nature knows no “zero”s. It only knows “somethings”. And when your theory turns out some infinity and/or a singularity, it should give you a hint that something is — off [about it] . . .

i) Granted: falsification is the way forward in all things science; but it is usually the death sentence for one theory [or another] at the same time, and THAT VERY THING is the actual progress in question. The Darwinian principle at work with bad ideas/theories…

ii) I wonder if he secretly laughed at the idea, that anyone would take that little magic trick seriously…
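Since the “-1/12” keeps coming up: here is a minimal Python sketch of one standard way to see where it comes from. It uses exponential smoothing, which is an assumption on my part and not necessarily Ramanujan’s own method. The ordinary partial sums 1 + 2 + … + n just keep growing. If you instead damp each term by e^(-nε), the sum becomes finite and equals 1/ε² - 1/12 plus terms that vanish as ε goes to 0. The -1/12 only appears once the divergent 1/ε² piece is thrown away by hand, which is exactly the step objected to above. The function names are purely illustrative.

```python
import math


def partial_sum(n: int) -> int:
    """Ordinary partial sum 1 + 2 + ... + n = n(n+1)/2; grows without bound."""
    return n * (n + 1) // 2


def smoothed_sum(eps: float) -> float:
    """Damped sum  sum_{n>=1} n * exp(-n*eps), via the closed form x / (1 - x)^2, x = exp(-eps)."""
    x = math.exp(-eps)
    one_minus_x = -math.expm1(-eps)  # 1 - exp(-eps) without cancellation error
    return x / one_minus_x ** 2


def finite_part(eps: float) -> float:
    """What is left after subtracting the divergent 1/eps^2 piece by hand."""
    return smoothed_sum(eps) - 1.0 / eps ** 2


for n in (10, 1_000, 1_000_000):
    print(f"1 + 2 + ... + {n} = {partial_sum(n)}")

for eps in (0.1, 0.01, 0.001):
    print(f"eps = {eps}: damped sum = {smoothed_sum(eps):.4f}, minus 1/eps^2 = {finite_part(eps):.8f}")

print(f"-1/12 = {-1 / 12:.8f}")
```

The damped sum itself blows up as ε shrinks. Only the leftover after the subtraction settles near -1/12.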

I share your sentiments, praetorian. There’s a whole load of garbage being touted and spouted by people who ought to be serious scientists, but instead are f*cking charlatans. I now think Alexander Unzicker was right, and we should cut physics funding. That’s because we’re funding people who are actively getting in the way of scientific progress. Darn, the wife is calling, I’ve got to go. Time for a glass of Malbec! I’ll get back to you properly tomorrow.

Praetorian: I am not a fan of Dirac. Not at all. He was around when de Broglie, Schrodinger, Darwin, and Born and Infeld were coming up with realistic electron models. But he sided with the Copenhagen quacks who “won the argument” and promoted shut-up-and-calculate and quantum physics surpasseth all understanding. Those guys screwed up physics for a hundred years. Dirac was still saying the electron was a point-particle in 1938. Even though there was a wealth of evidence for the wave nature of matter. The guy was a total charlatan. That intellectual dishonesty is worse than you think. You only realise it when you read the old papers. See for example Born and Infeld’s 1935 paper on the quantization of the new field theory II. On page 12 they said this: “the inner angular momentum plays evidently a similar role to the spin in the usual theory of the electron. But it has some great advantages: it is an integral of the motion and has a real physical meaning as a property of the electromagnetic field, whereas the spin is defined as an angular momentum of an extensionless point, a rather mystical assumption”. On page 17 they said this: “we think that the value of Dirac’s theory lies more in mathematical advantages than in its physical significance. It is well known that other systems with spin ½ do not obey Dirac’s laws; even the simplest one, the proton, has properties contradicting the consequences of Dirac’s equation”. And this: “the rest-mass occurring in our theory is not, as in Dirac’s, an absolute constant of the system but the total internal energy, depending on rotation and internal motion of the parts of the system. An external field will influence not only the translational motion, but also these internal motions”. On page 23 they said this: “in the classical theory we got the result S = D x B = E x H”. They’re talking about the Poynting vector there, a wave in a closed path. Dirac MUST have known about all this.


I don’t think people actually studying in this field get conditioned by sophistry like this. I think they paint themselves into a corner and dig themselves into a hole with their own bullshit and lies. You need to read Unzicker’s book The Higgs Fake. The big takeaway is that particle physics has form. Just about all the “discoveries” since the sixties have been faked. Again, the situation is worse than you think. They rejoice about mystery because that’s something they can sell. Quantum Mysticism. Snake Oil. Woo. Yes, the mode of thinking is strikingly similar to that of religious people. In days of old we had fat bishops peddling twaddle and living a life of ease. Now the people who occupy the same socio-ecological niche are called physicists. Only they aren’t physicists. They’re imposters.


Yes, when your theory results in some infinity or singularity, or some paradox, it should give you a hint that something is wrong. But people like Penrose just keep on going like the Duracell bunny, pumping out nonsense like wormholes and time travel and a black hole being some kind of gateway to the parallel antiverse. Yes, falsification is vital for science, which is why the charlatans have been trying to come up with “theories” that can’t be falsified. The more I’ve learned about physics, the more I’ve realised how corrupt it’s become. The $64,000 question is: how do you fix it?

How to fix it?

That’s actually quite “simple”, yet hard to achieve on a political, sociological and economic level (and for matters of psychology, too), as systems that “work” (even where they are built on falsehood and produce nothing but misconceptions and lies) tend to be self-sustaining: [more or less] all parties involved have a vested interest in keeping things going as they are, as one hand washes the other and vice versa…

So how do you do it?

Drain the swamp! Defund science! (I think I’ve read something like that on your page as well?) Let the leeches starve!

Only then, could something better grow in their stead…

When Schopenhauer was frustrated by the mass appeal that the charismatic dazzler and babbler Hegel received (a philosopher with an anti-intuitive AND (!) anti-rational mode of thinking that is very reminiscent of the modern scientists you so vehemently oppose; one could even call him their spiritual predecessor!), and by the little popularity he himself garnered, it soured him on university philosophy as a whole: he turned his back on it and began to see it as an existential problem. He came to doubt whether those “Universitätsphilosophen” (university philosophers, whom he deemed outright antithetical to actual philosophers) would ever produce anything of value for the advancement of understanding [of nature] and for humanity as a whole.

Similar things could be said about the opposition of modern academia vs. the intrinsically breadless “profession” of a true scientist (or not even a “profession”? Rather an arduous calling full of privation?); or maybe “nature philosopher”? – we do need a “new” name for it, after all, since the other one has been stained by those leeches, who claim for themselves to be their true incarnation…

Similar things could also be said about modern art.

The thing is that any institution attracts greed and lowly creatures who are all about the advancement of their own fame, popularity and monetary gain. The more money you pour into the system, the more corrupted it may become.

It is enough these days to be a good babbler, in order to sell your “art” as “art”. Paint a black stripe on a white canvas and “sell it”, ostentatiously, to the gullible crowd as something magnificent, and esp. the rich “connoisseur” and “patron of art”; you know: just rich folks, idiotically rich numbskulls who don’t know what to do with their filthy huge riches: “Just invest the surplus into art; if nothing else, it’s a good way to dodge taxes!”

But that, ultimately, corrupts art.

It brings to the foreground those who talk big and lofty about their craft, who know how to sell themselves and their “works”, and drowns out the silent genius who actually IS all about his craft.

I see huge parallels between this modern blight and those other two.

Babblers are all the rage; the meticulous talents however are pushed aside.

Too many young people attending university is also a problem.

The really important stuff, true science (and philosophy… and art…) can [and will] be done by only a small flock, the happy few; that is, highly intelligent, deep minds, paired with an irreplaceable Tatsachensinn (sense of facts; rational mindset) to sort the good from the bad, while the many can only ever flatten and stupefy deep insights (even turn them into religious dogma at times).

There is, however, a hardheaded prejudice in the way of any [hope for] change in this matter: the preconceived notion of “progress” [of humanity and of science]. That is, that all the technological advances humanity has made were somehow elevating it to a higher form of being, that we understand more and more each generation (and do not share in previously widespread misconceptions, superstitions and immoralities), that humanity is more civilized and intelligent than it has ever been before! Frankly, that prejudice is stupid; it may not only be wrong, but – even worse – the very opposite of what is happening.

As I see it, our technological advances make humanity dumber (and blunted!), not more intelligent, as “the many” can rely more and more on those technologies (something that was true long before smartphones and google-search!), become reliant and even dependent on it, with all their being.

Any criticism of modernity as we see it today will face double the backlash because of this. On the one hand, the stupefied masses will readily oppose it, happily flocking to fake authorities (professors of whatever field; academics; “Halbgötter in Weiß” (“demigods in white”); all in all: appeal to authority is easily among the most detrimental commonplaces there are! Just compare Covid19 scaremongering…) — the more [the stupider] the merrier; like zeroes tied to a random number, they exponentiate their power.

On the other hand, it’s the stupidity of the individual people themselves, the ones you try to convince, and their relative lack of understanding; it surely doesn’t help that modern science is so fragmented that hardly anyone (no one?) can grasp all those fields and keep an overview and understanding of it all. So, even if someone were to come along who manages this feat better than anyone else, he’d still have to have his insights catch on with at least some people, the right people, with the right mindset AND the right access to make a change. But the opportunistic academics will stand in his way; they will have each other’s backs with a common goal in mind: to further their own progress and secure their own “sinecures” of science by holding the line together. As if it were a war, and they were a hoplite phalanx that must not break, a phalanx of “science” itself … but actually just of their own selfish interests!

So… how do you make a difference against those vested interests, to drain the swamp?

Quaeritur…

One needs allies, that’s for sure…

– – – – –

Socrates: “[…] but both our ancestors, and us, and our posterity are all quite equal, and I think that the spectacle of the world would be very boring for someone who saw it from a certain perspective; because it’s always the same thing.”

Montaigne: “I would have thought that everything is in movement, that everything changes, and that different centuries have different characters just like men do. For, doesn’t one find knowledgeable centuries, and others that are ignorant? Aren’t some naive and others more refined? Aren’t some serious, and others playful, some polite and others vulgar?”

Socrates: “That’s nothing. Clothes change; but that doesn’t mean that the body changes too. Politeness or vulgarity, knowledge or ignorance, more or less of a certain naivety, a serious or playful mentality, are all only the outside of man, and all of them change; but the heart does not change at all, and all of man is in his heart. One may be ignorant in this century, but trends change and learned people come along; someone has self-interest, but the trend for being disinterested will never come. Out of the prodigious number of unreasonable people who are born in a hundred years, nature makes maybe two or three dozen reasonable ones that are spread over the earth, and judge for yourself whether that is enough to make a trend of virtue and right action.”

Montaigne: “This allotment of reasonable men, need it be spread out equally? Isn’t it very possible, that some centuries have more, while others have less?”

Socrates: “There may be, at best, hardly noticeable fluctuations. Nature’s makeup as a whole is rather even.”

(B. Fontenelle, “Nouveaux Dialogues des Morts”, III.; … I tried to find an English translation, but as I didn’t like the wording at times, I adjusted it to my understanding; not to the French original, as I do not speak French, but to a German translation I have.)

If anything, in the age of 8 billion people, nature has an even harder time than ever to seed enough of those happy few among them to make a difference. The age of the masses is the age of the demagogues and frauds; they drown out the more nuanced voices, and find lots of material and gullible sheep to further their narcissistic agendas…

Philosophy and science (“nature philosophy”) will always be a calling and a breadless profession, more so than a fruitful business. It only gets worse when that lofty name is monopolized by said academic frauds. One’s gotta be divorced from the expectation to “make a living off of it” when approaching the secrets of nature… otherwise the inevitable compromises can only corrupt one’s theories and findings.

– – – – –

PS: I’d really like to have a face-to-face talk with you about this, tbh.

I am kind of at an impasse [right now] in my endeavor to catch up with modern physics; esp. the imbalanced accent on “everything math” gives me a headache at times (i); it seems like the geeks, rather than geniuses, have taken over the entire field, to redefine, what “insight”/”understanding”/”truth”/”fact” even MEANS…

Albert Einstein was a monster NOT JUST of critical thought and intelligence and rational intuition, but ALSO of I-MA-GI-NA-TION, of “Vorstellungsvermögen”[!] … something these barren “specialists” and scientific epigones most obviously lack…

Yet, THEY ARE the “gold-standard” of science these days, the gravity of scientific accolade; like a black hole of collective understanding…

i) as if deriving entire theories from mathematical formulas [alone] wasn’t just doubling down on preconceived “understandings” of the matter; no formula is ever any better [, any more understanding/insightful] than the perspective which cooked it up in the first place [or than the individual intellect who did that!]; math is NOT a universal “language” of science and nature! It is not an untainted truism beyond the limits of subjectivity and misunderstanding! It is just formalized speech! It is in no way deeper and purer, or a more direct avenue to essential truth (facts of nature), than “formal logic” (ii) was a reformation of irrational patterns of everyday perception and judgement!

ii) i.e. a certain preoccupation of some academic philosophers; a formulaic approach to “logic”. In my eyes: the training-wheels of rational thought, i.e. the wrong approach to actually reform an irrational mind. A purely academic excursion/pursuit…

CG: I have read every inch of your comment. I agree with what you are saying.


I think people are rather strange. So much so that I sometimes wonder just how rational they are. They have a very bad habit of holding some convictional belief in things for which there is no evidence, and then dismissing any evidence that poses a challenge to that belief. This trait is IMHO particularly noticeable when they have been subject to “catch ‘em young” indoctrination as children. They grow up believing in something with total conviction, and it is then very difficult to persuade them to examine that conviction. Scientists are not immune to this.


On top of that, it would seem that people are very good at believing in those things that benefit themselves. They readily become what we label “vested interest”, and will jump through tortuous hoops to defend the thing that rewards them.


On top of that, I would say that some people exhibit another trait: they like to tell other people what to do. So for example we have Christian bishops living in palaces, telling the poor to surrender their wealth to the Church and turn the other cheek, whilst the bishop himself does nothing of the sort. And it’s not just bishops. We have princes telling people to live in hair-shirt eco-poverty whilst they themselves fly 200,000 miles a year in a private jet, spend a million pounds a year on helicopter flights, and never do a day’s work in their life. We also have eco-warriors gluing themselves to roads as part of their “stop oil” protests, and yet they still travel by car and take three foreign holidays a year. These control freaks just love telling other people what to do, and are utterly convictional and hypocritical about their cause. And misguided too, because they never consider the role of population growth when it comes to things like deforestation, desertification, and habitat loss. They always say it’s caused by climate change, when often it is not. They seem to be incapable of rational thought. We see something similar with “wokery”, which I think is absolutely astonishing. Particularly when it comes to trans matters. One hears quite bizarre stories regarding so-called “woman” committing a serious offence against a genuine woman, only the NHS say there could have been no such offence because only women were present on the ward. There are similar issues when it comes to things like race, wherein racism is fine and dandy provided it is directed towards caucasians, and All Lives Matter is somehow racist.


Academia exhibits all these traits. Some well-paid professor will believe in some nonsense, and will brook no challenge. Put your hand up in a lecture and suggest that he is wrong, and he will go white round the gills. These guys see themselves as the font of all wisdom, and are so full of hubris it’s just not true. They will happily tell lies to children as they pimp their latest unsupported speculations to a gullible popular press. They do this for year after year, hiding behind mathematical smoke and mirrors, and digging themselves ever deeper into a hole that damns physics and turns it into pseudoscience. They will never admit the truth, hence science advances one funeral at a time. It was of course Max Planck who said this, so this does not apply to all physicists. But I would say it applies to all senior physicists in particle physics today. The only way to stop them is to expose the scientific fraud of bump-on-a-graph “discoveries” and stop their funding. Stop paying these people to spout nonsense and get in the way of scientific progress. Tell the public what a racket the journal system is. That’s essentially what Alexander Unzicker said in The Higgs Fake. The more I learn the more I think he’s right.

Hey, I don’t understand, are you for or against Oliver’s paper?

Hi, just one last question, I’m sorry if I’m disturbing you. He uses this same “Mind Body Dynamics” thing to explain NDEs (near-death experiences). I’m interested in learning what happens during these NDEs, but he has tried to explain them using his phase conjugate dynamics and spin conjugate dynamics, which are based on this model.

https://www.researchgate.net/publication/328738002_Near_Death_Experiences_Falling_Down_a_Very_Deep_Well

This is his article. It’s very long, so you can just read “NDS’S Out of body experiences” and tell me if his physics makes any sense to you (only if you have the time).

I sent this to the famous neuroscientist and NDE researcher Bruce Greyson and he said that he couldn’t make any sense of what the author was saying. Also, regarding the EM sensory module, Greyson pointed out there was no such thing.

As much as I am interested in learning about NDEs, I don’t think this is a way to explain them…..

I don’t think what he’s saying makes ANY sense whatsoever but could you read that paragraph and tell me? I would really appreciate it, also thank you for taking your time to read and answer, I’m very grateful

I downloaded the 80-page PDF and searched on Out of body experience, then read half a dozen pages starting from page 37. The physics is claptrap. Note that I’m not saying out-of-body experiences are claptrap. I do think there are things we don’t understand, and we shouldn’t dismiss something just because we think it’s impossible. But this guy is pretending to understand, with nonsense physics that he’s making up as he goes along. No more, please!

I’m very sorry for disturbing you, I was just confused. Also, I’m very, very thankful that someone who is actually an expert in physics responded. THANK YOU VERY VERY MUCH!!!

My pleasure, Abhinav. Maybe you should take a look at Rupert Sheldrake’s stuff. He wrote The sense of being stared at. Many’s the time that I’ve admired some woman in the street, and she’s turned round and caught me staring at her… assets. LOL!

Lmfao, kinda described what happens with my crush, eh. Also, you’re sure that the guy’s article I sent you is BS, right? I mean, does QED even state anything like that lol

Yep, it’s BS. The guy is totally winging it.

Do you think these mistakes will affect the QFT theories like quantum field vacuum?? Or zero point field?

Joshua: yes. See Svend Rugh and Henrik Zinkernagel’s 2002 paper on the quantum vacuum and the cosmological constant problem. They kindly remind us that photons don’t scatter off the vacuum fluctuations of QED. They say that if they did, “astronomy based on the observation of electromagnetic light from distant astrophysical objects would be impossible”. Hence the QED vacuum energy concept is “an artefact of the formalism with no physical existence independent of material systems”.

So you’re saying that it’s impossible for such a field to exist?? Also, I saw a comment on messenger particles. Do you mean these particles don’t exist?? Or that they don’t do what we assumed them to do??

Also, one of the comments mentioned something about water. QED theory is used in this:

https://www.researchgate.net/publication/279066458_Illuminating_water_and_life_Emilio_Del_Giudice

Will the messenger-particle flaw disrupt this assumption?

Joshua: I’d say the zero-point field doesn’t exist the way it’s described. See the Wikipedia zero point energy article and note this: The idea that “empty” space can have an intrinsic energy associated to it, and that there is no such thing as a “true vacuum” is seemingly unintuitive. I don’t think that’s unintuitive. I think it’s intuitive, because I think space and energy are the same thing. However see this: According to quantum field theory, the universe can be thought of not as isolated particles but continuous fluctuating fields: matter fields, whose quanta are fermions (i.e., leptons and quarks), and force fields, whose quanta are bosons (e.g., photons and gluons). All these fields have zero-point energy. I think that’s wrong, because I think a field is a state of space, and a fermion is just a boson in a closed path.
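To make “all these fields have zero-point energy” concrete, here is a minimal Python sketch of the textbook naive calculation, for the free electromagnetic field only. It gives every mode ½ħω, counts two polarizations, and adds up all modes with wavenumber below some cutoff k_max. The result, ρ = ħc·k_max⁴/(8π²), has no natural stopping point: it grows as the fourth power of whatever cutoff you choose, which is the root of the cosmological constant problem that Rugh and Zinkernagel discuss. The cutoff wavelengths are illustrative assumptions, not values from the article.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J*s
C = 299_792_458.0       # speed of light, m/s


def zero_point_density(k_max: float) -> float:
    """Naive zero-point energy density (J/m^3) of the free EM field:
    (1/2)*hbar*c*k per mode, 2 polarizations, modes with k <= k_max,
    mode density k^2 dk / (2*pi^2). Closed form: hbar*c*k_max^4 / (8*pi^2)."""
    return HBAR * C * k_max ** 4 / (8 * math.pi ** 2)


def zero_point_density_numeric(k_max: float, steps: int = 10_000) -> float:
    """Same quantity by a midpoint Riemann sum over k, as a cross-check."""
    dk = k_max / steps
    total = 0.0
    for i in range(steps):
        k = (i + 0.5) * dk
        energy_per_k = 2 * 0.5 * HBAR * C * k          # two polarizations, (1/2)*hbar*omega each
        modes_per_volume = k ** 2 / (2 * math.pi ** 2) * dk
        total += energy_per_k * modes_per_volume
    return total


# Illustrative cutoff wavelengths (assumptions): 1 nm, 1 fm (~proton size), Planck length.
for label, wavelength in [("1 nm", 1e-9), ("1 fm", 1e-15), ("Planck length", 1.616e-35)]:
    k_max = 2 * math.pi / wavelength
    print(f"cutoff {label:>13}: rho = {zero_point_density(k_max):.3e} J/m^3")
```

Halving the cutoff wavelength multiplies the “vacuum energy” by 16, so the number is set by the cutoff, not by anything measured.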


Messenger particles definitely do not exist. Hydrogen atoms don’t twinkle. Magnets don’t shine. As for the researchgate paper, I’m afraid I stopped reading when I saw this: Excited water is the source of superconducting protons for rapid intercommunication within the body.

Also does this mean that every assumption based on QED is wrong??

I wouldn’t say every assumption based on QED is wrong. I’d say certain aspects of it are wrong. See for example my article on the hole in the heart of quantum electrodynamics. Of course, the $64,000 question is: how much of it is wrong? I find it hard to say, particularly since I think Topological Quantum Field Theory is essentially correct.

Alexander Unzicker has done a video on Oliver Consa’s paper here:


https://www.youtube.com/watch?v=wvz4MRpq6xs&t=630s


He also talks about the “collective insanity” of quantum field theory.

Unzicker opens a can of worms with his list of videos. One is a 3 minute proof that the earth is not flat. So he’s fair game to any criticism as far as I’m concerned.

The video documentary ‘American Moon’ pretty much proves the Apollo moon landings as being fake.

There is also a lot of experimental evidence that rockets don’t work in space.

I have issues as to whether orbital mechanics makes sense in the light of how ballistics works.

So pretty much anything NASA has done is BS.

Then we have nuclear bombs, for which there is hardly any evidence that they work other than photos from WW2, Trinity and tests.

Particle Accelerators…bah I see nothing but nonsense

ITER Fusion experiment…bah I see nothing but a make work project

Unzicker and ‘Physics detective’ are just controlled opposition. The physics fraud extends well into Newton, Kepler, Galileo…

Einstein has nothing at all to offer. Relativity is all bullshit.

Even the Kinetic Energy formula E = vmv/2 is complete nonsense. There is no experimental evidence to support it.

Lies to children? You and Unzicker just want to reestablish Einstein? Is this some kind of joke?

GREAT SCOTT! Marty my boy, Q-Anon has finally invaded the Physics Detective! Let’s call Buckaroo Banzai and His Lost Planetary Airman to the rescue while running to our survivalist bunkers. Because Earth Girls really are Easy, y’all……………

Maybe somebody is impersonating a previous poster, Greg. There’s a lot of odd people around these days.

Tell us about the fraud of the Kinetic Energy Formula in every high school text.

Some detective

It’s just a stopping distance thing, Physics Hermit. Apply some constant braking force, and momentum is just a measure of the time it takes to stop your cannonball in space. Kinetic energy is just a measure of the distance it takes to stop your cannonball in space. That thing we call c is just distance over time. A conversion factor. But momentum and kinetic energy are just two sides of the energy-momentum coin. Read this and work it through:


https://physicsdetective.com/the-mystery-of-mass-is-a-myth/
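The stopping-distance picture above can be checked with a minimal Python sketch, assuming a constant braking force (think evenly spaced cardboard sheets) and nothing else acting. It steps the cannonball forward in time until it stops, then compares the result with p/F for the stopping time and KE/F = ½mv²/F for the stopping distance. The mass, force, speeds and sheet spacing are made-up illustrative numbers.

```python
def stopping_time_and_distance(mass: float, speed: float, force: float, dt: float = 1e-4):
    """Step a body moving at `speed` through a constant braking `force` until it stops.
    Uses the exact constant-deceleration update within each step.
    Returns (stopping_time_s, stopping_distance_m)."""
    decel = force / mass
    v, t, x = speed, 0.0, 0.0
    while True:
        if v <= decel * dt:                  # final partial step: stop exactly at v = 0
            step = v / decel
            x += v * step - 0.5 * decel * step ** 2
            t += step
            return t, x
        x += v * dt - 0.5 * decel * dt ** 2
        v -= decel * dt
        t += dt


MASS, FORCE = 10.0, 1000.0   # illustrative: 10 kg cannonball, 1000 N braking force
SHEET_SPACING = 0.5          # hypothetical metres between cardboard sheets

for speed in (100.0, 200.0):
    t, d = stopping_time_and_distance(MASS, speed, FORCE)
    p = MASS * speed                 # momentum
    ke = 0.5 * MASS * speed ** 2     # kinetic energy
    print(f"v={speed:5.0f} m/s  time={t:.4f} s (p/F={p / FORCE:.4f})  "
          f"distance={d:.2f} m (KE/F={ke / FORCE:.2f})  sheets={int(d // SHEET_SPACING)}")
```

Doubling the speed doubles the stopping time (the momentum side) but quadruples the stopping distance and the number of sheets punched through (the kinetic-energy side).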

No, I don’t need to read anything. I don’t even need to understand physics and math at all.

The KE formula does not pass the smell test. Velocity squared indicates that it’s really important in determining “energy”. Yet I don’t see any evidence of that in real life. Pro bowlers aren’t throwing small balls really fast to knock down more pins. Small wrecking balls aren’t swung really fast to knock down buildings more efficiently.

It’s all bullshit peddled by Leibniz and some French woman. This is not Newton’s work, so why do you defend it? The KE formula is experimentally defended by some absurd clay experiments. The simple truth that the KE formula is total bullshit is enough to toss every single physics book into a bonfire. You can’t possibly be serious about physics defending such crap.

P.S. I’m the same guy as before who criticized Winterberg’s absurd fusion bombs.

But yet really small bullets travelling at extremely high velocities can transfer high kinetic energy to whatever they hit. Can not the mass/velocity ratios be adjusted to any balance/imbalance one wants? If you are interested in a relatively small, lightweight but dense projectile travelling at obscene velocities, then I highly recommend watching the declassified YouTube videos on the U.S. Navy’s railgun project. You too, John! (P.S., my youngest son Paul is a professional welder and has helped construct large parts of the overall assembly.)

“Can not the mass/velocity ratios be adjusted to any balance/imbalance one wants?”

What a bullshit answer. And what does railgun project have to do with this?

The question remains that p = mv is experimentally proven. KE = 1/2vmv has not been. In fact, in its history it started as vmv and was complete nonsense. Then the one-half was added in front of it. Still ludicrous. Physics hasn’t got a lick of credibility with the kinetic energy formula in every textbook. Lies to children. I guess the Physics “Detective” really doesn’t give a shit about the truth and neither do you.

I care a great deal about the truth. Like I said before, read https://physicsdetective.com/the-mystery-of-mass-is-a-myth/ . The ½v² in the kinetic energy equation KE = ½mv² is an integral. The easiest way to understand it is via a cannonball in space being slowed down by a constant braking force, provided by uniformly-spaced sheets of cardboard. When the cannonball is going fast it punches through more sheets of cardboard. The number of sheets relates to the stopping distance, and to the total damage it does, which relates to the kinetic energy. The momentum p=mv relates to the stopping time. Both the kinetic energy and momentum are two sides of the energy-momentum coin. You can’t take kinetic energy away from your cannonball without taking momentum away. Then you apply this to a wave, and take note of the wave nature of matter. After that you know that the Higgs mechanism is complete nonsense.
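The integral behind the ½ can be written out in two lines. With F = m dv/dt and dx = v dt, integrating the force over distance gives kinetic energy, while integrating it over time gives momentum (u is just the integration variable for the speed). The stopping-distance and stopping-time results on the right additionally assume the braking force F is constant.

```latex
\begin{aligned}
W &= \int F\,dx = \int m\,\frac{dv}{dt}\,v\,dt = \int_0^{v} m\,u\,du = \tfrac{1}{2}mv^2
   &&\Rightarrow\quad d_{\text{stop}} = \frac{\tfrac{1}{2}mv^2}{F},\\
I &= \int F\,dt = \int_0^{v} m\,du = mv = p
   &&\Rightarrow\quad t_{\text{stop}} = \frac{mv}{F}.
\end{aligned}
```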

My mass/velocity, balance/imbalance comment was in response to your own large/small, fast/slow bowling ball/wrecking ball analogies. In reality anyone can increase/decrease velocity/mass for various results, some results obviously better than others. Besides, you forgot to mention the practical reasons why people don’t bowl with 100 lb balls or bring down buildings with 50 ft diameter wrecking balls.

Also, you are the one who initially brought up ballistics, so I thought you might be interested in how and why a tungsten alloy bolt that weighs 3.2kg at rest can be launched to a velocity of 3,390 m/s for a whopping 18.4 MJ at first impact. So what formula and wording would you use to describe this fake Kinetic Energy?
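The figures quoted for the railgun bolt can be checked directly with KE = ½mv² and p = mv. The sketch below uses only the mass and speed given in the comment; the braking force is a hypothetical value, chosen purely to tie the numbers back to the cannonball picture (stopping distance = KE/F, stopping time = p/F).

```python
def kinetic_energy(mass_kg: float, speed_m_s: float) -> float:
    """Kinetic energy in joules, KE = 1/2 m v^2."""
    return 0.5 * mass_kg * speed_m_s ** 2


def momentum(mass_kg: float, speed_m_s: float) -> float:
    """Momentum in kg*m/s, p = m v."""
    return mass_kg * speed_m_s


mass = 3.2      # kg, projectile mass quoted in the comment
speed = 3390.0  # m/s, launch speed quoted in the comment

ke = kinetic_energy(mass, speed)
p = momentum(mass, speed)

# Hypothetical constant braking force, for illustration only.
braking_force = 1.0e6                   # N
stopping_distance = ke / braking_force  # m, from F * d = KE
stopping_time = p / braking_force       # s, from F * t = p

print(f"KE = {ke / 1e6:.1f} MJ")             # KE = 18.4 MJ
print(f"p  = {p:.0f} kg*m/s")                # p  = 10848 kg*m/s
print(f"d  = {stopping_distance:.2f} m")     # d  = 18.39 m
print(f"t  = {stopping_time * 1e3:.2f} ms")  # t  = 10.85 ms
```

Doubling the speed doubles p, and with it the stopping time, but quadruples KE, and with it the stopping distance.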

The so-called “renormalisation” of QED is based on another dubious “principle” of physics: gauge symmetry. Without the “gauge symmetry principle”, renormalisation does not work. Another very rotten aspect of physics is the forgotten Coulomb field propagation velocity. Measurements clearly proved that the Coulomb field (which is supposed to be a quantum entanglement of “virtual photons”) propagation velocity is much higher than the speed of light. According to QED it is even ‘infinitely fast’. However, the classical “gauge symmetrical” Maxwell-Lorentz field theory predicts that the Coulomb field also has a ‘retardation’ velocity equal to ‘c’. So the classical field picture that emerges from countless “virtual QED photons” is inconsistent with Maxwell’s Classical Electrodynamics theory (CED). However, an improved CED theory that correctly predicts a propagation velocity v>>c for the Coulomb field is no longer gauge invariant/symmetrical (!!), which consequently removes the ‘renormalisation premise’ of QED. See my paper on General Classical Electrodynamics.

Koen, I see it: https://www.researchgate.net/publication/311439664_General_Classical_Electrodynamics

.

Maybe vacuum fluctuations/energy are merely the propagation of analytic errors, in the same way that error terms in a computational fluid dynamics simulation can dominate the results and lead one to believe there is some fluid response where none exists in nature.

Little change of subject…

.

Didn’t you feel like writing about the most recent Nobel Prize in physics? I was sure that you’d have thoughts about that: the entanglement folks (of all people!) getting what the usual suspects call their long-overdue recognition, for having “proven Einstein wrong”.

.

In fact: that announcement was the very reason I remembered your homepage; which I never got around to reading in full. Mea culpa!

I thought: “that guy must have a strong opinion about that decision, mustn’t he? After what he said about the Higgs promoters being frauds!” (maybe not your exact phrasing)

.

Because they always left me wanting. And whenever I stumbled over their [thought] experiments, like with Greene (“The Fabric of the Cosmos”), all I could garner from them was that those people had weird ways (i) of tricking themselves (!) into believing very irrational ideas. Elaborate ways to circumvent their own critical thought…

.

They never had me convinced… Why, as with the famous “Bell inequality” (you don’t seem to mention the term in any article of yours?), should we ever expect what they pretend we have to expect?

Why explain like they say we have to explain?

Their interpretations seem like circular reasoning to me. (Fruitless, self-absorbed little mind-games!)

– their thought-experiments nonsensical.

.

Just yesterday, on the “Sixty Symbols” YouTube channel, I stumbled across their “entanglement” conundrum: how do they even arrive at a setup where, allegedly, they “turn” their “up or down spin” test by “120 degrees”? There seems to be so much INTERPRETATION in this (layers upon layers) that any presentation of their “experiments” always seems to stay caught up in the abstract, rather than giving me the actual setup…

.

One of the things that seems obvious to me is that their idea of a “random” decision by the particle to be “up or down” is one of the most bizarre assumptions in all of science!

Randomness does not exist in anything we interact with or experience in our lives, other than through subjective [mis-]interpretation (ii); why would it be any different on the quantum level?

Because “QM works in mysterious ways”?? You gotta do better than that! Their taste for “mystery” is antithetical to the very spirit of science/the scientific mindset!

.

“Extraordinary claims require extraordinary evidence”; yet they never provided that!

It never was EPR’s duty to prove “hidden variables” (although their attempt to come up with tests and solutions for that actually shows a true scientific spirit! A spirit alien to the followers of the Copenhagen interpretation, as it seems, and to their snarky leaders…), or to disprove a baseless assumption (the onus of proof was never on EPR!); it always was the “spooky action at a distance” believers, who kept us in limbo, who had to provide actual evidence for the argued true randomness of the particle and its “up/down spin” behavior under testing.

They were always good with their sophistry, though, to blind others to that deficiency, that gap in their logic.

.

Ultimately, I think it’s impossible to truly prove their bizarre, illogical assumptions by proving the very basis for them: erratic randomness!

For, to truly prove that THE VERY SAME particle could have gone EITHER WAY in its personal test, you’d have to be able to wind back spacetime itself, to repeat the “same” test with ACTUALLY the same particle under ACTUALLY the same conditions; perfectly the same!

… which obviously is impossible.

.

The rational approach, in the face of this observation, would be not to believe in that extraordinary claim, and to stick with more skeptical assumptions.

.

But, oh well!

What the hell do I know!

I am not part of the in-group, so obviously I am wrong, by merely bringing logic into this (what a weak armament!), where they have expensive laboratories and huge clapping audiences and publishing science magazines! (Oh! The authority!)

.

Anyway…

What do I make of it?

Like the cryptic information that the test setup for testing spin was spun 120 degrees, and why this or that result was to be expected?
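The 120-degree figure comes from the textbook counting argument popularized by David Mermin. The sketch below assumes his simplified setup: two detectors, each independently and randomly set to one of three settings 120° apart, with identical settings always giving matching results. It reproduces only the arithmetic behind the two expectations the comment asks about; it takes no side on what the numbers mean.

```python
from fractions import Fraction
from itertools import product

SETTINGS = range(3)  # three detector settings, 120 degrees apart


def match_rate_instruction_set(instructions):
    """Fraction of the 9 equally likely setting pairs that give matching
    results, if each particle pair carries a fixed 'instruction set'
    (one predetermined result per setting), as a local hidden-variable
    account assumes."""
    matches = sum(instructions[a] == instructions[b]
                  for a, b in product(SETTINGS, SETTINGS))
    return Fraction(matches, 9)


def match_rate_quantum():
    """Quantum-mechanical prediction for Mermin's setup: same setting ->
    always match; settings 120 degrees apart -> match with probability
    cos^2(60 deg) = 1/4."""
    same = Fraction(3, 9) * 1
    different = Fraction(6, 9) * Fraction(1, 4)
    return same + different


# Every possible instruction set: a result (0 or 1) for each of the 3 settings.
rates = {s: match_rate_instruction_set(s) for s in product((0, 1), repeat=3)}
print(min(rates.values()))   # 5/9
print(match_rate_quantum())  # 1/2
```

That is the whole of the “why this or that result was to be expected” step: predetermined results per setting give at least 5/9 matches, while the quantum calculation gives 1/2.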

.

– – – – –

.

i) “credo quia absurdum est” + “god works in mysterious ways”: a mode of rationalizing not all too different from theirs!

.

ii) the throw of a dice, for instance, is not governed by probability distributions but by hard facts; information that is too nuanced and unpredictable (for US!) to properly keep track of [at all times], so the best APPROXIMATION we can give is that of “random chance” and a probabilistic description.

The dice being thrown, however, is a hard fact, with a 100% determined outcome, depending on all the cold, hard (elusive) factors that play into it…

– like: which side was up? What’s the force of the throw? The direction? What’s the air pressure, humidity, weight of the dice & its proper balance, height of the throw and length of the table? Are there any obstacles to bounce off?

Looked at thoroughly, ANY idea of “chance”, of “randomness”, really only boils down to a subjective lack of information! (And the approximations we make to work around that deficiency!) In fact, it may be the most basic definition of random chance: a subjective lack of information for the observing mind making the predictions! (Nature doesn’t care about our predictions, though…)
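The dice argument can be illustrated with a fully deterministic chaotic map. This is a toy stand-in, not a model of real dice: the logistic map x → 4x(1 − x) is assumed here as the “throw”, and the die face is read off the final state. The same starting state always gives the same face, while a starting state off by one part in ten billion soon follows an unrelated trajectory, so the outcome only looks random to someone who cannot measure the start that precisely.

```python
def throw(x0: float, steps: int = 60) -> int:
    """Deterministic toy 'die throw': iterate the chaotic logistic map
    x -> 4x(1-x) from an initial state x0 in (0, 1), then map the final
    state onto a face 1..6."""
    x = x0
    for _ in range(steps):
        x = 4.0 * x * (1.0 - x)
    return min(int(6 * x) + 1, 6)  # clamp in case x == 1.0


def trajectory(x0: float, steps: int) -> list:
    """All intermediate states, to compare two nearly identical starts."""
    xs = [x0]
    for _ in range(steps):
        xs.append(4.0 * xs[-1] * (1.0 - xs[-1]))
    return xs


start = 0.3
nudged = start + 1e-10  # a difference far below any realistic measurement

# Same initial conditions -> same outcome, every time.
print(throw(start) == throw(start))  # True

# Nearly identical initial conditions -> the trajectories separate.
a, b = trajectory(start, 100), trajectory(nudged, 100)
print(max(abs(p - q) for p, q in zip(a, b)) > 0.1)
```

Nothing in the code draws on a random number generator; the apparent chance comes entirely from not knowing the starting state well enough.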

Honestly: their approach to “explaining” (credo quia absurdum est) how nature works reminds me more of a bookie (!) giving odds on the outcome of a sports game for us all to place bets on; which is very different from the people in the know, like the football experts, the medical staff, the weather gods or the cold hard ball-physics. An approximation good enough for the given task, but no more than that!

QM, to me, does not seem to be concerned with how the world (!) works, but only with how QM works (iii). A seemingly small, but most important, difference with huge consequences…

.

iii) I think there was a quote to that very effect from the same guy to whom the infamous, snarky “don’t tell god what to do with his dice!” has (erroneously or not?) been attributed. But I can’t quite remember his words right now.

Anyway: he DIDN’T EVEN TRY to disprove that “god does not play dice”; but having a snarky, ignorant comeback is all the rage with certain people! It’s all about image and perception (and popularity and publication and fame)… not the mundane, boring work of skeptics and natural philosophers…

All good stuff, CG. To be honest, I missed the press release. I have a Telegraph subscription, and their science coverage is pretty good. But I don’t think they covered this. If so, I think that says something about the state of physics. But I could be wrong about that, particularly since I’ve been under continuing pressure on other fronts of late. I have had my head down, and didn’t hear about this from other sources. Yes, I will write about it. With a heavy heart, because it is pseudoscience masquerading as bona-fide physics, and because Alfred Nobel has done far more harm with his prizes than he ever did with his dynamite.

.

PS: I’m John Duffield, see https://physicsdetective.com/about/.

CG: “Hear! Hear!”

Bring forth all of the “weak armament of pure logic” you and John can muster!

@G.R.+L

Not sure if sarcasm… (i)

Anyway: who’s John?

I associate “Dunnfield” (???) and remember a name like that posting on this page; I assumed (75% certain) that he is the “physics detective” himself, but never got around to confirming that.

The fact that I read both names commenting under one and the same article leaves room for doubt.

.

– – – – –

.

I am not a trained physicist, so “the usual suspects” would quickly dismiss anything I have to say on the matter… And as a German, I don’t have English as my first language (more like “1.5th” than “2nd”, though, as I am a former exchange student who still regularly thinks in English; when I read books on physics I usually prefer the English originals, as with Hollywood movies… and many YouTubers), so the ironic/unironic intention of “hear hear” always confuses me!

CG : I am sincere in my comments, I agree with you 100% . I also am not a scientist, nor an academic for that matter. Just an Every Day Joe living here in rural Amerika.

John : AKA Farsight, AKA The Physics Det., is my go-to source on the subject of physics, as well as most things science. The only subject he is consistently confused about is Amerikan Politics. LOL 😆!

I have also started to reread John’s book PHYSICS+ with much delight. It’s a great book for laymen like us, but has enough bona-fide math and science to hopefully change the minds of academic audiences who subscribe to the Standard Theory. I have always found his historical research to be meticulous and accurate. Very few other experts can honestly make that same claim.

Why thank you Greg!

.

In essence this website is a development of my self-published Relativity+ dating from 2009, but it’s free.

RE : RELATIVITY +, The Theory of Everything by John Duffield, Caric Press Lmt., ISBN: 978-0-9560978-0-4.

I also gave a stern lecture to my proofreader; hopefully these embarrassing typos will come to an end…

Nay bother Greg. It’s not embarrassing, these things happen.

Before I forget about it (I’m not here for this specific purpose, though):

there is a certain YT channel that I stumbled across months (?) ago with all sorts of videos on physics and cosmology; it confronted me with a video (you may want to check it out yourself) which seemed to contain some of the very same paragraphs, verbatim, as if reading from the same source; as if copying this very text of yours?

Maybe it just has to do with the original article (?) by O. Consa itself, which you also (?) quoted from (without quotation marks?), so you are both “guilty”, if anything, of repeating his very sentences.

The channel is called “See the pattern” and the video was called “Quantum Electrodynamics is rotten at the core”; it’s almost half an hour long, but you’ll see what I mean very early.

CG: I will take a look.

.

I’m not “guilty” of repeating his very sentences. This whole article is all about giving the guy kudos for a great job well done. Note that he gave a positive comment above.

Is it just a coincidence that your snippet of the Karplus and Kroll paper shows two mathematical errors, one of which will affect the calculation? First off, L1 says 3*zeta(3), which should be 2*zeta(3). Still, they have the right numerical result listed.

However L2 is just wrong. The integral equals zeta(3)/4 exactly. The integral does not equal their expression nor their result. They either left out a term or miswrote the infinite series. It should be 0.3005142257898…

So that is an error of about -0.000051274, which multiplied by 49/3 gives -0.0008374787666. Not sure how much this affects the final calculation.
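The arithmetic in this comment can be reproduced in a few lines. This sketch assumes only the commenter’s stated values: ζ(3)/4 as the correct value of the integral, the quoted error of -0.000051274, and the factor 49/3. The Karplus and Kroll expression itself is not reproduced here, and ζ(3) is computed by a partial sum because Python’s standard library has no zeta function.

```python
import math


def zeta3(terms: int = 200_000) -> float:
    """Apery's constant zeta(3) = sum of 1/n^3, by a partial sum plus the
    integral estimate 1/(2 N^2) for the tail beyond N terms."""
    partial = math.fsum(1.0 / n**3 for n in range(1, terms + 1))
    return partial + 1.0 / (2.0 * terms**2)


correct_value = zeta3() / 4
print(f"{correct_value:.13f}")  # 0.3005142257899

quoted_error = -0.000051274     # error quoted in the comment
propagated = quoted_error * 49 / 3
print(f"{propagated:.10f}")     # -0.0008374753
```

The small difference from the comment’s -0.0008374787666 is consistent with the comment having carried a few more digits of the error than the ones shown.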

John: yes, it’s just a coincidence. I didn’t go through the paper in detail; I used it to reiterate Oliver Consa’s point. Gosh, I wrote this article more than three years ago. How time flies.

.