Scott Aaronson is a Computer Science professor at the University of Texas at Austin. See his Wikipedia entry, which says he’s the founding director of UT Austin’s new Quantum Information Center. Aaronson is into quantum computing in a big way, and is a famous blogger on the subject. His blog is called Shtetl-Optimized, and one of his recent posts was called Quantum Information Supremacy. I take an interest in this sort of thing because I have a Computer Science degree, and I know a thing or two about fundamental physics. Hence I thought I’d take a look.

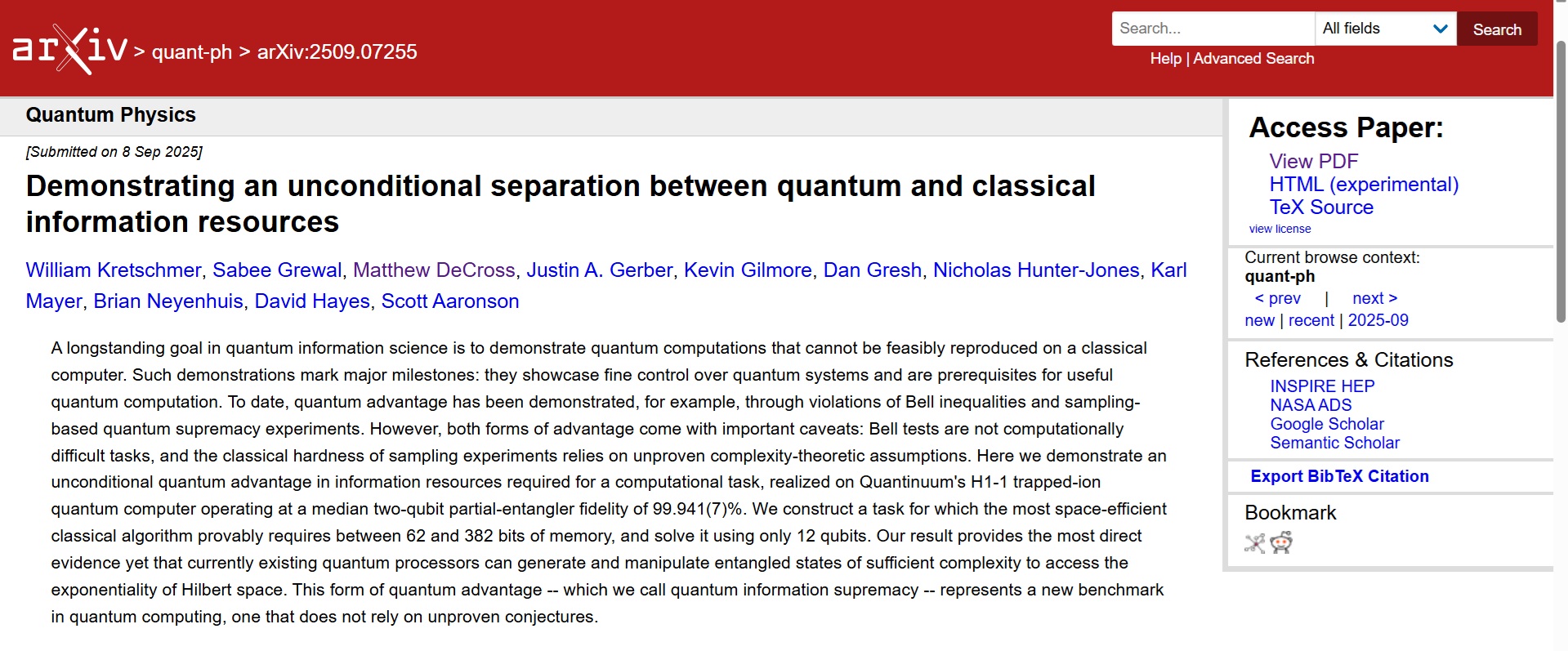

Demonstrating an unconditional separation between quantum and classical information resources

Aaronson’s Quantum Information Supremacy post is about a paper he co-wrote with ten other authors. It’s called Demonstrating an unconditional separation between quantum and classical information resources. Aaronson said he was thrilled about it, and that it’s based on a collaboration between UT Austin and a corporation called Quantinuum. He thanked his Austin colleagues William Kretschmer, Nick Hunter-Jones, and Sabee Grewal, saying Kretschmer led the theory and wrote much of the code, and Hunter-Jones and Sabee Grewal made important contributions too. He also thanked the team at Quantinuum “for recognizing a unique opportunity to test and showcase their cutting-edge hardware, and collaborating with us wild-eyed theorists to make it happen”. It would seem that Aaronson and his UT colleagues did the theoretical stuff, and the Quantinuum guys did the practical stuff. Whatever, Aaronson then gave the abstract of the paper:

Screenshot from the arXiv, see https://arxiv.org/abs/2509.07255

Screenshot from the arXiv, see https://arxiv.org/abs/2509.07255

The abstract started by saying a longstanding goal in quantum information science is to demonstrate quantum computations that “cannot be feasibly reproduced on a classical computer”. It referred to this as called quantum advantage, saying such demonstrations mark major milestones. It also said said quantum advantage has previously been demonstrated through Bell tests and sampling experiments. However it qualified this by saying the Bell tests are not computationally difficult, and the “hardness” of the sampling experiments is unproven. It went on to say the current paper is about a better demonstration of quantum advantage realized on Quantinuum’s H1-1 trapped-ion quantum computer “operating at a median two-qubit partial-entangler fidelity of 99.941(7)%”. The abstract said the team constructed a task which would need between 62 and 382 bits of classical memory, and solved it using only 12 qubits. It then said this was the most direct evidence yet that quantum processors “can generate and manipulate entangled states of sufficient complexity to access the exponentiality of Hilbert space”. The abstract finished up saying “this form of quantum advantage – which we call quantum information supremacy – represents a new benchmark in quantum computing”. Aaronson then finished up his blog post saying he’d be happy to field questions about the paper in the comments section. There were 160 comments, which is good. What’s not so good is that many of them concerned the Many Worlds Interpretation which says we live in a multiverse, and superdeterminism which says we have no free will. Personally I find it strange that some people seem to prefer myth and mysticism to empirical science, but such is life.

A Hilbert space is an abstract mathematical space which is flat and Euclidean, but has more than three dimensions

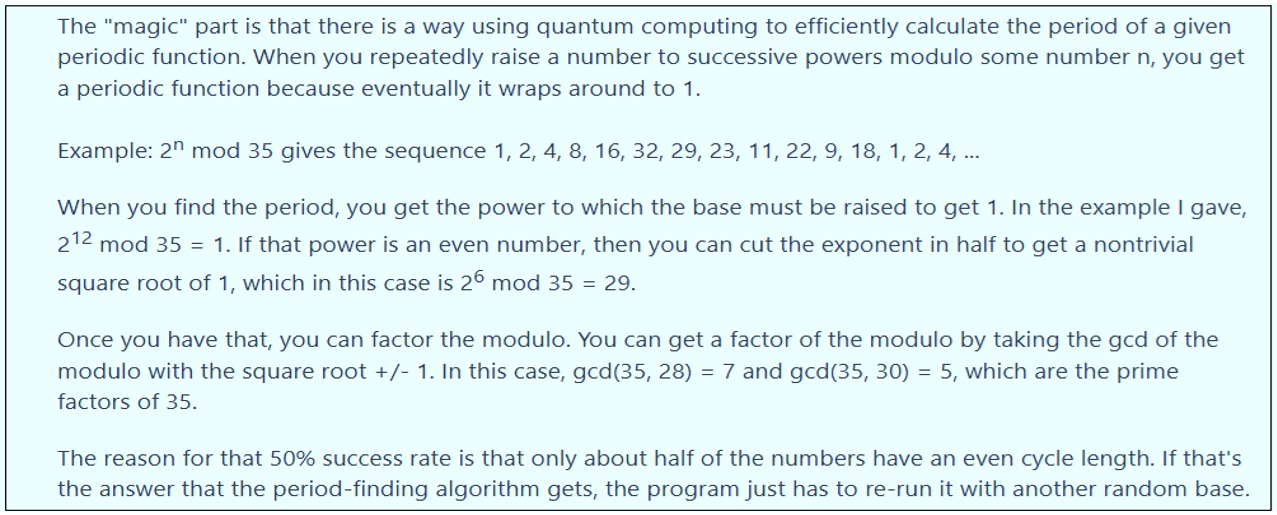

The paper itself looks daunting, because it’s 42 pages long with 97 references. However as Aaronson pointed out to me in his comments, the main part of the paper is only 5 pages long, and the rest is supplementary material. Page 1 started by saying quantum theory postulates that “a system of n particles is represented by a vector in Hilbert space with exponentially many continuous parameters”. I should explain that a Hilbert space is an abstract mathematical space which is flat and Euclidean, but has more than three dimensions. In quantum computing a Hilbert space is said to have 2n dimensions where n is the number of qubits. The idea is that if you add one qubit you double the number of dimensions. The paper then said the goal of quantum computing is “to determine when and how this exponentiality can be harnessed to perform computations that are infeasible for classical computers”. It referred to Shor’s algorithm, which dates from 1994, and said skeptics think the exponential speedup it’s supposed to offer is not be achievable in practice.

Explanation of Shor’s algorithm by minno, see Reddit

Explanation of Shor’s algorithm by minno, see Reddit

It also said at the heart of the skepticism is the belief that “physically realizable quantum systems cannot be engineered to exploit the exponential dimensionality of Hilbert space”. It added that reasons for this skepticism range from minor issues of decoherence and error accumulation, to the major issue of whether quantum mechanics is complete. The latter strikes a chord with me. The paper also said the question of whether Hilbert space is physical has been debated since the early days of quantum mechanics. As per the abstract, it then referred to Bell tests and sampling experiments, and then asked two questions: whether it’s possible to experimentally demonstrate an unconditional quantum advantage, and “whether a quantum device can perform a computation that establishes the exponential dimensionality of Hilbert space as a physically accessible resource”. It then said the answer to both questions of these questions was yes. I was surprised at that myself. I think it’s one thing to say qubits offer an advantage over bits, but something else to say it’s because Hilbert space is real.

This yields an unconditional separation between quantum and classical information resources

But no matter. On page 2 the paper said the team used “the tools of one-way communication complexity” to formulate a computational task for which a classical algorithm requires at least 62 bits of memory. It went on to say the team solved the task with only 12 qubits, and that “this yields an unconditional separation between quantum and classical information resources”. It also said “our result provides direct evidence that today’s quantum hardware can prepare quantum states of sufficient complexity to access the exponentiality of Hilbert space”. Again, there’s that claim that Hilbert space is somehow real. I’m all for thinking outside the box and breaking the rules. For example I think there’s a way to get round conservation of charge. So I’m all for solving tasks in novel ways. For example if you had a tea tray with two sliding sides, you could pour 400 ball bearings on to the tray, then you could jiggle and slide to find that 20 is a factor of 400:

Slide-side tray factoring depiction by me

Slide-side tray factoring depiction by me

This would be an analogue computer of sorts, and if you could contrive it via trapped-ion electronics to emulate Shor’s theorem, that’s great. Then since quantum means how much, I’m happy if you want to call it a a Quantum Processing Unit (QPU) or a quantum computer. But please don’t bullshit me with infinite-dimensional Hilbert space. I wasn’t born yesterday.

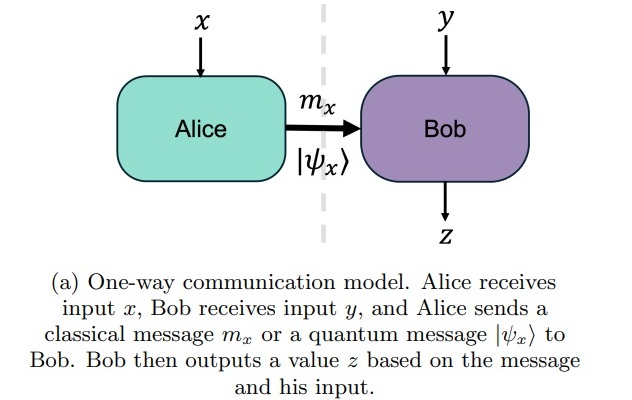

From Communication Complexity to Quantum Information Supremacy

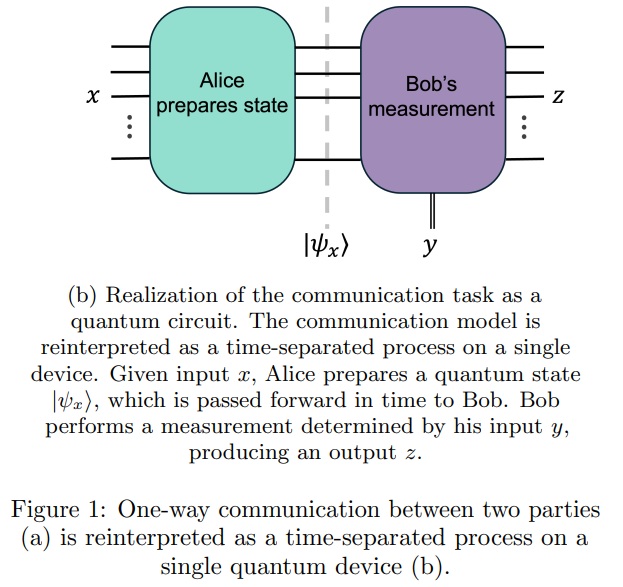

The paper then talked about a “one-way communication task” wherein Alice and Bob “wish to jointly compute a function on their inputs”. Alice receives an input string x whilst Bob receives an input string y. Then Alice sends message mₓ to Bob, who performs a computation to output a correct result f(x, y) = z. The goal is to minimize the length of mₓ. The paper said the challenge “is to identify tasks for which the communication required is significantly smaller than the input size” and “the use of a quantum message |ψₓ⟩ can provably reduce the amount of communication required by an exponential factor”. To paraphrase, Alice could pass her full input string to Bob, but that’s akin to classical computing. The idea is that a shorter message demonstrates a quantum advantage.

Figure 1 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

Figure 1 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

However the paper then changed tack to say instead of Alice and Bob separated in space, they’re in the same place at two different times t₀ and t₁. It said “in this analogy, the communication bottleneck between Alice and Bob becomes a limitation on the amount of information that the device can transmit across the temporal boundary”. My brow furrowed at that, because I’ve previously done some detective work on time. If I have some information right now, I don’t have to transmit it to myself across some “temporal boundary”. There is no temporal boundary. I already have the information. I just sit and wait. Or maybe I don’t wait at all.

Figure 1 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

Figure 1 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

The paper asserts that “the basic premise of quantum information supremacy is to recast a quantum advantage in communication as an advantage in storage”. The trouble with that is that now there is no Alice and Bob. It’s just Alice. Alice receives string x, prepares message |ψₓ⟩, then combines it with a second string y to produce result z. Nevertheless, the idea is that a “quantum resource” needs n qubits to store the message, whilst a “classical resource” needs exponentially more bits. For an analogy, consider the trinary bit, also known as a trit. It can have three states -1, 0, or 1. If you stored your message in trits instead of bits, you’d need fewer trits than bits. And so on.

Improved Quantum-Classical Separations

Rather surprisingly the paper then backtracked from storage to communication. It said quantum advantage in one-way communication has long been known, but “separations are insufficiently strong to achieve nontrivial quantum information supremacy”. I don’t buy that. A spatial separation is a damn sight stronger than a non-existent “temporal boundary”. The paper then said “to remedy this, our chief theoretical contribution is a new one-way communication problem that can be solved using n qubits of quantum communication”. That’s as opposed to circa 2n bits of digital communication. The paper described this new problem as “a distributed version of linear cross-entropy benchmarking (XEB)”. Look it up. XEB is not some real world problem related to factoring or cryptography. It’s “a method for assessing the performance of quantum processors”. That’s not good. Also note that it “has been used as a test for procedures simulating quantum circuits”. Simulating quantum circuits isn’t good either.

A linear cross-entropy benchmarking fidelity is a metric used to evaluate the performance of quantum circuit simulators

On page 3 the paper said “the task asks Alice, who holds a quantum state, and Bob, who holds a quantum measurement, to work together to produce bit strings that are high-probability outputs of Bob’s measurement on Alice’s state”. High probability? What happened to the correct result? The paper then said Alice’s input x is a classical description of some n-qubit quantum state |ψₓ⟩, and Bob’s input y, which is really Alice’s second input, is “a description of an n-qubit measurement in the form of a circuit Uᵧ”. The task is to produce length-n bit strings z “whose distribution, over many repetitions of the problem, achieves high linear cross-entropy benchmarking fidelity”. So Alice gets a digital input, turns it into qubit states, then since there is no Bob, Alice combines that with another digital input to produces a digital output. This is then repeated many times to yield a “high linear cross-entropy benchmarking fidelity”. Which can be spoofed. And is a metric used to evaluate the performance of quantum circuit simulators. Not only that, but the proof is not some clear-cut demonstration like me wiring up a minitron with fewer DILICS than Tom Kilburn thought possible. It’s statistical.

Let Alice’s state be sampled from the Haar measure over n-qubit states

Hence my eyes narrowed as I read on. And I quote: “The quantum protocol for this task is simple: Alice’s message to Bob is |ψₓ⟩, and Bob processes the state by applying Uᵧ and measuring in the computational basis. Assuming that the state |ψₓ⟩ and circuit Uᵧ exhibit sufficiently strong anticoncentration, the FXEB achieved by implementation on a quantum device is well-approximated by the fidelity of the device”. A quantum protocol is a set of rules and methods used in quantum communication. A computational basis refers to the set of standard states used to represent qubits in quantum computing. An anticoncentration refers to how evenly a quantum state spreads across all possible outcomes. Do keep up boys and girls. There’s then a reference to a 7-page 2019 Nature paper Quantum supremacy using a programmable superconducting processor, and to a 3-page appendix H2:

Screenshot from page 34 of Demonstrating an unconditional separation between quantum and classical information resources

Screenshot from page 34 of Demonstrating an unconditional separation between quantum and classical information resources

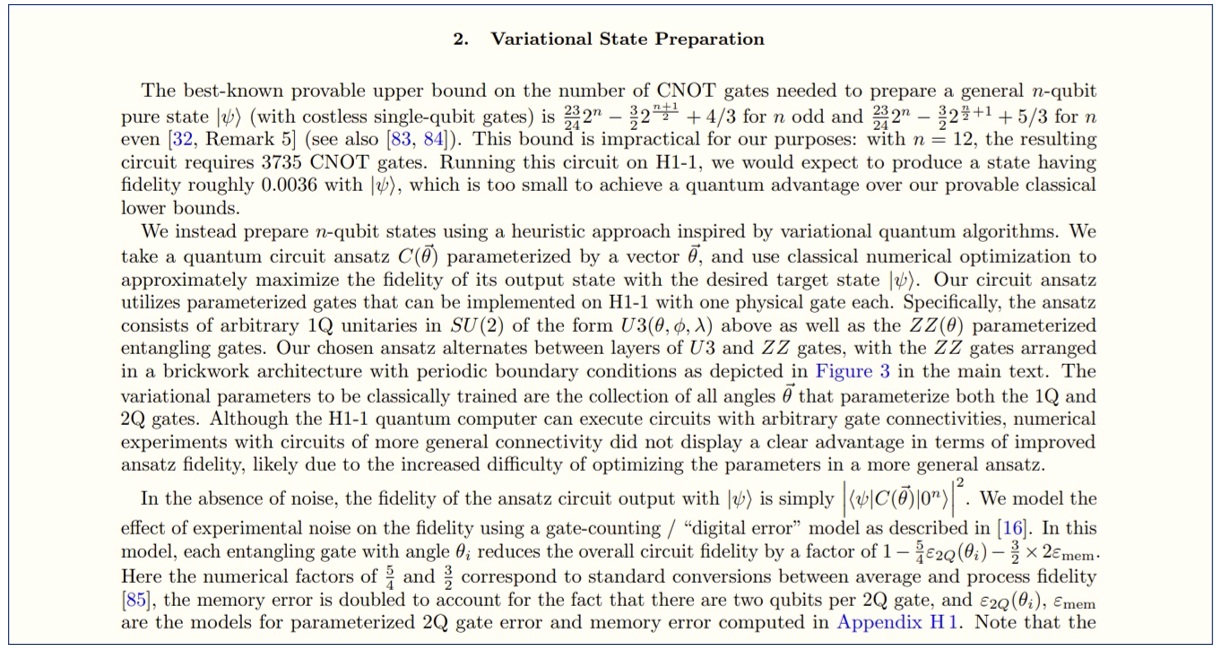

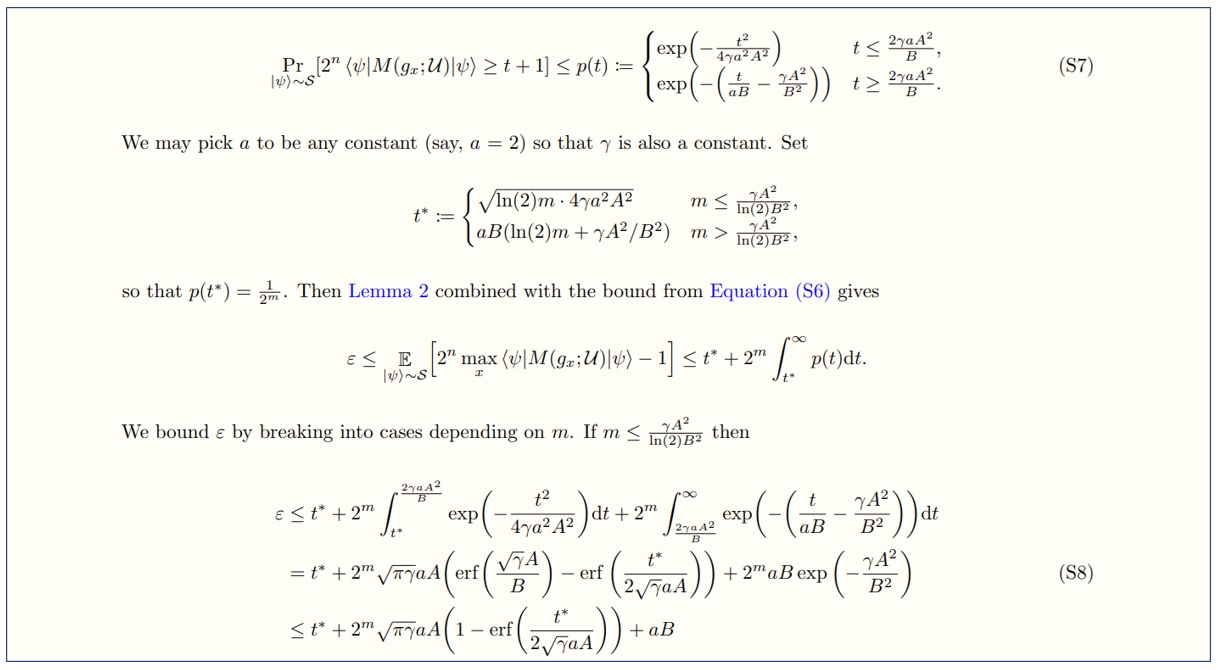

Then there’s this: “Theorem 1: Let Alice’s state be sampled from the Haar measure over n-qubit states, and let Bob’s measurement be sampled independently from the uniform distribution over n-qubit Clifford measurements. Any classical protocol that achieves average FXEB ≥ ε with respect to this input distribution must use at least min{Ω(ε²2n), ε2n−O(√n)} bits of information”. I’m sure you all understand that, don’t you boys and girls? Ah, I see you’re all nodding. Right then, you boy, Powell, step up here and explain it to the rest of the class. LOL, I know where this is going. Anyway, after that there’s then a reference to the 7-page appendix E4, a reference to the 3-page appendix G, and just as I expected, a graph:

Figure 2 from Demonstrating an unconditional separation between quantum and classical information resources

Figure 2 from Demonstrating an unconditional separation between quantum and classical information resources

For the cherry on top, the paper said theorem 1 “witnesses a quantum advantage with as few as n = 7 qubits, compared to n = 9 for the best previously-known separation”. That’s fewer than the number of terms in the theorem 1 expression. But don’t worry boys and girls, because complementing theorem 1, a classical protocol would need circa ε2n/ln(2)²n bits, and it’s all explained in the 5-page appendix F. What’s not to like?

Implementation and Experimental Results

After that, the paper talked about the implementation and the results. It said they used the Quantinuum H1-1 quantum computer “which provides 20 fully connected trapped-ion qubits”. It referred to Quantum Charge Coupled Device (QCCD) architecture. This is said to be analogous to a CCD camera. The CCD camera “stores and processes imaging information as movable electrical charges in coupled pixels”. The QCCD “stores quantum information in the internal state of ions that are transported between different processing zones using dynamic electromagnetic fields”. See the Wikipedia article on trapped-ion computers for background information. Also see appendix H where you can read about Ytterbium qubit ions and Barium coolant ions, plus hyperfine states along with Bloch sphere rotations and fluorescence measurement. The moot point is that the Quantinuum H1-1 quantum computer has 20 qubits. Meanwhile there are 256 gigabytes of storage on my Samsung A35 phone. That’s over 2 trillion bits. Spot the difference.

Generating exact Haar-random states is infeasible

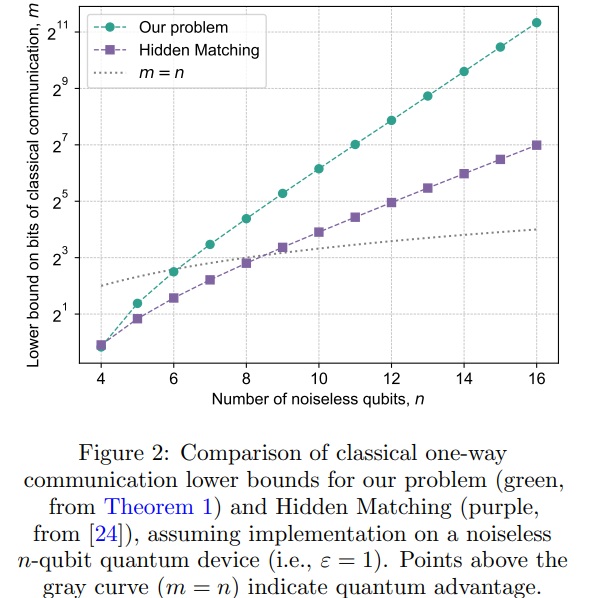

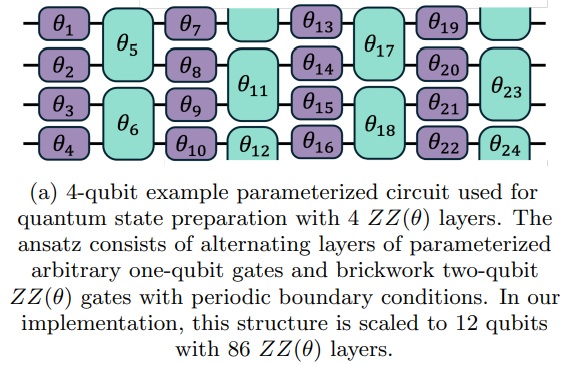

The paper talked about the H1-1 two-qubit gates being a source of errors, along with memory errors incurred during qubit idling, transport, and cooling. There are of course no such issues in digital computers. The paper also talked about noise, then Haar-random states derived from a Haar measure. It said generating exact Haar-random states is infeasible on near term quantum hardware, so they “variationally train a parameterized quantum circuit to approximate Alice’s Haar-random message”. The paper then it talked about “brickwork ansatz” circuit consisting of one-qubit gates and two-qubit gates, with 86 two-qubit layers in total. The one-qubit gates are purple, the two-qubit gates are green:

Figure 3 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

Figure 3 part (a) from Demonstrating an unconditional separation between quantum and classical information resources

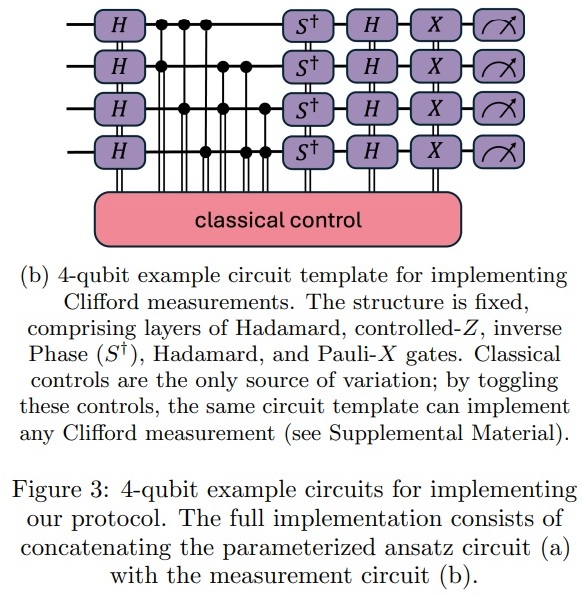

It then talks about Clifford measurement. As I’m sure you all known that’s a type of randomized quantum measurement that uses a circuit of Clifford gates to estimate properties of a quantum system. The paper also talks about classical control bits used by a field-programmable gate array (FPGA), which is a classical processor. This uses the classical control bits to trigger QPU gate operations via RF-modulated laser pulses routed through optical fibres.

Figure 3 part (b) from Demonstrating an unconditional separation between quantum and classical information resources

Figure 3 part (b) from Demonstrating an unconditional separation between quantum and classical information resources

As Craig Gidney pointed out in the comments, these control bits are not included in the 7-qubit count, and it’s impossible to build a quantum computer without a control system. He also pointed out that 5 bits of storage is sufficient to evaluate complex functions by using enormously long sequences of instructions. But never mind such minutiae, the paper then said the experiment requires a source of true randomness to generate inputs x and y, otherwise theorem 1 doesn’t hold. This doesn’t sit well with what they said about training “a parameterized quantum circuit to approximate Alice’s Haar-random message”. The paper also said the experiment achieved an XEB fidelity ε of 0.427 computed from 10,000 independent trials. It then said “applying theorem 1 with n = 12 and ε = 0.427 implies that any classical protocol achieving the same average score would require at least 78 bits of memory”. The paper then talks about five standard errors, which is similar to the fabulous Higgs boson’s famous five sigma. It said if this was applied, then 62 bits would be needed.

Discussion and Future Work

The final section of the paper is a round-up. The authors said “we performed a task using 12 qubits on Quantinuum’s H1-1 trapped-ion quantum computer, for which any classical protocol achieving comparable performance provably requires at least 62 bits of memory”. They said this establishes “an unconditional experimental separation between quantum and classical information resources”. They also said their results are provable and permanent, and that their competitors have not properly demonstrated quantum advantage. They also referred to the first Bell test experiments, saying they contained loopholes that were only closed in later experiments. Then they said “our experiment is subject to similar caveats that future work may address or eliminate”. They went on to say a skeptic could say that the experiment “did not maintain a true temporal separation between Alice and Bob’s inputs”. Or challenge whether the QPU truly realizes a 12-qubit Hilbert space. Or whether a highly-entangled 12-qubit state is a low-entanglement state in a larger space.

The Emperor’s New Clothes.

What they didn’t say is that a skeptic would point out that the abstract says the team constructed a task and solved it using only 12 qubits, but that’s not actually what they did. Or that a skeptic would point out that there is no Bob, so there is no communication. But there are lies, damn lies, and then there are statistics. And that a 20-qubit experiment should provide scientific evidence, and should not rely upon a mathematical “proof”. Especially when the latter is comprised of five theorems concreted together into a 20-page Westeros wall of defensive mathematical handwaving:

Screenshot from page 19 of Demonstrating an unconditional separation between quantum and classical information resources

Screenshot from page 19 of Demonstrating an unconditional separation between quantum and classical information resources

A skeptic would point out that there are such things as blarney, bullshit, sophistry, obscurantism, and moonshine. A skeptic would take you through the history and point out that Bell cooked up up a smoke-and-mirrors mathematical “proof” using probabilities adding up to one, then used this to claim that classical physics must yield a straight-line result rather than a cosine curve. A skeptic would point out that the cos² θ is there because Malus’s Law applies when you send photons through polarizers A and B regardless of whether the light goes through them like →→ or like this ←→. A skeptic would point out that the Nobel Bell tests performed by Clauser and Freedman and by Aspect et al, used cascade photons that were emitted at different times, and so were not entangled at all. Hence a skeptic would point out that there is no quantum entanglement. Then he’d tell you a horror story about quantum computing and the quantum quacks, and fairy tales about quantum echoes and The Emperor’s New Clothes. All together now boys and girls: the king is in the altogether, the altogether, the altogether, he’s altogether as naked as the day that he was born.

I know absolutely nothing about computing, but this is one classroom boy who’s head is spinning from all the hyped up semantics : not nodding in agreement!

Westoros size walls 🧱 and lies,damn lies and made up statistics indeed !

This paper sounds much more like a click bait, marketing ad, rather than a truly serious peer reviewable tome.

Noted, Greg. I suspect the people who get funding for this kind of stuff are very keen to persuade people that they’re doing useful productive work. And some people just lap it up, even when they don’t understand it at all. See this comment.

Pump and dump!

Huh. More like pump and pump.

Thank you for hard work, detective. Hope reason will prevail in these matters.

Thanks Tony, but don’t hold your beath. Quantum computing has been kicking around since 1982. That’s 43 years and counting. The people who have staked their reputations on it will never admit that the fundamentals just aren’t there, and that it will never ever deliver. What I particularly dislike is that this so-called “quantum technology” sucks up funding that could be put to better use. Such as research into optical computing, which uses displacement current. Displacement current is more fundamental than conduction current.

Yes sir, right I can explain that! The degenerated Knuter valve is connected to the virtual framistat leading to the conclusion that more funding would certainly lead to exponential quantum improvement…

LOL, spot on Steve! I hope you noticed that you got a mention in the article! I used to be in Mr Newton’s classroom. Now it would seem that I’m the supply teacher who fills in with no qualifications .

Fresh off the presses :

Read “China solves ‘century-old problem’ with new analog chip that is 1,000 times faster than high-end Nvidia GPUs” on SmartNews: https://l.smartnews.com/p-6v75pD3O/0Gx86o

That looks like good stuff Greg, thanks. I think there’s plenty of room for analog chips in computing.

More scary stuff. The Ohio State U. is also chock full of Mandarin speakers too. One even got a Noble Nobel Golden Calf Medal a few years back.

Read “3 more Chinese researchers at University of Michigan charged over alleged effort to smuggle biological materials: ‘Alarming pattern’” on SmartNews: https://l.smartnews.com/p-6x8BU30k/ecAoOX

That’s not good, Greg. What biological materials? I read about it on another source but it didn’t say. It mentioned petri dishes which suggested bacteria, or maybe fungus or virus, but what, exactly?

Another interesting computer tidbit, and the main reason I’ve never used ticktock and immediately delete from memory on all new phones/devices!

Read “Norway discovers that its Chinese electric buses can be remotely disabled” on SmartNews: https://l.smartnews.com/p-6w1QORCU/jJWC4g

That is creepy Greg. It just goes to show. Here’s the story in another outlet:

https://www.carscoops.com/2025/11/norways-public-buses-can-be-shut-down-remotely-from-china/

Thanks for the heads up.

A very interesting topic touched here! When I worked in electron paramagnetic resonance the problem of simulation of spectra was connected with inversion of very big matrix. The most discussed method was a trick like Tikhonov regularization (replacement of real matrix to very similar but much easier to invert). However, it was also shown that all the present day standard algorithms like used in Matlab are not working properly because the computer errors (rounding errors) are accumulating and even for small matrix like 100X100 the calculation of the inverse matrix is very poor (in a sense you multiple A and A inversed and instead of diagonal matrix got the junk – diagonal and off-diagonal elements are almost the same). But if somebody is not lazy and go deeper to understand the origin of errors it is possible to create a better algorithm working under home made program (working away from Windows completely to prevent some of the rounding errors). In such algorithm the same laptop easily inverses 10000X10000 matrix almost perfectly. Just for fun – calculation of inverse matrix is essential for code breaking (and quantum computer was designed for that purpose from the very beginning). That was in year 2002, 23 years ago. Most probably for some practical applications today’s computers are still not fully optimized and if the deeper diving into code is made the problem may be solved today without any quantum supremacy.

Interesting stuff Dmitriy. Thanks. I learn something new every day. I do think it’s rather said that Microsoft came to dominate the personal computer software market.

Then I’ll commence to do more digging on this said subject, John.

W.T.F. !

Evidently the most recent U.of Michigan scandal concerned genetically altered ROUNDWORMS ? Puke !!!

This article concerns foreign invasive fungus spores, which is a truly diabolical scheme indeed!

https://abcnews.go.com/Politics/2-chinese-nationals-charged-smuggling-potential-agroterrorism-fungus/story?id=122454213

Thanks Greg. Fungus spores? I am reminded of The Last of Us: I enjoyed season 1, but thought season 2 was not good and stopped watching.

“The Last of Us is an American post-apocalyptic drama television series created by Craig Mazin and Neil Druckmann for HBO. Based on the video game franchise developed by Naughty Dog, the series is set decades after the collapse of society caused by a mass fungal infection that transforms its hosts into zombie-like creatures.”

I’ll definitely will check out The Last of Us. The Defiance series is another better than average production :

https://en.wikipedia.org/wiki/Defiance_(TV_series)

Hey Physics Detective, I think the best word to sum up all this quantum computing is: “Contrived”. They are setting up these artificial parameters to get some random and meaningless result, and then concluding that a normal computer would take much longer or need more computational power to achieve this ‘result’. If you flip a coin 1 million times, by chance you could get some lucky pattern, but that result is not reproducible or useful. Total quackery as far as I’m concerned, unless they can solve a tangible and meaningful real world problem.

.

If it’s based on entanglement then it is going to be BS, because entanglement does not exist. That is the fundamental lie which the entire quantum house of cards is built on. If you believe in entanglement then you believe in action at a distance and thus non-locality, which means you do not believe in reality and are delusional.

I agree, Andy. I started going through this paper with an open mind. I thought OK, there’s no entanglement, but maybe there’s some way they were using the trapped ions as some kind of “superbit” that could somehow record multiple states at once. Such as orientation, spin, polarity, et cetera. Or that maybe there’s some way they’ve managed to rig up an analogue computer, like my slide-side tray. But no. The multi-dimensional Hilbert space was the first red flag, and after that it was all downhill all the way. There was no substance to it. Every time I hoped to get my teeth into something the nitty gritty was buried somewhere else in some obtuse appendix. The problem turned out to be a pseudo-problem, and it was all will o’ the wisp stuff that evaded empirical analysis. And deliberately obtuse to boot, just like Bell’s paper. Oddly enough at one point when I was writing this article I included the sentence “I could do better than ε = 0.427 just tossing a coin”. However that didn’t make the final cut. As for entanglement, I don’t feel much ill will to the people who believe in it. People like to believe in their myth and magic. I don’t feel so charitable to the people who make unjustified claims about their achievements. Especially when the funding they then attract could be going towards something useful like optical computing using displacement current, which is more fundamental than conduction current. Here in the UK the government is throwing billions at “quantum technologies”, and it’s all money down the drain when real physics is going begging.

Yes totally agree John. You know so many quantum papers still go back to Bell’s inequality as the ground truth and proof of entanglement. Someone needs to completely debunk Bell’s inequality in a major pubic and high profile way, otherwise it will continue to just be cited as some undisputable proof. Bell’s inequality just boils down to a somewhat counterintuitive optical effect and law of polarizers, it has nothing to do with quantum mechanics or entanglement.

One of the few examples of a reaction which MUST generate a positive and negative pair only is pair production from two photons. A positron and electron are generated, there cannot be two positrons, or two electrons. So you could model this pair as ‘entangled’ because one is positive and one is negative. According to quantum mechanics the particles could be in either state until you measure them, and then you send one to one side of the universe, and send the other particle to the other side, and then measure one and instantaneously across the universe the other particle flips to become the opposite! It is so fucking stupid and nonsensical, I laugh out loud at anyone who could believe something so fucking stupid. Really complete fantasy and childish thought pattern. The particles are already in their states, you just dont know which one is which. The measurement doesn’t change jack shit. I cannot believe that so many physicists are so deluded and in such a complete brainwashed state to believe such garbage. Of course some dimwit is going to claim that the positron and electron are not truly entangled and only the magical entangles particles will actually do this, but that is just a cult and complete crock of shit.

All points noted Andy. Sadly though, this is not the only example of bad science in contemporary physics. Sometimes I feel like I’m living in a dark age.

So John, what’s your opinion on all this. It’s not that I’m totally against new fission reactors here in the U.S. myself ; I’m just against Ohio’s truly shitty historical nuclear record.

Read “Why the US is upset Rolls Royce will build a small nuclear power station in Wales” on SmartNews: https://l.smartnews.com/p-6zIHsize/cxTJvt

I read it here Greg: https://inews.co.uk/news/politics/us-upset-rolls-royce-nuclear-station-wales-4037596

That Smartnews website wanted me to download an app via a QRcode. Meh.

It sounds like it’s a somewhat hyped story. Like it’s trying to make a big deal about the UK and US governments not getting on. When the UK government doesn’t get on with anybody.

Lots of interesting info to digest here, folks. I’m also off to Wikipedia, to reconfirm how little I remember about Wigner Crystals.

https://www.sciencedaily.com/releases/2025/11/251116105625.htm

Noted, Greg. In other news, I was very pleased to see this video about the Williamson / van der Mark electron: https://www.youtube.com/watch?v=hYyrgDEJLOA. As I speak it has 1,513 comments. It is so sad they are no longer with us.

Just watched that video, it is good and well explained. This is exactly the type of video needed for many people to watch and comment and hopefully make them actually think about it. This theory needs to go mainstream and be analyzed, further developed, and tested. Nice!

I couldn’t agree more, Andy. IMHO the issue is that it means that the Standard Model of particle physics is wrong on multiple counts. The question you might then ask is how did they discover the W boson, the Z boson, and the Higgs boson if these things do not in fact exist?

Anyway, John Williamson told me that he and Martin wrote the paper in 1991, and spent 6 years trying to get it published. They were turned down by just about every journal, and in the end settled for the low-impact Annales de la Fondation Louis de Broglie. John also said the paper was thereafter “studiously ignored”, that he was warned “don’t rock the boat or you’ll never make full professor”, and that journals refused to publish their later papers. He was particularly annoyed with Nature. Maybe I will have a look on my old laptop and see if I can find an email or two.

What we need now is for somebody like Sabine Hossenfelder to give the Williamson van der Mark model some publicity. I don’t think she will. I think she knows about it already, and despite presenting herself as some kind of white knight champion of physics, all she wants to promote is herself. I look forward to being shown to be wrong about that, whereupon I will eat humble pie, grovel, prostrate myself, and apologies profusely. You could try mentioning it to her.

More bad news about possible Chinese Trojan Horses. However, the entire Crypto/Bitcoin universe can’t collapse fucking fast enough to suit me !

Read “Homeland Security thinks Chinese firm’s Bitcoin mining chips could be used for espionage or to sabotage the power grid — Bitmain probed by U.S. gov’t over national security concerns” on SmartNews: https://l.smartnews.com/p-6DyhkVRC/wXLr5D

Bitcoin mining. FFS. I am living in a dark age.

WAVES :

Read “One of the World’s Craziest Waves Explained by the Guy Who Found It” on SmartNews: https://l.smartnews.com/p-6MDb7V1S/quJjuV

I had no intentions of posting under this heading, but here I are…..

After watching and analyzing, this video offers up beautiful examples of vortices within vortices within waves 👋!!!

It would make a cool screen saver too.

Nowadays all of the Nuclear Energy push : is to support Quantum Computing/Crypto/Bitcoin Mutant Multiverses !

This following article is highly alarming, but to be expected. The State of Ohio has a long and dirty nuclear history of it’s own. The Feds and the State already F-ed Over my parents generation of Atomic Workers, so why not dip into that well again?

Source: 614NOW

https://search.app/rZcXJ

Of course my favorite True Life Tale is of Building #470,Area B,WPAFB,Dayton,Ohio. Look it up on your version of Google Earth.

Sigh, And it’s all for nothing, Greg. Sigh. I truly am living in a dark age.